Creating and managing a robust Kubernetes environment demands smooth collaboration between your Network and Application teams. But their priorities and working styles are usually quite different, leading to conflicts with potentially serious consequences – slow app development, delayed deployment, and even network downtime.

Only the success of both teams, working towards a common goal, can ensure today’s modern applications are delivered on time with proper security and scalability. So, how do you leverage the skills and expertise of each team, while helping them work in tandem?

In our whitepaper Get Me to the Cluster, we detail a solution for external access to Kubernetes services that lets Network and Application teams combine their strengths without conflict.

How to Expose Apps in Kubernetes Clusters

The solution works specifically for Kubernetes clusters hosted on premises, with nodes running on bare metal or traditional Linux virtual machines (VMs) and standard Layer 2 switches and Layer 3 routers providing the networking for communication in the data center. It doesn’t extend to cloud‑hosted Kubernetes clusters, because cloud providers don’t allow us to control the core networking in their data centers or the networking in their managed Kubernetes environments.

Before we go over the specifics of our solution, let’s review why other standard ways to expose applications in a Kubernetes cluster don’t work for on‑premises deployments:

- Service – Groups together pods running the same app. This is great for internal pod-to-pod communication, but a Service of the default type (ClusterIP) is only reachable from inside the cluster, so it doesn’t help expose apps externally.

- NodePort – Opens a specific port on every node in the cluster and forwards traffic to the corresponding app. While this allows external users to access the service, it’s not ideal because the configuration is static and you have to use high‑numbered TCP ports (instead of well‑known lower port numbers) and coordinate port numbers with other apps. You also can’t share common TCP ports among different apps.

- LoadBalancer – Uses the NodePort definitions on each node to create a network path from the outside world to your Kubernetes nodes. It’s great for cloud‑hosted Kubernetes, because AWS, Google Cloud Platform, Microsoft Azure, and most other cloud providers support it as an easily configured feature that works well and provides the required public IP address and matching DNS A record for a service. Unfortunately, there’s no equivalent for on‑premises clusters.
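To make the NodePort limitation concrete, here is a minimal Python sketch that checks a set of requested ports against the default NodePort range (30000-32767, adjustable with the API server's `--service-node-port-range` flag) and flags collisions between apps. The app names and the `validate_node_ports` helper are hypothetical and exist only for illustration.

```python
# Default NodePort range used by the Kubernetes API server
# (can be changed with --service-node-port-range).
NODEPORT_MIN, NODEPORT_MAX = 30000, 32767


def validate_node_ports(requests):
    """Return a list of problems for a {app_name: port} mapping."""
    problems = []
    seen = {}  # port -> first app that claimed it
    for app, port in requests.items():
        if not NODEPORT_MIN <= port <= NODEPORT_MAX:
            problems.append(
                f"{app}: port {port} is outside {NODEPORT_MIN}-{NODEPORT_MAX}"
            )
        elif port in seen:
            problems.append(f"{app}: port {port} is already used by {seen[port]}")
        else:
            seen[port] = app
    return problems


if __name__ == "__main__":
    # Hypothetical apps: one wants a well-known port, two want the same NodePort.
    for problem in validate_node_ports({"shop": 443, "blog": 30080, "api": 30080}):
        print(problem)
```

Both complaints in the sample run correspond to the drawbacks listed above: well-known ports such as 443 are not available, and every app needs its own cluster-wide port number.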

Enabling External User Access to On‑Premises Kubernetes Clusters

That leaves us with the Kubernetes Ingress object, which is specifically designed for traffic that flows from users outside the cluster to pods inside the cluster (north‑south traffic). The Ingress creates an external HTTP/HTTPS entry point for the cluster – a single IP address or DNS name at which external users can access multiple services. This is just what’s needed! The Ingress object is implemented by an Ingress controller – in our solution the enterprise‑grade F5 NGINX Ingress Controller based on NGINX Plus.
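As a rough illustration of "one entry point, multiple services", the Python sketch below builds an Ingress manifest for the `networking.k8s.io/v1` API as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts. The hostname, service names, ports, and the `nginx` ingress class name are assumptions made for the example, not values from the whitepaper.

```python
import json


def build_ingress(name, host, routes):
    """Build an Ingress manifest (networking.k8s.io/v1) as a plain dict.

    routes maps a URL path prefix to a (service_name, service_port) pair.
    """
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": svc, "port": {"number": port}}},
        }
        for path, (svc, port) in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Assumed class name; use whatever IngressClass your cluster defines.
            "ingressClassName": "nginx",
            "rules": [{"host": host, "http": {"paths": paths}}],
        },
    }


if __name__ == "__main__":
    # Hypothetical host and services: two apps behind a single DNS name.
    manifest = build_ingress(
        "cafe-ingress",
        "cafe.example.com",
        {"/coffee": ("coffee-svc", 80), "/tea": ("tea-svc", 80)},
    )
    print(json.dumps(manifest, indent=2))
```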

It might surprise you that another key component of the solution is Border Gateway Protocol (BGP), a Layer 3 routing protocol. But a great solution doesn’t have to be complex!

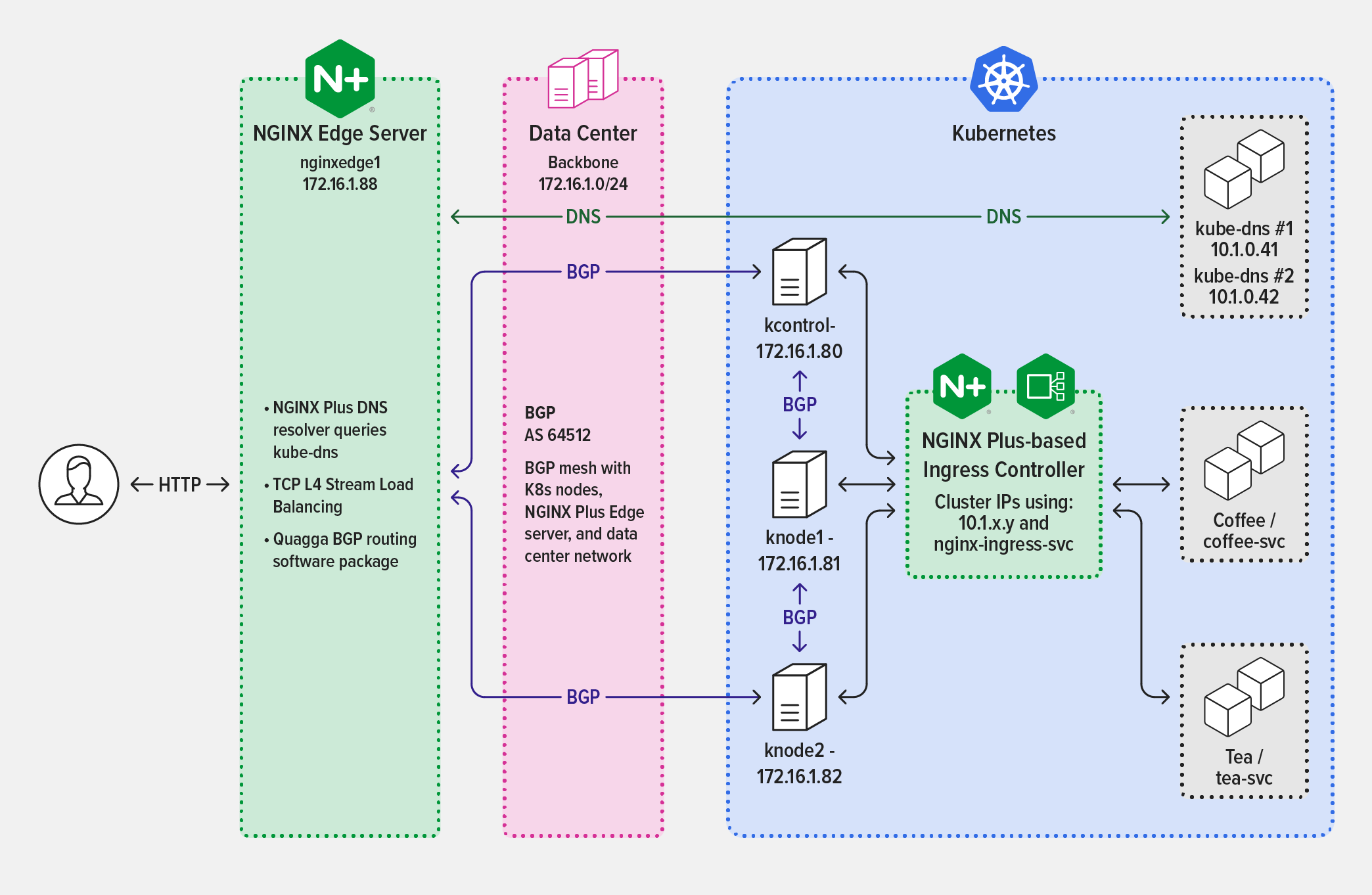

The solution outlined in Get Me to the Cluster actually has four components:

- iBGP network – Internal BGP (iBGP) is used to exchange routing information within an autonomous system (AS) in the data center and helps ensure the network is reliable and scalable. iBGP is already in place and supported by the Network team in most data centers.

- Project Calico CNI networking – Project Calico is an open source networking solution that flexibly connects environments in on‑premises data centers while giving fine‑grained control over traffic flow. We use the CNI plug‑in from Project Calico for networking in the Kubernetes cluster, with BGP enabled. This allows you to control IP address pools allocated for pods, which helps to quickly identify any networking issues.

- NGINX Ingress Controller based on NGINX Plus – With NGINX Ingress Controller you can watch the service endpoint IP addresses of the pods and automatically reconfigure the list of upstream services with no interruption of traffic processing. Application teams can also take advantage of the many other enterprise‑grade Layer 7 HTTP features in NGINX Plus, including active health checks, mTLS, and JWT‑based authentication.

- NGINX Plus as a reverse proxy at the edge – NGINX Plus sits as a reverse proxy at the edge of the Kubernetes cluster, providing a path between switches and routers in the data center and the internal network in the Kubernetes cluster. This functions as a replacement for the Kubernetes LoadBalancer object and uses Quagga for BGP.
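One practical benefit of controlling the pod IP address pools is that any address seen in a log or packet capture can be traced back to a pool quickly. The Python sketch below shows the idea with the standard `ipaddress` module; the pool names and CIDR ranges are hypothetical, and in a real deployment they would come from your Calico IP pool configuration.

```python
import ipaddress

# Hypothetical pod address pools; real values come from your Calico configuration.
POD_POOLS = {
    "cluster-a-pods": ipaddress.ip_network("10.1.0.0/16"),
    "cluster-b-pods": ipaddress.ip_network("10.2.0.0/16"),
}


def pool_for(address):
    """Return the name of the pool containing address, or None if no pool matches."""
    ip = ipaddress.ip_address(address)
    for name, network in POD_POOLS.items():
        if ip in network:
            return name
    return None


if __name__ == "__main__":
    for addr in ("10.1.42.7", "10.2.0.15", "192.168.1.10"):
        print(addr, "->", pool_for(addr))
```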

The diagram illustrates the solution architecture, indicating which protocols the solution components use to communicate, not the order in which data is exchanged during request processing.
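The automatic upstream reconfiguration described for NGINX Ingress Controller can be pictured as a reconciliation step: compare the pod endpoints currently in the upstream group with the endpoints Kubernetes now reports, then add and remove only the difference. The Python sketch below illustrates that idea only; it is not the controller's actual implementation, and the `reconcile_upstream` helper and the addresses are hypothetical.

```python
def reconcile_upstream(current, desired):
    """Return (to_add, to_remove) so that the current upstream list becomes desired.

    Both arguments are iterables of "ip:port" strings.
    """
    current, desired = set(current), set(desired)
    return sorted(desired - current), sorted(current - desired)


if __name__ == "__main__":
    # Hypothetical state: one pod was rescheduled and received a new IP address.
    in_upstream = ["10.1.0.5:8080", "10.1.0.6:8080"]
    from_kubernetes = ["10.1.0.6:8080", "10.1.0.9:8080"]
    to_add, to_remove = reconcile_upstream(in_upstream, from_kubernetes)
    print("add:", to_add)
    print("remove:", to_remove)
```

Because only the changed servers are touched, endpoints that did not change keep serving traffic throughout the update.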

Download the Whitepaper for Free

By working together to implement a solution with well‑defined components, Network and Application teams can easily deliver optimal performance and reliability.

Our solution uses modern networking tools, protocols, and existing architectures. Because it is designed to be inexpensive and easy to implement, manage, and support, it reduces friction and builds bridges between your teams.

To see the code in action and learn step-by-step how to deploy our solution, download Get Me to the Cluster for free.