At NGINX we’ve been talking for the last several years about the need to make applications truly modern and adaptive – portable, cloud native, resilient, scalable, and easy to update. More recently, two concepts have come to the fore that facilitate the creation and delivery of modern apps. The first is Platform Ops, where a corporate‑level platform team curates, maintains, connects, and secures all the tools that development and DevOps teams need to do their jobs. The second is shifting left, which means integrating production‑grade security, networking, and monitoring into applications during earlier stages of the development lifecycle. Developers end up with more responsibility for functions that used to belong to ITOps, but at the same time have more choices and more independence in exactly how they implement those functions.

While this sounds good, in reality it’s challenging to implement Platform Ops and to “shift-left” infrastructure and operations tooling. For one thing, more and more apps are being deployed in highly distributed ways, in containerized environments, and using one of the growing number of Kubernetes orchestration engines. Companies also want to deploy their apps in multiple environments without getting bogged down by the differences among clouds and between clouds and on‑premises environments.

A “Golden Image” for Modern Apps

As they should, our customers and community continue to request our help with the challenges they face. They want all the goodness, but stapling together all the pieces – security, networking, observability and performance monitoring, scaling – requires real work. Making the resulting platform robust enough for production environments requires yet more work. They wonder: “Why isn’t there a ‘golden image’ for modern apps which we can launch from a single repo?”

That’s a good question, and we took it as our own challenge to come up with a good answer. First, we reframed the question in more concrete terms. We think our customers and community are asking this: “Can you help us integrate different software products into a more cohesive whole, tune the stack to nail down the right configurations and settings, and save us the work and trouble? And can you make it easier to run the application in different clouds without having to make major configuration changes due to differences in underlying services and functionality?”

We see a solution that really addresses these questions as beneficial to the entire community – not just our partners at hundreds of enterprises and all the major cloud vendors – truly a non-zero-sum win. Ideally, the solution is not a “toy”, but code that’s solid, tested, and ready to deploy in live production applications running in Kubernetes environments. And, frankly, we want anyone to be able to steal our work straight out of GitHub.

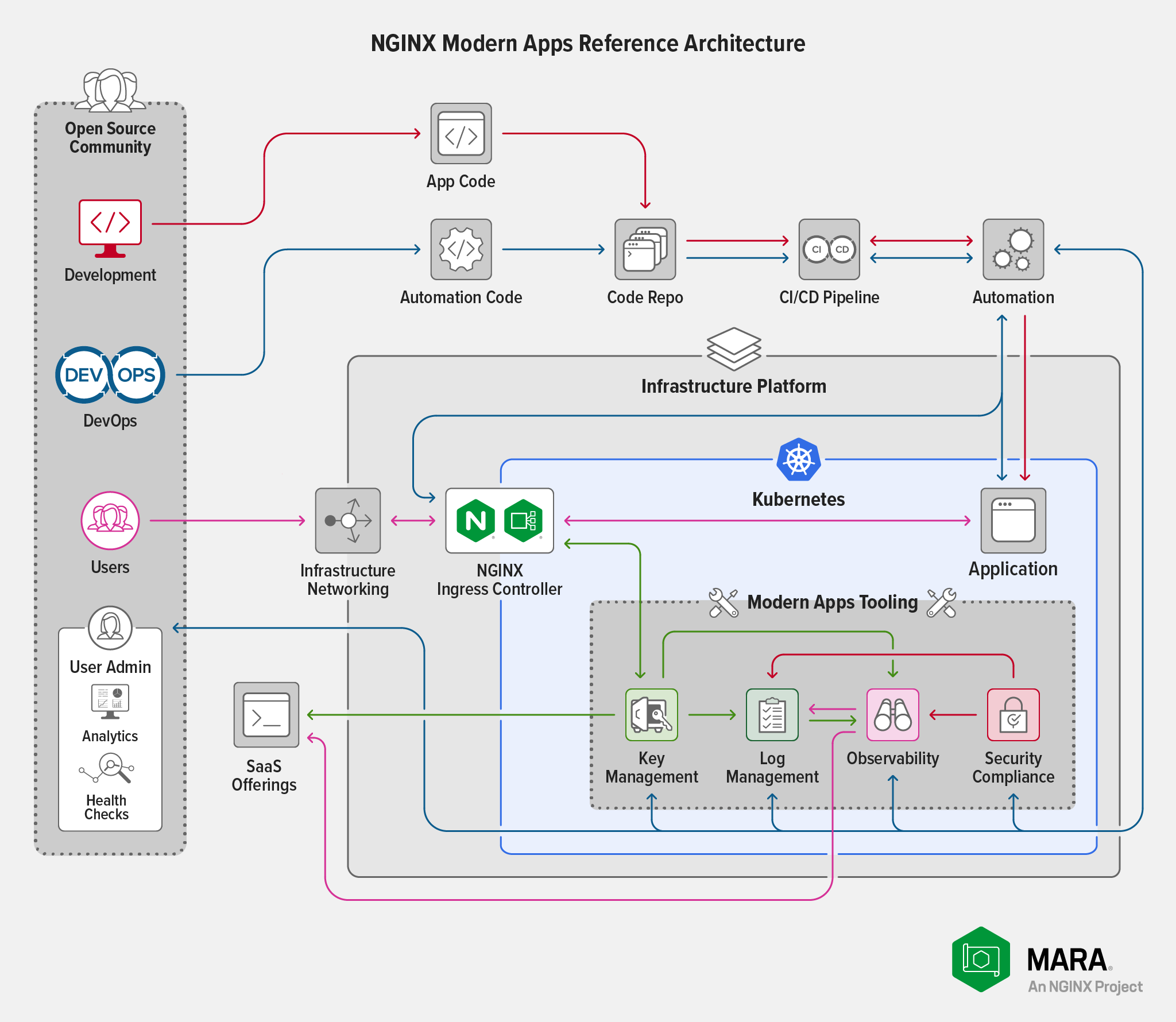

To cut to the chase, today at NGINX Sprint 2.0 we’re announcing the launch of our solution: the first iteration of the Modern Apps Reference Architecture (MARA), an open source architecture and deployment model for modern apps. We hope you like it, use it, steal it, and, even better, modify it or fork it to improve or customize. This post runs through what we built and how it works.

Defining Modern Adaptive Applications

First, let’s define the ideal modern, adaptive application. It might be composed of microservices, be containerized, and adhere to cloud‑native design principles (loosely coupled, easy to scale, not tied to infrastructure) – but it doesn’t have to be. Part of the ethos of modern applications is architecting specifically to take advantage of infrastructure abstraction. This definition is straightforward but important because it establishes the basic template for all reference architectures.

The key pillars of a modern application architecture include portability, scalability, resilience, and agility.

- Portability – It’s easy to deploy the application on multiple types of devices and infrastructures, on public clouds, and on premises.

- Scalability – The app can quickly and seamlessly scale up or down to accommodate spikes or reductions in demand, anywhere in the world.

- Resilience – The app can gracefully fail over to newly spun‑up clusters or virtual environments in different availability regions, clouds, or data centers.

- Agility – The app can be quickly updated through automated CI/CD pipelines; in the world of modern apps, this also implies a higher code velocity and more frequent code pushes.

Designing Our Reference Architecture

We wanted to create a platform that fulfilled the basic requirements of our modern apps definition and deployment pattern. Aside from the technical goals, we wanted to illustrate modern app‑design principles and encourage our community to deploy on Kubernetes. And yes, we wanted to offer “stealable” code that developers, DevOps, and Platform Ops teams can play with, modify, and improve on. In short, we wanted to offer:

- An easily deployable, production‑ready Kubernetes architecture that isn’t a toy

- A platform that highlights how partner products work on Kubernetes

- A push button for easy build and deployment of a Kubernetes Ingress controller

- A test environment for future product and alliance integrations

- A pluggable deployment framework

- A single repo of open source code to simplify discovery and adoption

Here are the design and partnership choices we’ve made for the first version of the platform (for our plans for the next version, see Many More Integrations and More Flexibility in Version 2). We strongly believe that making our reference app partner‑inclusive is key to driving both partner and community engagement.

- Open source development – https://github.com/nginxinc/kic-reference-architectures/; we built the repo in collaboration with the NGINX/F5 community

- Deployment target – Amazon Elastic Kubernetes Service (EKS)

- Infrastructure-as-Code automation – Python and Pulumi, which accepts Terraform recipes and automation scripts from nearly all popular coding languages

- Ingress Controller image – NGINX Plus prebuilt for subscribers, NGINX Open Source prebuilt on Docker Hub, and either version built from source

- Log management (storage and analysis) – Elastic, because it is very popular with our users

- Performance monitoring and dashboards – Prometheus and Grafana

- Continuous delivery (CD) – Spinnaker

- TLS – cert-manager

- Demo application – Google Bank of Anthos

How the Code Is Deployed

To install and deploy the sample application, you issue a single command to invoke the start‑up script, and the following Pulumi projects are executed in the indicated order. Each project name maps to a directory name relative to the root directory of the repository. For details, see the README.
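To make the idea of running projects “in the indicated order” concrete, here is a minimal Python sketch of a sequential runner. It is not the repository’s actual start‑up script: the function names `pulumi_up` and `deploy_in_order` are hypothetical, and the sketch assumes that each project directory can be deployed with `pulumi up --yes` and that a Pulumi stack has already been selected for it. Only the project names and their order come from this post.

```python
import subprocess
from pathlib import Path
from typing import Callable, Iterable, List

# Project directories, in the order given in this post.
PROJECTS = [
    "vpc", "eks", "ecr", "kic-image-build", "kic-image-push",
    "kic-helm-chart", "logstore", "logagent", "certmgr", "anthos",
]


def pulumi_up(project_dir: Path) -> None:
    """Run `pulumi up` non-interactively inside one project directory.

    Assumption: a stack is already selected for this project.
    """
    subprocess.run(["pulumi", "up", "--yes"], cwd=project_dir, check=True)


def deploy_in_order(
    root: Path,
    projects: Iterable[str] = PROJECTS,
    run: Callable[[Path], None] = pulumi_up,
) -> List[str]:
    """Deploy each project in sequence, stopping at the first failure.

    Returns the names of the projects that were deployed.
    """
    deployed: List[str] = []
    for name in projects:
        run(root / name)  # raises on failure, so later projects are skipped
        deployed.append(name)
    return deployed
```

Calling `deploy_in_order(Path("."))` from the repository root would invoke the real Pulumi CLI. Passing a stub as `run` lets you check the ordering and the stop‑on‑failure behavior without touching a cloud account.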

vpc - Defines and installs VPC and subnets to use with EKS

└─eks - Deploys EKS

└─ecr - Configures ECR for use in EKS cluster

└─kic-image-build - Builds new NGINX Ingress Controller image

└─kic-image-push - Pushes image built in previous step to ECR

└─kic-helm-chart - Deploys NGINX Ingress Controller to EKS cluster

└─logstore - Deploys Elastic log store to EKS cluster

└─logagent - Deploys Elastic (filebeat) logging agent to EKS cluster

└─certmgr - Deploys cert-manager.io Helm chart to EKS cluster

└─anthos - Deploys Bank of Anthos application to EKS cluster

Many More Integrations and More Flexibility in Version 2

We recognize that our initial effort might not provide all the integrations you need for your Kubernetes environment. Platform Ops is about smart – but not unlimited – choice. To make it easier for Platform Ops, DevOps, and developer teams to try out and potentially adopt our new reference platform, we plan a lot of improvements in the near term, including:

- Stand up DigitalOcean, OpenShift, Rancher, vSphere, and other Kubernetes environments

- Integrate with NGINX Controller to manage and monitor NGINX Plus Ingress Controller

[Editor – NGINX Controller is now F5 NGINX Management Suite.]

- Provide out-of-the-box configuration for NGINX App Protect

- Integrate with F5 products and services like BIG‑IP, Cloud Services, and Volterra

- Integrate with NGINX Service Mesh and Istio‑based Aspen Mesh

- Integrate natively with Terraform and other automation tools

- Support other CI/CD options

- Deploy separate or multiple clusters for infrastructure and application services

We hope that our work can become a framework for other reference platforms and a “stealable” starting point for building out all types of differentiated modern applications. Because Kubernetes is such a powerful mechanism both for building modern applications and for empowering Platform Ops and shift‑left culture, the more expansive and pluggable our reference architecture, the better. We are excited to see what you, the community, think of our work and, more importantly, what you build with it.

Get Started with the Reference Architecture

Download our reference platform and take it for a spin. Let us know what you think and what you want us to build next. Pull requests are more than welcome. We’re eager to partner with you on the next generation of modern, adaptable, “stealable” applications that pay it forward to the community and all developers.

Related Posts

This post is part of a series. As we add capabilities to MARA over time, we’re publishing the details on the blog:

- A New, Open Source Modern Apps Reference Architecture (this post)

- Integrating OpenTelemetry into the Modern Apps Reference Architecture – A Progress Report

- MARA: Now Running on a Workstation Near You

- Announcing Version 1.0.0 of the NGINX Modern Apps Reference Architecture