According to The State of Application Strategy in 2022 report from F5, digital transformation in the enterprise continues to accelerate globally. Most enterprises deploy between 200 and 1,000 apps spanning multiple cloud zones, with today’s apps moving from monolithic to modern distributed architectures.

Kubernetes first hit the tech scene for mainstream use in 2016, a mere six years ago. Yet today more than 75% of organizations world‑wide run containerized applications in production, up 30% from 2019. One critical issue in Kubernetes environments, including Amazon Elastic Kubernetes Service (EKS), is security. All too often security is “bolted on” at the end of the app development process, and sometimes not even until after a containerized application is already up and running.

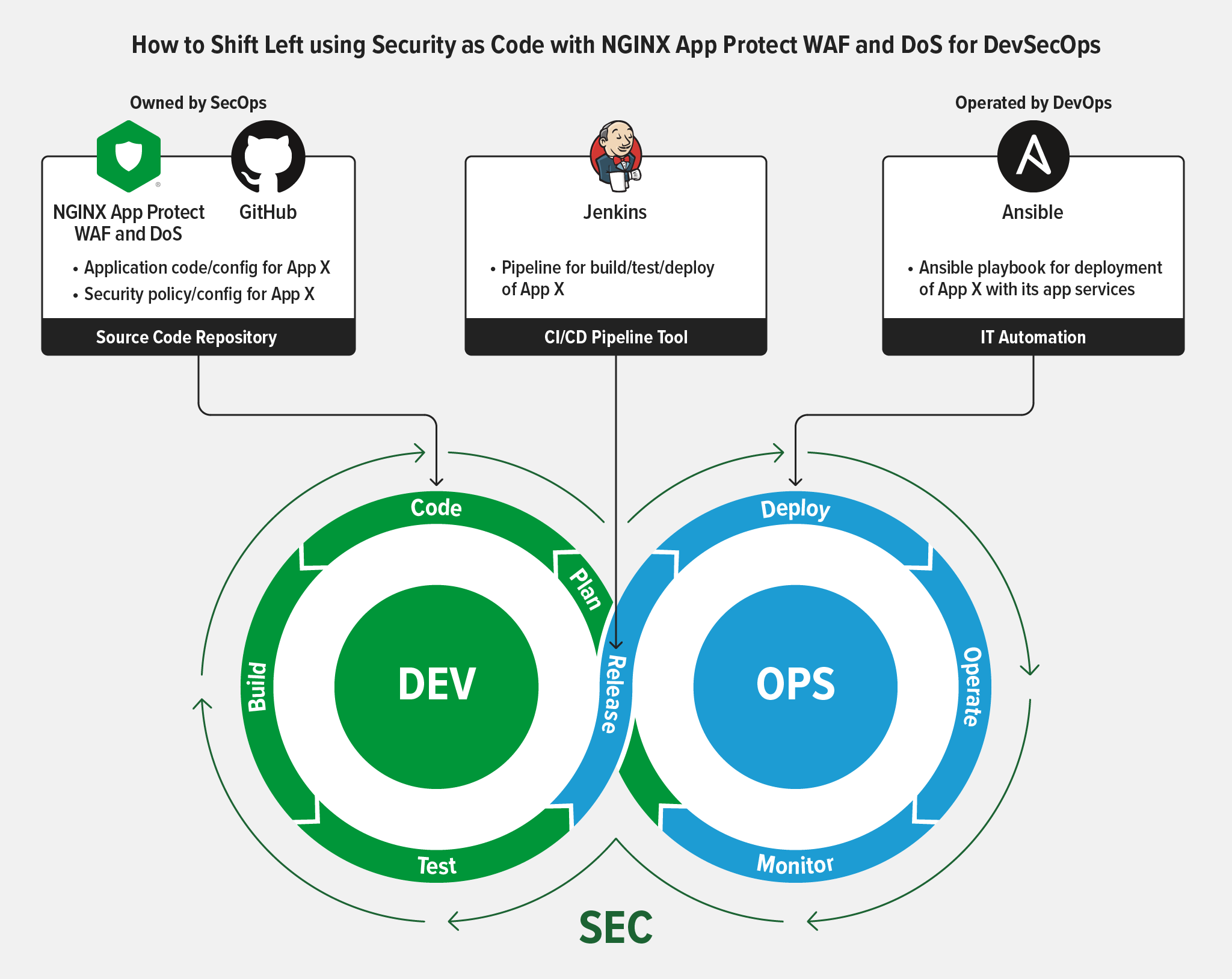

The current wave of digital transformation, accelerated by the COVID‑19 pandemic, has forced many businesses to take a more holistic approach to security and consider a “shift left” strategy. Shifting security left means introducing security measures early into the software development lifecycle (SDLC) and using security tools and controls at every stage of the CI/CD pipeline for applications, containers, microservices, and APIs. It represents a move to a new paradigm called DevSecOps, where security is added to DevOps processes and integrates into the rapid release cycles typical of modern software app development and delivery.

DevSecOps represents a significant cultural shift. Security and DevOps teams work with a common purpose: to bring high‑quality products to market quickly and securely. Developers no longer feel stymied at every turn by security procedures that stop their workflow. Security teams no longer find themselves fixing the same problems repeatedly. This makes it possible for the organization to maintain a strong security posture, catching and preventing vulnerabilities, misconfigurations, and violations of compliance or policy as they occur.

Shifting security left and automating security as code protects your Amazon EKS environment from the outset. Learning how to become production‑ready at scale is a big part of building a Kubernetes foundation. Proper governance of Amazon EKS helps drive efficiency, transparency, and accountability across the business while also controlling cost. Strong governance and security guardrails create a framework for better visibility and control of your clusters. Without them, your organization is exposed to greater risk of security breaches and the accompanying long-tail costs of damage to revenue and reputation.

To find out more about what to consider when moving to a security‑first strategy, take a look at this recent report from O’Reilly, Shifting Left for Application Security.

Automating Security for Amazon EKS with GitOps

Automation is an important enabler for DevSecOps, helping to maintain consistency even at a rapid pace of development and deployment. Like infrastructure as code, automating with a security-as-code approach entails using declarative policies to maintain the desired security state.

GitOps is an operational framework that facilitates automation to support and simplify application delivery and cluster management. The main idea of GitOps is having a Git repository that stores declarative policies of Kubernetes objects and the applications running on Kubernetes, defined as code. An automated process completes the GitOps paradigm to make the production environment match all stored state descriptions.

The repository acts as a source of truth in the form of security policies, which are then referenced by declarative configuration-as-code descriptions as part of the CI/CD pipeline process. As an example, NGINX maintains a GitHub repository with an Ansible role for F5 NGINX App Protect, which we hope is useful to teams that want to shift security left.

With such a repo, all it takes to deploy a new application or update an existing one is to update the repo. The automated process manages everything else, including applying configurations and making sure that updates are successful. This ensures that everything happens in the version control system for developers and is synchronized to enforce security on business‑critical applications.
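To make the idea concrete, here is a minimal sketch of how a GitOps controller could be pointed at such a repo. It assumes Argo CD as the automated process (this article does not prescribe a specific tool), and the repository URL, path, and resource names are hypothetical placeholders.

```yaml
# Sketch only: assumes Argo CD is installed in the "argocd" namespace.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: eks-security-policies        # hypothetical name
  namespace: argocd
spec:
  project: default
  source:
    # Hypothetical Git repository that holds the declarative policies
    repoURL: https://git.example.com/secops/eks-security-policies.git
    targetRevision: main
    path: policies
  destination:
    server: https://kubernetes.default.svc   # the cluster Argo CD runs in
    namespace: default
  syncPolicy:
    automated:
      prune: true      # remove objects that were deleted from the repo
      selfHeal: true   # revert manual changes that drift from the repo
```

With automated sync enabled, a merged commit is the only action needed to roll out or update a policy, and the Git history doubles as the audit trail.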

When running on Amazon EKS, GitOps makes security seamless and robust, while virtually eliminating human errors and keeping track of all versioning changes that are applied over time.

NGINX App Protect and NGINX Ingress Controller Protect Your Apps and APIs in Amazon EKS

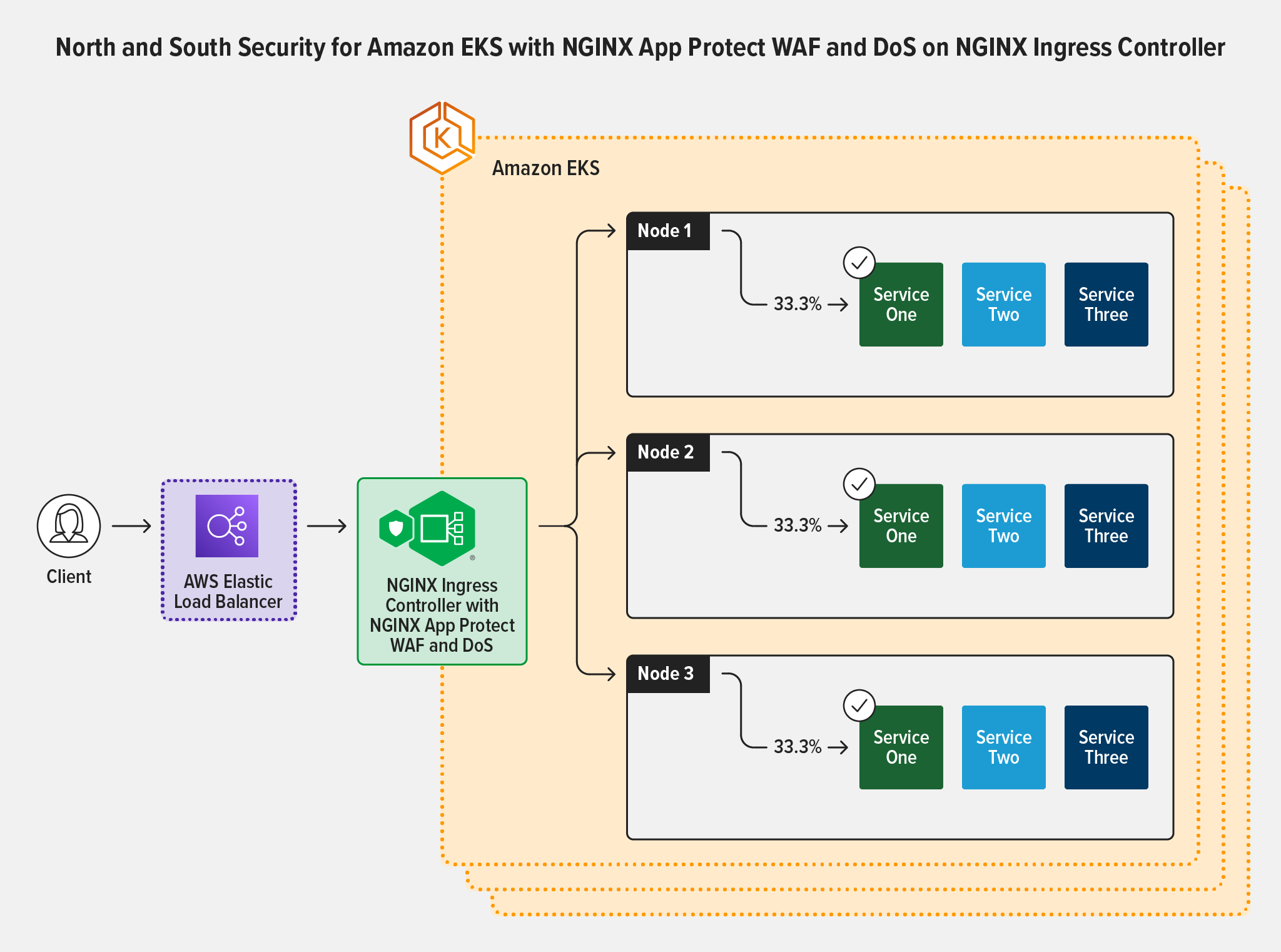

A robust design for Kubernetes security policy must accommodate the needs of both SecOps and DevOps and include provisions for adapting as the environment scales. Kubernetes clusters can be shared in many ways. For example, a cluster might have multiple applications running in it and sharing its resources, while in another case there are multiple instances of one application, each for a different end user or group. This implies that security boundaries are not always sharply defined and there is a need for flexible and fine‑grained security policies.

The overall security design must be flexible enough to accommodate exceptions, must integrate easily into the CI/CD pipeline, and must support multi‑tenancy. In the context of Kubernetes, a tenant is a logical grouping of Kubernetes objects and applications that are associated with a specific business unit, team, use case, or environment. Multi‑tenancy, then, means multiple tenants securely sharing the same cluster, with boundaries between tenants enforced based on technical security requirements that are tightly connected to business needs.

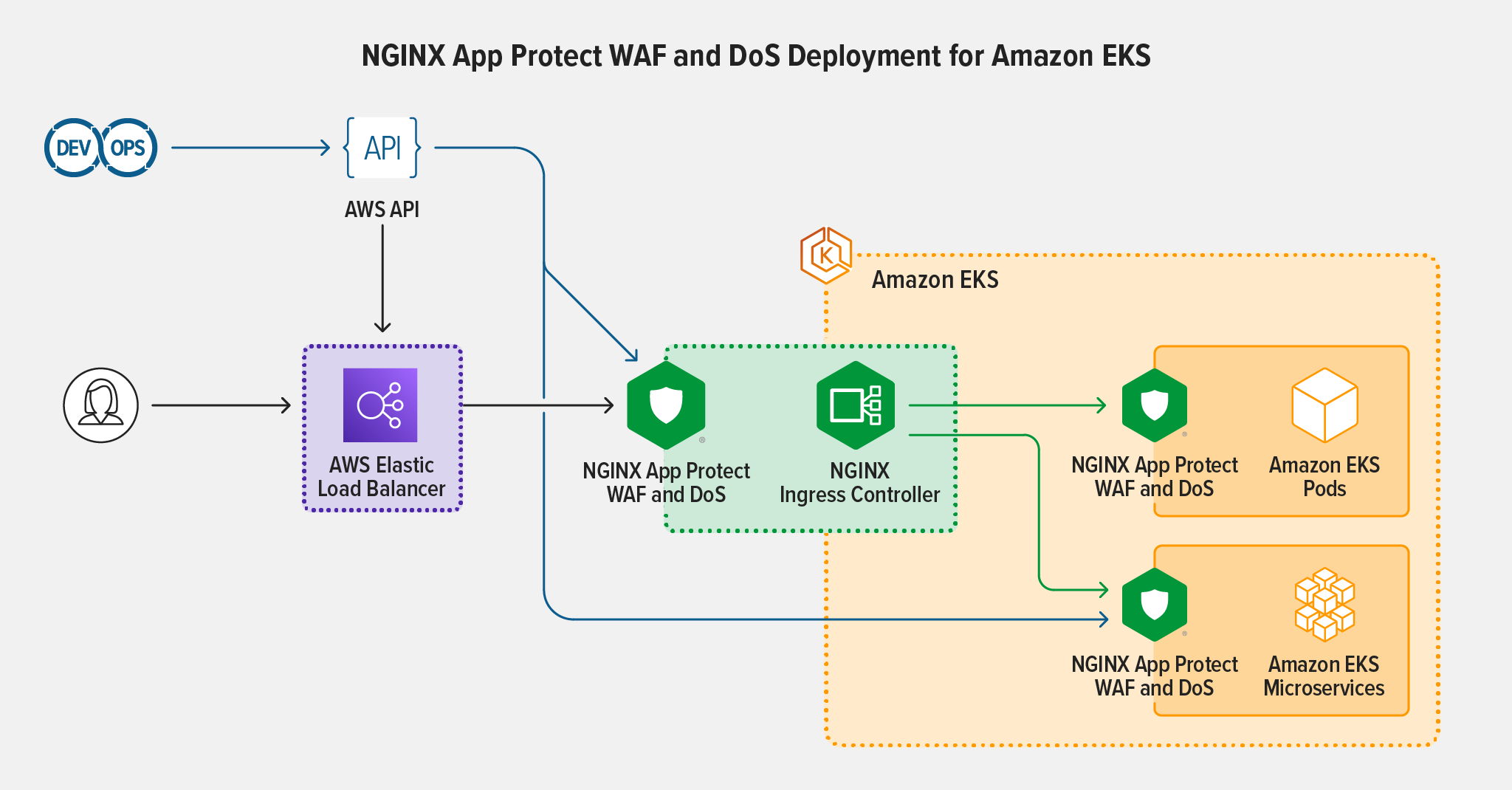

An easy way to implement low‑latency, high‑performance security on Amazon EKS is by embedding the NGINX App Protect WAF and DoS modules with NGINX Ingress Controller. None of our competitors provides this type of inline solution. Using one product with synchronized technology provides several advantages, including reduced compute time, costs, and tool sprawl. Here are some additional benefits:

- Securing the application perimeter – In a well‑architected Kubernetes deployment, NGINX Ingress Controller is the only point of entry for data‑plane traffic flowing to services running within Kubernetes, making it an ideal location for a WAF and DoS protection.

- Consolidating the data plane – Embedding the WAF within NGINX Ingress Controller eliminates the need for a separate WAF device. This reduces complexity, cost, and the number of points of failure.

- Consolidating the control plane – WAF and DoS configuration can be managed with the Kubernetes API, making it significantly easier to automate CI/CD processes. NGINX Ingress Controller configuration complies with Kubernetes role‑based access control (RBAC) practices, so you can securely delegate the WAF and DoS configurations to a dedicated DevSecOps team.
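As an illustration of the RBAC delegation described in the last bullet, the following sketch grants a DevSecOps group rights over the WAF-related custom resources in a single namespace. The Role, RoleBinding, and group names are hypothetical, and the resource plurals are assumed to match the CRDs installed with your NGINX Ingress Controller version.

```yaml
# Sketch only: names are placeholders, adjust to your cluster.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: waf-policy-editor            # hypothetical name
  namespace: default
rules:
- apiGroups: ["appprotect.f5.com"]
  resources: ["appolicies", "aplogconfs", "apusersigs"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
- apiGroups: ["k8s.nginx.org"]
  resources: ["policies"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: waf-policy-editor-binding    # hypothetical name
  namespace: default
subjects:
- kind: Group
  name: devsecops                    # hypothetical group from your identity provider
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: waf-policy-editor
  apiGroup: rbac.authorization.k8s.io
```

Application teams can then be given rights over VirtualServer objects only, so that policy authorship stays with the security team.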

The configuration objects for NGINX App Protect WAF and DoS are consistent across both NGINX Ingress Controller and NGINX Plus. A master configuration can easily be translated and deployed to either one, making it even easier to manage WAF configuration as code and deploy it to any application environment.

To build NGINX App Protect WAF and DoS into NGINX Ingress Controller, you must have subscriptions for both NGINX Plus and NGINX App Protect WAF or DoS. A few simple steps are all it takes to build the integrated NGINX Ingress Controller image (Docker container). After deploying the image (manually or with Helm charts, for example), you can manage security policies and configuration using the familiar Kubernetes API.
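For the Helm route, a values file along the following lines could enable both modules. Treat it as a sketch rather than a tested configuration: the image repository and tag are placeholders for the image you built, and the key names are assumed to match the NGINX Ingress Controller Helm chart version in use, so check them against that chart's values reference.

```yaml
# values.yaml (sketch): enable NGINX Plus with App Protect WAF and DoS
controller:
  nginxplus: true
  image:
    repository: "registry.example.com/nginx-plus-ingress"   # placeholder for your own registry
    tag: "custom-tag"                                        # placeholder for your image tag
  appprotect:
    enable: true       # load the App Protect WAF module
  appprotectdos:
    enable: true       # load the App Protect DoS module
```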

The NGINX Ingress Controller based on NGINX Plus provides granular control and management of authentication, RBAC‑based authorization, and external interactions with pods. When the client is using HTTPS, NGINX Ingress Controller can terminate TLS and decrypt traffic to apply Layer 7 routing and enforce security.
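As a small illustration of TLS termination, a VirtualServer can reference a Kubernetes TLS Secret so that traffic is decrypted at the Ingress layer before routing and security enforcement. In this sketch the resource name and the Secret name are hypothetical, and the Secret is assumed to be of type kubernetes.io/tls in the same namespace.

```yaml
# Sketch only: terminates HTTPS for the host at NGINX Ingress Controller.
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: eshop-vs-tls       # hypothetical name
spec:
  host: eshop.lab.local
  tls:
    secret: eshop-tls      # hypothetical Secret holding the certificate and key
  upstreams:
  - name: eshop-upstream
    service: eshop-service
    port: 80
  routes:
  - path: /
    action:
      pass: eshop-upstream
```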

NGINX App Protect WAF and NGINX App Protect DoS can then be deployed to enforce security policies to protect against point attacks at Layer 7 as a lightweight software security solution. NGINX App Protect WAF secures Kubernetes apps against OWASP Top 10 attacks, and provides advanced signatures and threat protection, bot defense, and Dataguard protection against exploitation of personally identifiable information (PII). NGINX App Protect DoS provides an additional line of defense at Layers 4 and 7 to mitigate sophisticated application‑layer DoS attacks with user behavior analysis and app health checks to protect against attacks that include Slow POST, Slowloris, flood attacks, and Challenger Collapsar.

Such security measures protect both REST APIs and applications accessed using web browsers. API security is also enforced at the Ingress level following the north‑south traffic flow.

NGINX Ingress Controller with NGINX App Protect WAF and DoS can secure Amazon EKS traffic on a per‑request basis rather than per‑service: this is a more useful view of Layer 7 traffic and a far better way to enforce SLAs and north‑south WAF security.

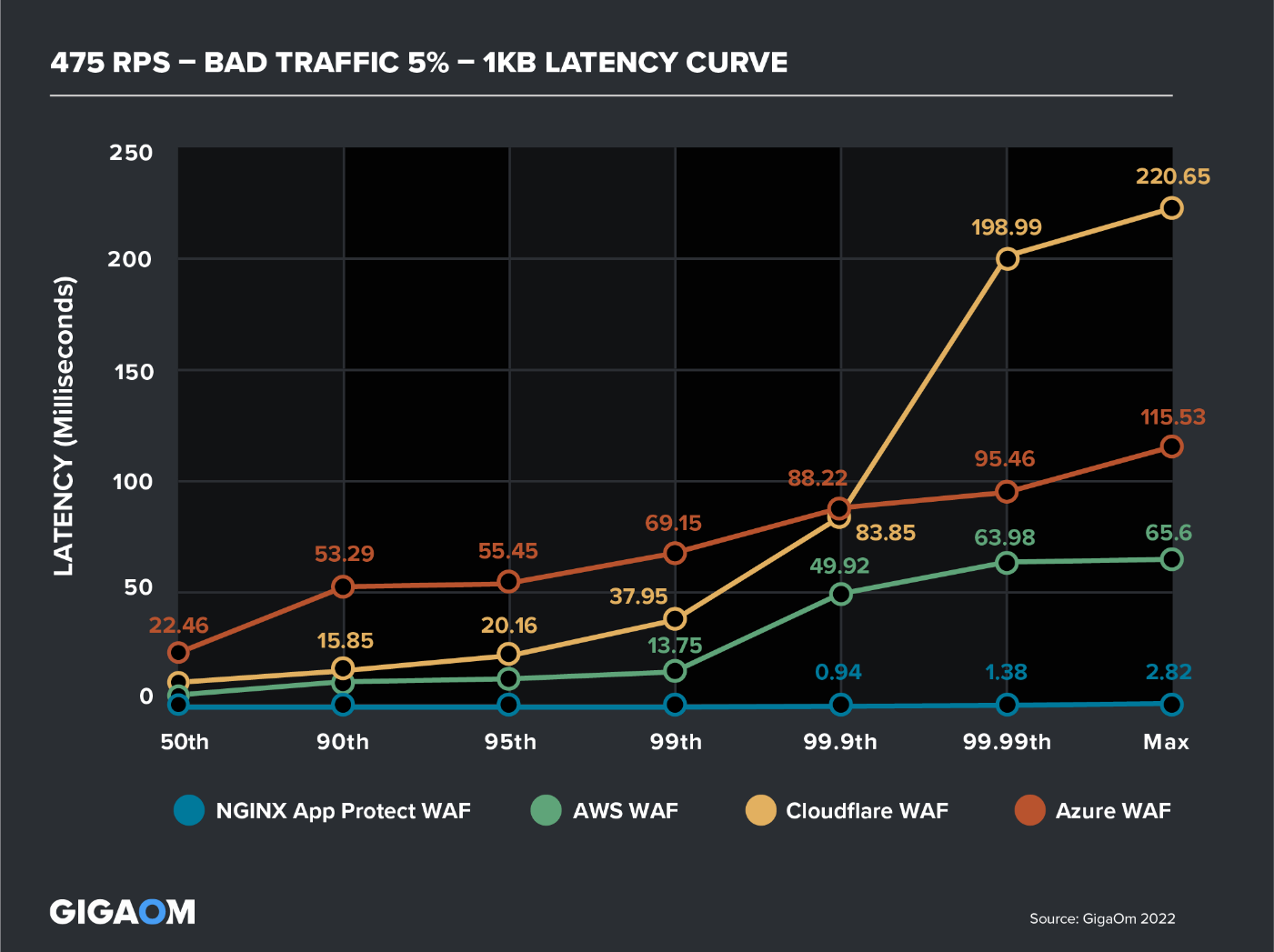

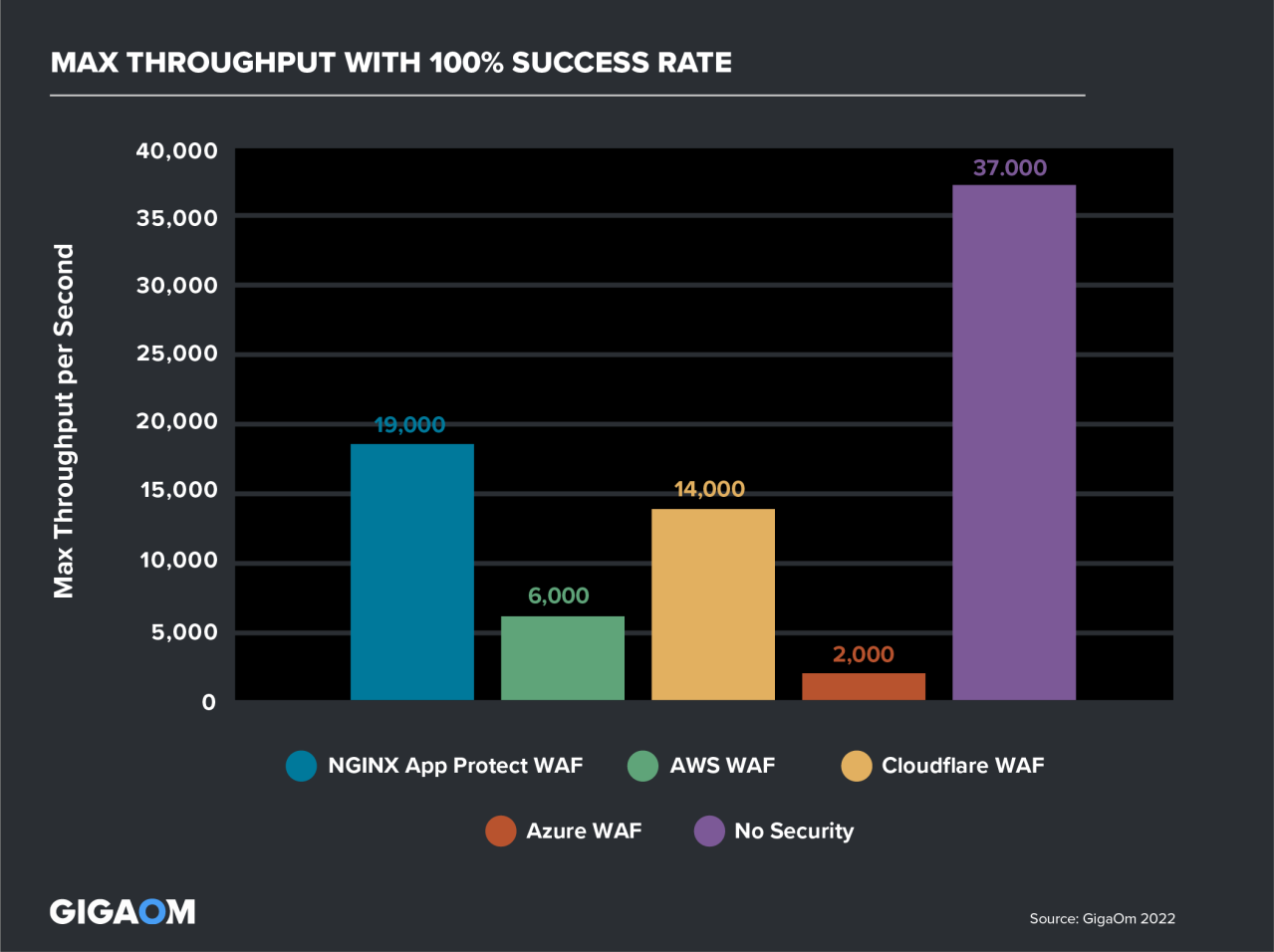

The latest High‑Performance Web Application Firewall Testing report from GigaOm shows how NGINX App Protect WAF consistently delivers strong app and API security while maintaining high performance and low latency, outperforming the other three WAFs tested – AWS WAF, Azure WAF, and Cloudflare WAF – at all tested attack rates.

As an example, Figure 4 shows the results of a test where the WAF had to handle 500 requests per second (RPS), with 95% (475 RPS) of requests valid and 5% of requests (25 RPS) “bad” (simulating script injection). At the 99th percentile, latency for NGINX App Protect WAF was 10x less than AWS WAF, 60x less than Cloudflare WAF, and 120x less than Azure WAF.

Figure 5 shows the highest throughput each WAF achieved at 100% success (no 5xx or 429 errors) with less than 30 milliseconds latency for each request. NGINX App Protect WAF handled 19,000 RPS versus Cloudflare WAF at 14,000 RPS, AWS WAF at 6,000 RPS, and Azure WAF at only 2,000 RPS.

How to Deploy NGINX App Protect and NGINX Ingress Controller on Amazon EKS

NGINX App Protect WAF and DoS leverage an app‑centric security approach with fully declarative configurations and security policies, making it easy to integrate security into your CI/CD pipeline for the application lifecycle on Amazon EKS.

NGINX Ingress Controller provides several custom resource definitions (CRDs) to manage every aspect of web application security and to support a shared responsibility and multi‑tenant model. CRD manifests can be applied following the namespace grouping used by the organization, to support ownership by more than one operations group.
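One way to picture this namespace grouping is to keep the security objects in a namespace owned by SecOps and let an application team reference them from its own namespace. The sketch below assumes hypothetical namespaces named secops and eshop, and assumes that cross-namespace policy references are permitted in your installation.

```yaml
# Sketch only: owned by SecOps, lives in the "secops" namespace.
apiVersion: k8s.nginx.org/v1
kind: Policy
metadata:
  name: waf-policy
  namespace: secops
spec:
  waf:
    enable: true
    apPolicy: "secops/sample-policy"   # APPolicy also kept in the secops namespace
---
# Sketch only: owned by the application team, lives in the "eshop" namespace.
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: eshop-vs
  namespace: eshop
spec:
  host: eshop.lab.local
  policies:
  - name: waf-policy
    namespace: secops                  # reference to the SecOps-owned policy
  upstreams:
  - name: eshop-upstream
    service: eshop-service
    port: 80
  routes:
  - path: /
    action:
      pass: eshop-upstream
```

Splitting ownership this way lets each group manage its own manifests with separate RBAC rules.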

When publishing an application on Amazon EKS, you can build in security by leveraging the automation pipeline already in use and layering the WAF security policy on top.

Additionally, with NGINX App Protect on NGINX Ingress Controller you can configure resource usage thresholds for both CPU and memory utilization, to keep NGINX App Protect from starving other processes. This is particularly important in multi‑tenant environments such as Kubernetes which rely on resource sharing and can potentially suffer from the ‘noisy neighbor’ problem.
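The thresholds are set globally through the NGINX Ingress Controller ConfigMap. The sketch below uses illustrative values, and the ConfigMap name and namespace are assumptions that depend on how the controller was installed. Each key takes a high and a low percentage. Confirm the exact semantics and defaults in the documentation for your version before relying on them.

```yaml
# Sketch only: threshold values are illustrative, not recommendations.
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config          # assumed ConfigMap name for the controller
  namespace: nginx-ingress    # assumed controller namespace
data:
  # CPU utilization thresholds (percent) for NGINX App Protect
  app-protect-cpu-thresholds: "high=80 low=60"
  # Physical memory utilization thresholds (percent) for NGINX App Protect
  app-protect-physical-memory-util-thresholds: "high=80 low=60"
```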

Configuring Logging with NGINX CRDs

The logs for NGINX App Protect and NGINX Ingress Controller are separate by design, reflecting how security teams usually operate independently of DevOps and application owners. You can send NGINX App Protect logs to any syslog destination that is reachable from the Kubernetes pods by setting the app-protect-security-log-destination annotation to the cluster IP address of the syslog pod. You can also use the APLogConf resource to specify which NGINX App Protect events are logged, and therefore which entries are pushed to the syslog pod. NGINX Ingress Controller logs are written to standard output, as is usual for Kubernetes containers.
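For a standard Ingress resource, the annotation-based setup could look like the sketch below. The Ingress name, host, and backend Service are hypothetical, the ingress class name is assumed to be nginx, and the syslog address reuses the one that appears in the Policy example later in this article. The annotation that names the log configuration points at an APLogConf called logconf in the dvwa namespace.

```yaml
# Sketch only: enables App Protect security logging on one Ingress.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: dvwa-ingress                 # hypothetical name
  namespace: dvwa
  annotations:
    appprotect.f5.com/app-protect-enable: "True"
    appprotect.f5.com/app-protect-security-log-enable: "True"
    # namespace/name of the APLogConf resource to use
    appprotect.f5.com/app-protect-security-log: "dvwa/logconf"
    # cluster IP address and port of the syslog pod's Service
    appprotect.f5.com/app-protect-security-log-destination: "syslog:server=10.105.238.128:5144"
spec:
  ingressClassName: nginx            # assumed ingress class name
  rules:
  - host: dvwa.example.com           # hypothetical host
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: dvwa-service       # hypothetical Service
            port:
              number: 80
```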

This sample APLogConf resource specifies that all requests are logged (not only malicious ones) and sets the maximum message and request sizes that can be logged.

```yaml
apiVersion: appprotect.f5.com/v1beta1
kind: APLogConf
metadata:
  name: logconf
  namespace: dvwa
spec:
  content:
    format: default
    max_message_size: 64k
    max_request_size: any
  filter:
    request_type: all
```

Defining a WAF Policy with NGINX CRDs

The APPolicy object is a custom resource that defines a WAF security policy with signature sets and security rules using a declarative approach. This approach applies to both NGINX App Protect WAF and DoS; the following example focuses on WAF. Policy definitions are usually stored in the organization’s source of truth as part of the SecOps catalog.

```yaml
apiVersion: appprotect.f5.com/v1beta1
kind: APPolicy
metadata:
  name: sample-policy
spec:
  policy:
    name: sample-policy
    template:
      name: POLICY_TEMPLATE_NGINX_BASE
    applicationLanguage: utf-8
    enforcementMode: blocking
    signature-sets:
    - name: Command Execution Signatures
      alarm: true
      block: true
    [...]
```

Once the security policy manifest has been applied on the Amazon EKS cluster, create an APLogConf object called log-violations to define the type and format of entries written to the log when a request violates a WAF policy:

```yaml
apiVersion: appprotect.f5.com/v1beta1
kind: APLogConf
metadata:
  name: log-violations
spec:
  content:
    format: default
    max_message_size: 64k
    max_request_size: any
  filter:
    request_type: illegal
```

The waf-policy Policy object then references sample-policy for NGINX App Protect WAF to enforce on incoming traffic when the application is exposed by NGINX Ingress Controller. It references log-violations to define the format of log entries sent to the syslog server specified in the logDest field.

```yaml
apiVersion: k8s.nginx.org/v1
kind: Policy
metadata:
  name: waf-policy
spec:
  waf:
    enable: true
    apPolicy: "default/sample-policy"
    securityLog:
      enable: true
      apLogConf: "default/log-violations"
      logDest: "syslog:server=10.105.238.128:5144"
```

Deployment is complete when DevOps publishes a VirtualServer object that configures NGINX Ingress Controller to expose the application on Amazon EKS:

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: eshop-vs
spec:
  host: eshop.lab.local
  policies:
  - name: default/waf-policy
  upstreams:
  - name: eshop-upstream
    service: eshop-service
    port: 80
  routes:
  - path: /
    action:
      pass: eshop-upstream
```

The VirtualServer object makes it easy to publish and secure containerized apps running on Amazon EKS while upholding the shared responsibility model, where SecOps provides a comprehensive catalog of security policies and DevOps relies on it to shift security left from day one. This enables organizations to transition to a DevSecOps strategy.

Conclusion

For companies with legacy apps and security solutions built up over years, shifting security left on Amazon EKS is likely a gradual process. But reframing security as code that is managed and maintained by the security team and consumed by DevOps helps deliver services faster and make them production ready.

To secure north‑south traffic in Amazon EKS, you can leverage NGINX Ingress Controller embedded with NGINX App Protect WAF to protect against point attacks at Layer 7 and NGINX App Protect DoS for DoS mitigation at Layers 4 and 7.

To try NGINX Ingress Controller with NGINX App Protect WAF, start a free 30-day trial on the AWS Marketplace or contact us to discuss your use cases.

To discover how you can prevent security breaches and protect your Kubernetes apps at scale using NGINX Ingress Controller and NGINX App Protect WAF and DoS on Amazon EKS, please download our eBook, Add Security to Your Amazon EKS with F5 NGINX.

To learn more about how NGINX App Protect WAF outperforms the native WAFs for AWS, Azure, and Cloudflare, download the High-Performance Web Application Firewall Testing report from GigaOm and register for the webinar on December 6 where GigaOm analyst Jake Dolezal reviews the results.