This post is part of a series:

- Deploying Application Services in Kubernetes, Part 1 explains why duplicating application services paradoxically can improve overall efficiency: because NetOps and DevOps teams have different mandates, it makes sense for them to select and manage the tools that best suit their specific needs.

- Deploying Application Services in Kubernetes, Part 2 (this post) provides guidance on where to deploy application services in a Kubernetes environment, using WAF as an example. Depending on your needs, it can make sense to deploy your WAF at the “front door” of the environment, on the Ingress Controller, per‑service, or per‑pod.

In the previous post in this series, we looked at the rising influence of DevOps in controlling how applications are deployed, managed, and delivered. Although this may appear to invite conflict with NetOps teams, enterprises instead need to recognize that each team has different responsibilities, goals, and modes of operation. Careful choices about where to locate application services such as load balancing and web application firewall (WAF), with duplication in some cases, are the key to specialization and operational efficiency.

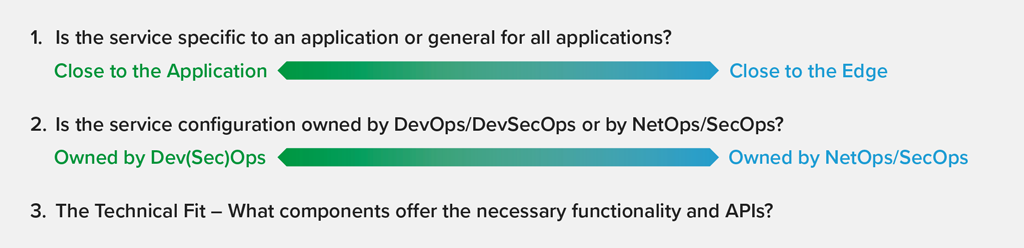

There are two primary criteria to consider when determining where to locate an application service:

- Is the service you wish to deploy (a) specific to an application or line of business, or (b) general, for all applications?

- Is the service configuration owned by (a) DevOps or DevSecOps, or (b) NetOps or SecOps?

When you lean towards (a), it often makes sense to deploy the service close to the applications that require it, and to give control to the DevOps team which is responsible for the operation of those applications.

When you lean more towards (b), then it’s generally best to deploy the service at the front door of the infrastructure, managed by the NetOps team which is responsible for the successful operation of the entire platform.
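The two questions above amount to a simple heuristic. The Python sketch below encodes it so the decision is explicit. The `Scope` and `Owner` enums and the `suggest_placement` helper are hypothetical names for illustration. When the two answers disagree, the sketch deliberately returns no placement, because that case comes down to the technical-fit questions in the next paragraph.

```python
from enum import Enum


class Scope(Enum):
    APP_SPECIFIC = "specific to an application or line of business"  # (a)
    GENERAL = "general, for all applications"                        # (b)


class Owner(Enum):
    DEVOPS = "DevOps or DevSecOps"  # (a)
    NETOPS = "NetOps or SecOps"     # (b)


def suggest_placement(scope: Scope, owner: Owner) -> str:
    """Apply the two placement criteria; mixed answers need a judgment call."""
    if scope is Scope.APP_SPECIFIC and owner is Owner.DEVOPS:
        # Both answers lean towards (a): keep the service near the app.
        return "close to the application, controlled by DevOps"
    if scope is Scope.GENERAL and owner is Owner.NETOPS:
        # Both answers lean towards (b): centralize at the front door.
        return "front door of the infrastructure, managed by NetOps"
    # One (a) and one (b): the criteria disagree, so technical fit decides.
    return "no clear lean: weigh technical fit and tooling"


if __name__ == "__main__":
    print(suggest_placement(Scope.GENERAL, Owner.NETOPS))
```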

In addition, you need to consider the technical fit in case compromises are necessary. Can the service be deployed and operated using the ecosystem tools that either DevOps or NetOps teams are comfortable with? Do the appropriate tools deliver the necessary functionality, configuration interfaces, and monitoring APIs?

Kubernetes Introduces Additional Choices

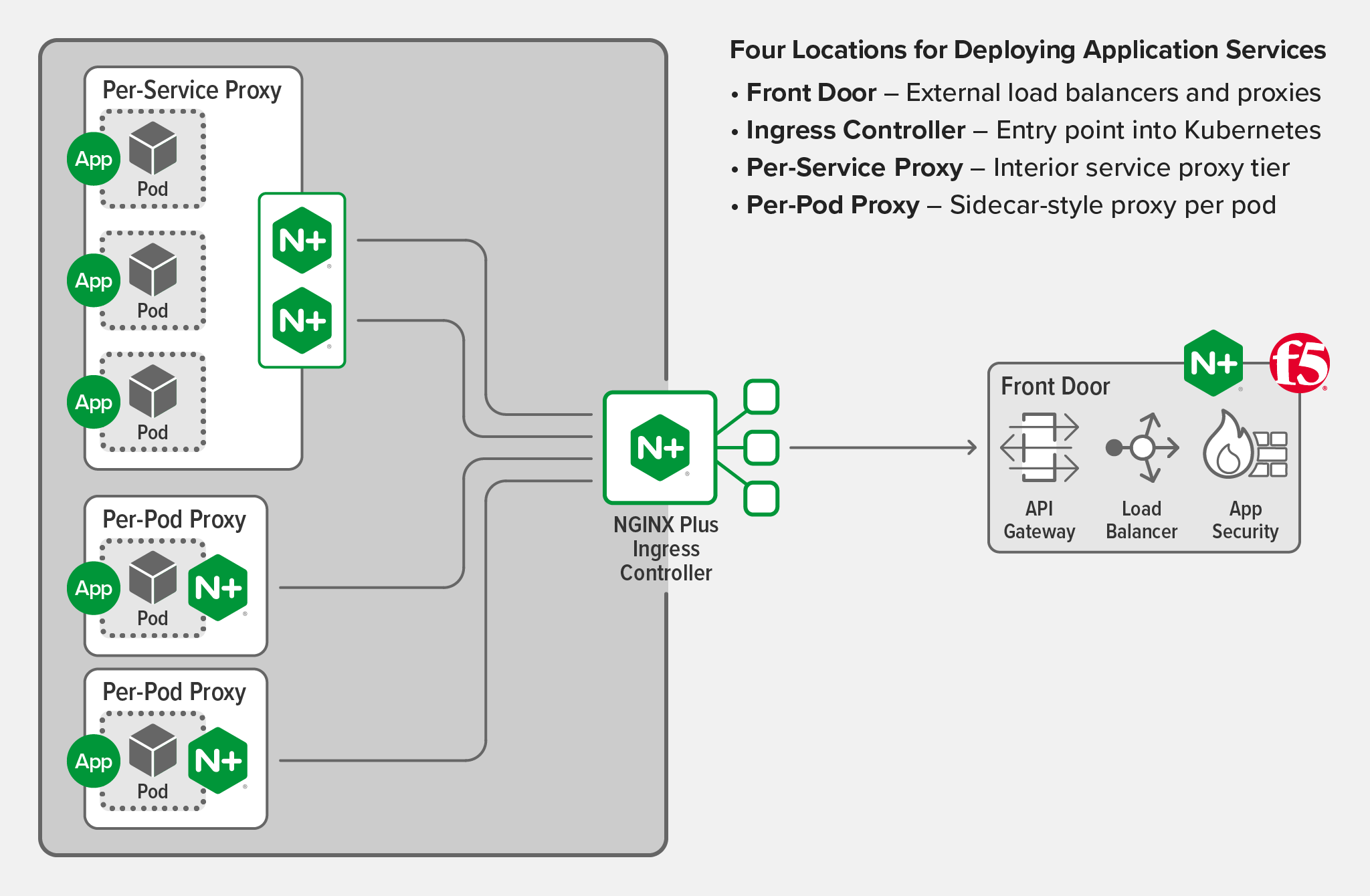

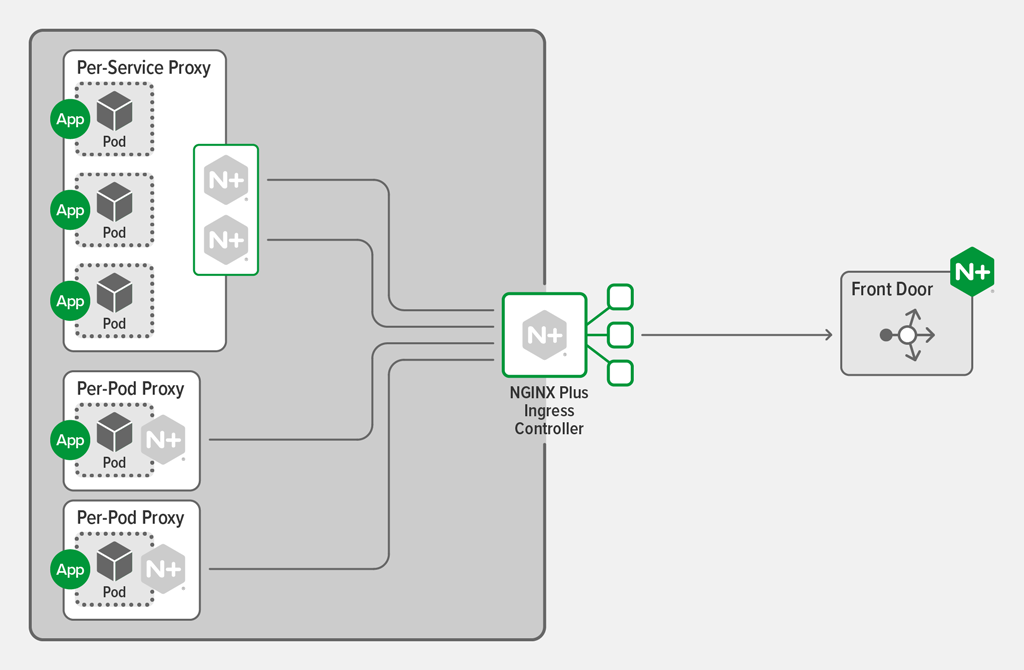

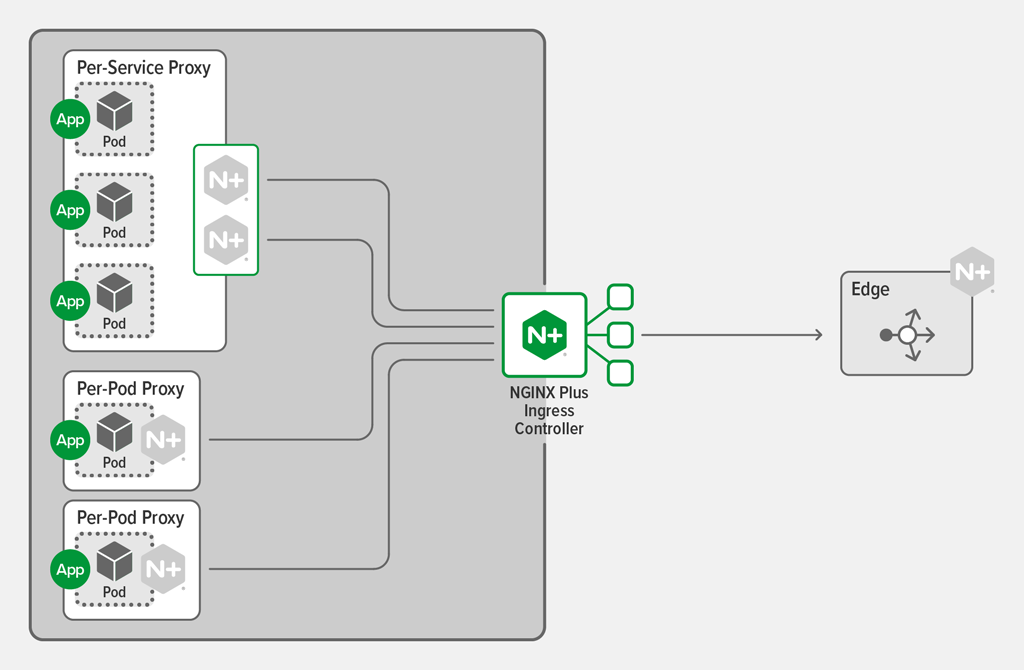

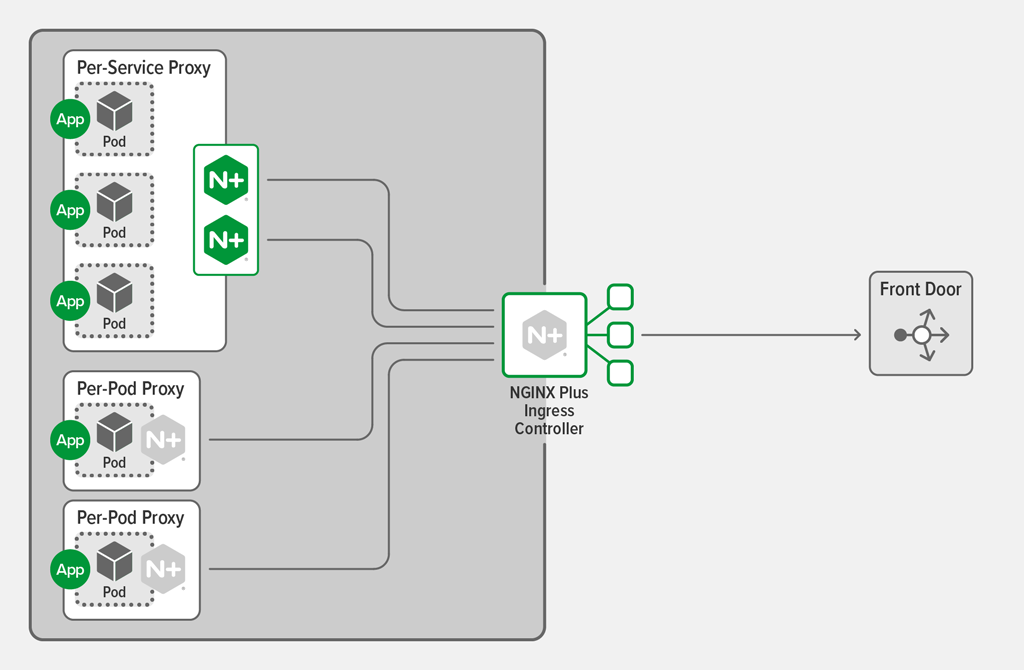

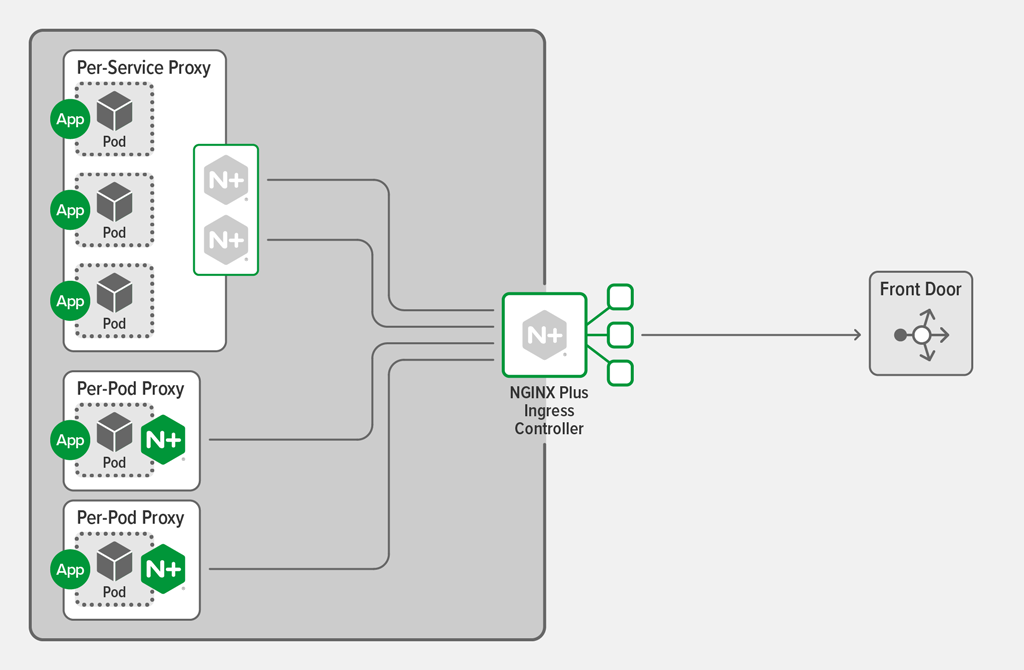

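In a Kubernetes environment, there are several locations where you might deploy application services: at the front door of the infrastructure, on the Ingress controller, as a per‑service proxy, or as a per‑Pod proxy.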

Let’s take web application firewall (WAF) as an example. WAF policies implement advanced security measures to inspect and block undesirable traffic, but these policies often need to be fine‑tuned for specific applications in order to minimize the number of false positives.

Deploying WAF at the Front Door

Consider deploying a WAF device and associated policies at the front door of the infrastructure when the following criteria are true:

- The WAF policy is created and governed by a central SecOps team.

- The WAF device is a dedicated appliance under the control of the NetOps team.

- All (or most) applications must be protected by the same WAF policy, and little application‑specific tuning is required.

- You want to be able to clearly demonstrate that security and governance policies are met, and have a single point of control to lock down any possible breaches or vulnerabilities.

Deploying WAF on the Ingress Controller

Consider deploying the WAF application service on your Ingress controller when the following criteria are true:

- The implementation of the WAF policies is under the direction of the DevOps or DevSecOps team.

- You want to centralize WAF policies at the infrastructure layer, rather than delegating them to individual applications.

- You make extensive use of Kubernetes APIs to manage the deployment and operation of your applications.

This approach still allows for a central SecOps team to define the WAF policies. They can define the policies in a manner that can be easily imported into Kubernetes, and the DevOps team responsible for the Ingress controller can then assign the WAF policies to specific applications.

As of NGINX Plus Ingress Controller release 1.8.0, the NGINX App Protect WAF module can be deployed directly on the Ingress Controller. All WAF configuration is managed using Ingress resources, configured through the Kubernetes API.
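To show how the DevOps team might assign a SecOps-defined policy to one application through the Kubernetes API, here is a minimal Python sketch that builds an Ingress manifest. Several assumptions apply: the annotation keys are those we understand NGINX Ingress Controller uses for App Protect (check them against the documentation for your release), the policy is assumed to already exist in the cluster and is referenced as `namespace/name`, the IngressClass name `nginx` is assumed, and the host, Service, and policy names are hypothetical. The sketch uses the current `networking.k8s.io/v1` Ingress schema; older clusters may need the earlier v1beta1 schema.

```python
import json

# Assumed App Protect annotation keys; verify against your release's docs.
WAF_POLICY_ANNOTATION = "appprotect.f5.com/app-protect-policy"
WAF_ENABLE_ANNOTATION = "appprotect.f5.com/app-protect-enable"


def ingress_with_waf(name: str, host: str, service: str, port: int,
                     policy_ref: str) -> dict:
    """Build an Ingress manifest that attaches an existing WAF policy.

    policy_ref is the "namespace/name" of a policy SecOps has defined.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                WAF_POLICY_ANNOTATION: policy_ref,
                WAF_ENABLE_ANNOTATION: "True",
            },
        },
        "spec": {
            "ingressClassName": "nginx",  # assumed IngressClass name
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service,
                                            "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical names throughout.
    manifest = ingress_with_waf("web", "web.example.com", "web-svc", 80,
                                "secops/default-policy")
    print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f -` accepts JSON as well as YAML, the printed manifest can be piped straight into it. The split of responsibilities stays intact: SecOps owns the policy object, and DevOps only references it.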

Deploying WAF on a Per‑Service Basis

You can also deploy WAF as a proxy tier within Kubernetes, in front of one or more specific services that require WAF protection. This requires a WAF with a software form factor that can be deployed easily and efficiently in a container.

Consider deploying WAF as a per‑service proxy when the following criteria are true:

- The WAF policies are under the direction of a DevSecOps team, and are specific to a small number of services.

- Updates to the policies can be managed either through a suitable container‑aware API or by updating the WAF proxy Deployment.

- The WAF software operates appropriately in a Kubernetes environment.
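One common way to make "updating the WAF proxy Deployment" pick up a policy change is to stamp a checksum of the policy onto the Pod template: any change to the template makes Kubernetes roll out new Pods. The Python sketch below assumes the policy is available as a JSON-serializable dict and uses a hypothetical annotation key, `waf.example.com/policy-checksum`. It is one possible technique, not a product feature.

```python
import copy
import hashlib
import json

CHECKSUM_KEY = "waf.example.com/policy-checksum"  # hypothetical key


def with_policy_checksum(deployment: dict, policy: dict) -> dict:
    """Return a copy of a WAF proxy Deployment whose Pod template records a
    SHA-256 digest of the policy. A changed policy changes the template,
    which triggers a rolling update; an unchanged policy changes nothing."""
    canonical = json.dumps(policy, sort_keys=True).encode("utf-8")
    digest = hashlib.sha256(canonical).hexdigest()
    updated = copy.deepcopy(deployment)  # leave the caller's dict untouched
    template_meta = updated["spec"]["template"].setdefault("metadata", {})
    template_meta.setdefault("annotations", {})[CHECKSUM_KEY] = digest
    return updated
```

Serializing with `sort_keys=True` keeps the digest stable when the same policy is loaded with its keys in a different order, so reapplying an unchanged policy does not restart the proxies.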

For example, NGINX Plus with App Protect can be easily deployed in this manner. You can make the Deployment invisible to both the protected services and the clients that call them by taking these actions:

- Rename the service to be protected from (for example) web-svc to web-svc-internal

- Deploy the WAF proxy in a new Kubernetes service named web-svc

- Configure the WAF proxy to forward requests to web-svc-internal

- Optionally, use Kubernetes network policies to enforce a rule stipulating that only the WAF proxies can communicate with web-svc-internal
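The four steps above can be expressed as three Kubernetes objects. The Python sketch below generates them as manifests. Kubernetes Service names must be lowercase RFC 1035 labels, so the sketch uses web-svc and web-svc-internal and rejects names that would not validate. The label selectors (`app: web`, `app: waf`) and port numbers are hypothetical. Note that a NetworkPolicy selects Pods rather than Services, only admits WAF Pods from the same namespace as written, and has no effect unless the cluster's network plugin enforces it.

```python
import re

# Service names must be RFC 1035 labels: lowercase, at most 63 characters.
DNS_1035_LABEL = re.compile(r"[a-z]([-a-z0-9]*[a-z0-9])?")


def check_name(name: str) -> str:
    if len(name) > 63 or not DNS_1035_LABEL.fullmatch(name):
        raise ValueError(f"invalid Service name: {name!r}")
    return name


def service(name: str, selector: dict, port: int, target_port: int) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": check_name(name)},
        "spec": {
            "selector": selector,
            "ports": [{"protocol": "TCP", "port": port,
                       "targetPort": target_port}],
        },
    }


def per_service_waf(svc: str, app_labels: dict, waf_labels: dict,
                    port: int = 80, app_port: int = 8080,
                    waf_port: int = 8080) -> list:
    internal = f"{svc}-internal"
    return [
        # The original Service under its new name; still selects the app Pods.
        service(internal, app_labels, port, app_port),
        # The original name now selects the WAF proxy Pods instead.
        service(svc, waf_labels, port, waf_port),
        # Optional: only WAF Pods may open connections to the app Pods.
        {
            "apiVersion": "networking.k8s.io/v1",
            "kind": "NetworkPolicy",
            "metadata": {"name": f"{internal}-from-waf-only"},
            "spec": {
                "podSelector": {"matchLabels": app_labels},
                "policyTypes": ["Ingress"],
                "ingress": [{
                    "from": [{"podSelector": {"matchLabels": waf_labels}}],
                    "ports": [{"protocol": "TCP", "port": app_port}],
                }],
            },
        },
    ]
```

With these objects in place, the WAF proxy's upstream would be http://web-svc-internal, resolved through cluster DNS, while clients keep calling web-svc unchanged.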

Deploying WAF on a Per‑Pod Basis

Finally, you may also deploy a WAF in a Pod, acting as an ingress proxy for the application running in the Pod. In this case, the WAF effectively becomes part of the application.

Consider deploying a WAF in this manner when the following criteria are true:

- The WAF policy is specific to the application.

- You want to bind the policy and the application closely together, for example so that the application is deployed with the WAF policy at all points in the development pipeline.

- The application might be frequently redeployed into different clusters, and you don’t want to have to ‘prime’ each cluster with a WAF service and the correct configuration.

This approach is particularly suitable when you have a legacy application that requires a specific security policy and you wish to package that application into a container form factor to make it easier to deploy and scale.
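One possible shape for a per‑Pod WAF is sketched below as a Python-generated Pod manifest. It assumes that the WAF and the application run as two containers declared together in the application's own manifest (packaging both into a single image is another option). The image names, ports, and the `UPSTREAM` environment variable are hypothetical: how a particular WAF image learns its upstream depends on that image.

```python
def pod_with_waf(name: str, app_image: str, waf_image: str,
                 app_port: int = 3000, waf_port: int = 8080) -> dict:
    """A Pod whose only advertised entry point is an in-Pod WAF proxy."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": "waf",
                    "image": waf_image,
                    "ports": [{"containerPort": waf_port}],
                    # Hypothetical setting. Containers in one Pod share a
                    # network namespace, so 127.0.0.1 reaches the app.
                    "env": [{"name": "UPSTREAM",
                             "value": f"http://127.0.0.1:{app_port}"}],
                },
                {
                    # No containerPort is declared here, but that is only
                    # informational: the app should listen on 127.0.0.1
                    # so that clients cannot bypass the WAF.
                    "name": "app",
                    "image": app_image,
                },
            ],
        },
    }
```

Because the WAF definition lives next to the application in the same manifest, it travels with the application into every cluster, which is the property the criteria above call for.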

A Note on Service Meshes

The per‑Pod proxy model is different from the sidecar proxy that has been popularized by service meshes such as Istio, Aspen Mesh, Linkerd, NGINX Mesh, and others:

| | Per-Pod Proxy | Sidecar Proxy |
|---|---|---|
| Injected into Pod | At build time | At deployment (auto‑injection) |
| Traffic Coverage | Ingress only | Ingress and egress |
| Configuration Owner | App developer | DevOps or mesh owner |
| Features | Very capable | Generally lightweight and simple |
| Deployment | Added to the Pods that need it | Deployed everywhere by the mesh infrastructure |

The need to inspect and secure east‑west traffic using a WAF is typically low, and no current service mesh implementations enable you to easily configure a full WAF within selected sidecar proxies. The Ingress controller provides a strong security perimeter and is the most effective place to secure ingress traffic from untrusted, external clients (north‑south traffic).

Summary

With greater choice as to where to deploy an application service such as a WAF, you have more opportunities to maximize the operational efficiency of your Kubernetes platform.

| | At Front Door | Ingress Controller | Per-Service Proxy | Per-Pod Proxy |
|---|---|---|---|---|
| Availability | NGINX App Protect or other WAF solutions | NGINX Plus Ingress Controller 1.8.0 | NGINX App Protect or similar software WAFs | NGINX App Protect or similar software WAFs |
| Audience | SecOps | SecOps/DevSecOps | DevSecOps | App owner |
| Scope | Global | Per service/URI | Per service | Per endpoint |
| Cost/Efficiency | Good/excellent (consolidation with other services, for example ADC) | Excellent (consolidation with Ingress Controller) | Good | Low (dedicated WAF per Pod) |
| Configuration | nginx.conf | Kubernetes API | nginx.conf | nginx.conf |

Try the NGINX Plus Ingress Controller for yourself during a free 30-day trial of NGINX Plus and NGINX App Protect, or contact us to discuss your use cases.