In our previous blog series, Deploying Application Services in Kubernetes, we discussed a pattern that we see at many customers during their digital transformation journeys. Most journeys start with the traditional model for creating and deploying apps (usually monoliths), splitting responsibility for those two functions between siloed Development and Operations teams. As organizations move to a more modern model, they create microservices‑based apps and deploy them using DevOps practices that blur the division between traditional silos.

While DevOps teams and application owners are taking more direct control over how their applications are deployed, managed, and delivered, it’s often not practical to break down the silo walls all at once, nor do we find it necessary. Instead, we observe that a logical division of responsibilities still applies:

- Network and security teams continue to focus on the overall security, performance, and availability of the corporate infrastructure. They care most about global services, which are generally deployed at the “front door” of that infrastructure.

  These teams rely on F5 BIG-IP for use cases like global server load balancing (GSLB), DNS resolution and load balancing, and sophisticated traffic shaping. BIG‑IQ and NGINX Controller [now F5 NGINX Management Suite] provide metrics and alerts in a form that best suits NetOps teams, and they give SecOps teams the visibility into and control over security that they need to protect the organization’s assets and comply with industry regulations.

- Operations teams (DevOps with an emphasis on Ops) create and manage individual applications as required by their associated lines of business. They care most about services such as automation and CI/CD pipelines that help them iterate more quickly on updated features; such services are generally deployed “closer” to the app, for example inside a Kubernetes environment.

  These teams rely on NGINX products like NGINX Plus, NGINX App Protect, NGINX Ingress Controller, and NGINX Service Mesh for load balancing and traffic shaping of distributed, microservices‑based applications hosted in multiple environments, including Kubernetes clusters. Use cases include TLS termination, host‑based routing, URI rewriting, JWT authentication, session persistence, rate limiting, health checking, security (with NGINX App Protect as an integrated WAF), and many more.

NetOps and DevOps in Kubernetes Environments

The differing concerns of NetOps and DevOps teams are reflected in the roles they play in Kubernetes environments and the tools they use to fulfill them. At the risk of oversimplification, we can say that NetOps teams are primarily concerned with infrastructure and networking functionality outside the Kubernetes cluster, and DevOps teams with that functionality inside the cluster.

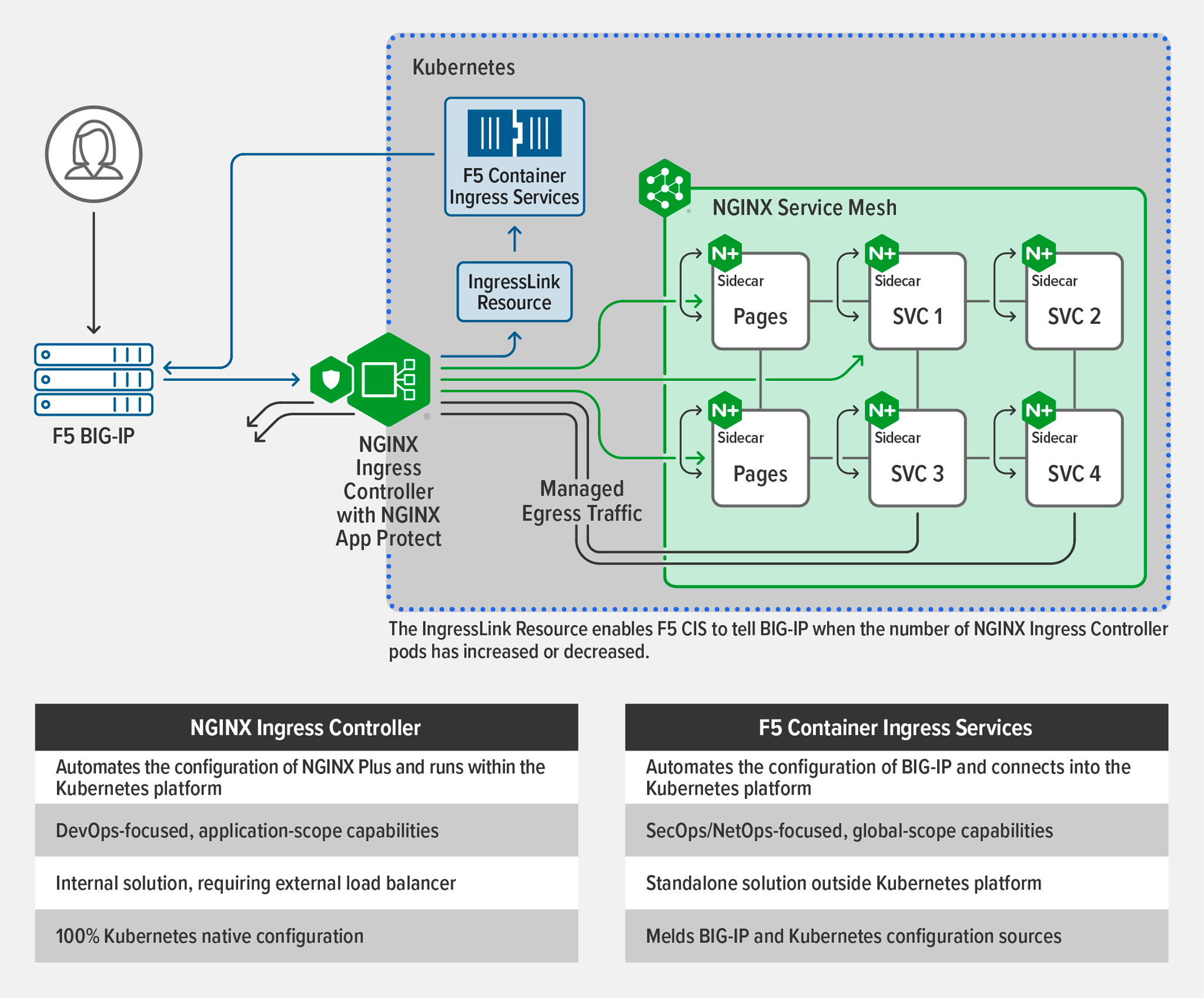

To direct traffic into Kubernetes clusters, NetOps teams can use BIG‑IP as an external load balancer that supports the capabilities and the range of overlay networking technologies they are already familiar with. On its own, however, BIG‑IP has no way to track changes to the set of pods inside the Kubernetes cluster (for example, changes to the number of pods or their IP addresses). To get around this constraint, F5 Container Ingress Services (CIS) is deployed as an operator inside the cluster. CIS watches for changes to the set of pods and automatically modifies the addresses in the BIG‑IP system’s load‑balancing pool accordingly. It also watches for the creation of new Ingress, Route, and custom resources and updates the BIG‑IP configuration to match.
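The core of what CIS does here is a reconciliation step: compare the pod addresses currently observed in the cluster with the members of the BIG‑IP pool, then add and remove members until the two agree. The Python sketch below illustrates only that diffing logic under simplifying assumptions. It is not CIS source code, the names `PoolDiff` and `reconcile_pool` are hypothetical, and the parts that would watch the Kubernetes API and push changes to BIG‑IP are deliberately omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PoolDiff:
    """Changes needed to make a load-balancing pool match the observed pods (hypothetical type)."""
    to_add: frozenset[str]
    to_remove: frozenset[str]


def reconcile_pool(observed_pods: set[str], pool_members: set[str]) -> PoolDiff:
    """Hypothetical reconcile step: diff observed pod endpoints against pool members.

    observed_pods: "ip:port" endpoints currently backing the service in the cluster
    pool_members:  "ip:port" members currently configured in the external pool
    """
    return PoolDiff(
        # Pods that exist in the cluster but are not yet in the pool.
        to_add=frozenset(observed_pods - pool_members),
        # Pool members whose pods were deleted or rescheduled to a new IP.
        to_remove=frozenset(pool_members - observed_pods),
    )


# Example: the app scaled from two pods to three, and one pod moved to a new IP.
diff = reconcile_pool(
    observed_pods={"10.1.0.5:8080", "10.1.0.9:8080", "10.1.0.12:8080"},
    pool_members={"10.1.0.5:8080", "10.1.0.7:8080"},
)
print(sorted(diff.to_add))     # ['10.1.0.12:8080', '10.1.0.9:8080']
print(sorted(diff.to_remove))  # ['10.1.0.7:8080']
```

In this sketch, applying a diff rather than rewriting the whole pool on every change means that members which did not change are simply left alone.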

Although you can use the combination of BIG‑IP and CIS to load balance traffic directly to application pods, in practice NGINX Ingress Controller is the ideal solution for discovering and keeping up with dynamic changes to the pods and workloads behind large numbers of services, for example, during horizontal scaling of your application pods to meet changing levels of demand.

Another advantage of deploying NGINX Ingress Controller is that it shifts control of application load balancing to the DevOps teams that are in charge of the applications themselves. Its high‑performance control plane and DevOps‑centric design make it particularly strong at supporting fast‑changing DevOps use cases, such as in‑place reconfigurations and rolling updates, across Kubernetes services in multiple namespaces. NGINX Ingress Controller uses the native Kubernetes API to discover pods as they are scaled.

How BIG-IP and NGINX Ingress Controller Work Together

As you may know, BIG‑IP and NGINX Ingress Controller were originally designed by two separate companies (F5 and NGINX, respectively). Since F5 acquired NGINX, customers have told us that improving interoperability between the two tools would simplify management of their infrastructure. In response, we have developed a new Kubernetes resource we call IngressLink.

The IngressLink resource is a simple enhancement that uses a Kubernetes CustomResourceDefinition (CRD) to “link” NGINX Ingress Controller and BIG‑IP. Simply put, it enables CIS to “tell” BIG‑IP whenever the set of NGINX Ingress Controller pods has changed. With this information, BIG‑IP can quickly adjust its corresponding load‑balancing policies to match.
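Conceptually, keeping BIG‑IP in sync with NGINX Ingress Controller starts with identifying which pods are NGINX Ingress Controller instances, which in Kubernetes is typically done with a label selector. The minimal Python sketch below assumes a hypothetical `Pod` record and equality-based selector matching (every key/value pair in the selector must appear on the pod, as with Kubernetes `matchLabels`). The `app: nginx-ingress` label and port 443 are illustrative assumptions. It shows only the selection step, not how CIS, using the IngressLink resource, actually communicates with BIG‑IP.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Pod:
    """Minimal, hypothetical stand-in for a Kubernetes pod object."""
    name: str
    ip: Optional[str]  # None until the pod has been assigned an IP
    phase: str         # e.g. "Pending", "Running"
    labels: dict[str, str] = field(default_factory=dict)


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based matching: every selector key must be present with the same value."""
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_endpoints(pods: list[Pod], selector: dict[str, str], port: int) -> list[str]:
    """Return sorted "ip:port" members for running, addressed pods that match the selector."""
    return sorted(
        f"{pod.ip}:{port}"
        for pod in pods
        if pod.phase == "Running" and pod.ip and matches(selector, pod.labels)
    )


# Example: only the running NGINX Ingress Controller pod becomes a pool member.
pods = [
    Pod("nginx-ingress-7d9f", "10.2.0.4", "Running", {"app": "nginx-ingress"}),
    Pod("nginx-ingress-b81c", None, "Pending", {"app": "nginx-ingress"}),
    Pod("web-5c6a", "10.2.0.8", "Running", {"app": "web"}),
]
print(ingress_endpoints(pods, {"app": "nginx-ingress"}, 443))  # ['10.2.0.4:443']
```

Feeding the output of a step like this into a reconciliation step is one way to picture how the pool in front of NGINX Ingress Controller could follow its instances as they scale.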

With BIG‑IP deployed for load balancing to the Kubernetes clusters and NGINX Ingress Controller handling ingress‑egress traffic, the traffic flow looks like this:

- BIG‑IP directs external traffic to the NGINX Ingress Controller instances.

- NGINX Ingress Controller distributes traffic to the appropriate services within the cluster.

- Because NGINX Ingress Controller is exceptionally efficient, a stable set of instances can handle frequent and large changes to the set of application pods. But when your NGINX Ingress Controller needs to horizontally scale out or in (to handle traffic surges and declines), CIS informs BIG‑IP about the changes (via the IngressLink resource), enabling BIG‑IP to adapt quickly to the changes.
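The two-tier flow described in these steps can be modeled in a few lines. The following Python sketch is purely illustrative: round-robin stands in for whatever load-balancing method is actually configured on BIG‑IP and NGINX Ingress Controller, the names `RoundRobin` and `handle_request` are hypothetical, and `edge.update()` merely represents the pool change that CIS pushes to BIG‑IP via IngressLink. It is not a real BIG‑IP or CIS API.

```python
from itertools import count


class RoundRobin:
    """Simple round-robin selector over a member list that can be replaced at any time."""

    def __init__(self, members: list[str]) -> None:
        self.members = list(members)
        self._next = count()

    def pick(self) -> str:
        if not self.members:
            raise LookupError("no members available")
        return self.members[next(self._next) % len(self.members)]

    def update(self, members: list[str]) -> None:
        # Replace the member list (e.g. after scaling); the rotation counter carries on.
        self.members = list(members)


def handle_request(host: str, edge: RoundRobin, routes: dict[str, RoundRobin]) -> tuple[str, str]:
    """Return (ingress instance, backend pod) chosen for a request with the given Host header."""
    ingress = edge.pick()            # Tier 1: BIG-IP picks an NGINX Ingress Controller instance.
    backend_pool = routes.get(host)  # Tier 2: NGINX Ingress Controller routes by host.
    if backend_pool is None:
        raise LookupError(f"no route for host {host!r}")
    return ingress, backend_pool.pick()


edge = RoundRobin(["nic-a", "nic-b"])
routes = {"shop.example.com": RoundRobin(["cart-1", "cart-2", "cart-3"])}
print(handle_request("shop.example.com", edge, routes))  # ('nic-a', 'cart-1')
print(handle_request("shop.example.com", edge, routes))  # ('nic-b', 'cart-2')
edge.update(["nic-a", "nic-b", "nic-c"])  # Ingress tier scaled out; BIG-IP pool updated.
print(handle_request("shop.example.com", edge, routes))  # ('nic-c', 'cart-3')
```

Note that in this model, scaling the application pods behind `cart-*` only changes the second tier, while the first tier changes only when the set of NGINX Ingress Controller instances changes.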

Getting Started

For more details on how the IngressLink resource works, including a deployment guide, visit F5 CloudDocs.

Get started by requesting your free 30-day trial of NGINX Ingress Controller with NGINX App Protect WAF and DoS. If you aren’t yet a BIG‑IP user, select the trial at F5.com that works best for your team.