The key‑value store feature was introduced in NGINX Plus Release 13 for HTTP and extended to TCP and UDP in Release 14. With key‑value stores, you can customize how NGINX Plus handles traffic, based on all sorts of criteria, without needing to reload the configuration or restart NGINX Plus.

Editor – In NGINX Plus R16 and later, key‑value stores can be synchronized across all NGINX Plus instances in a cluster. (State sharing in a cluster is available for other NGINX Plus features as well.) For details, see our blog and the NGINX Plus Admin Guide.

In previous blog posts, we’ve described how to use key‑value stores for denylisting IP addresses and A/B testing. In this blog post, I describe how to use key‑value stores to impose different bandwidth limits for different categories of users, in support of application performance goals. This practice is one way to implement what is often referred to as class of service.

Throttling Bandwidth

There are many reasons to throttle bandwidth in web applications. On an ecommerce site, for example, you can enhance the user experience for users who are on track to make a purchase, by throttling bandwidth for more casual users. This also protects all legitimate users from malicious users who are just trying to hurt site performance.

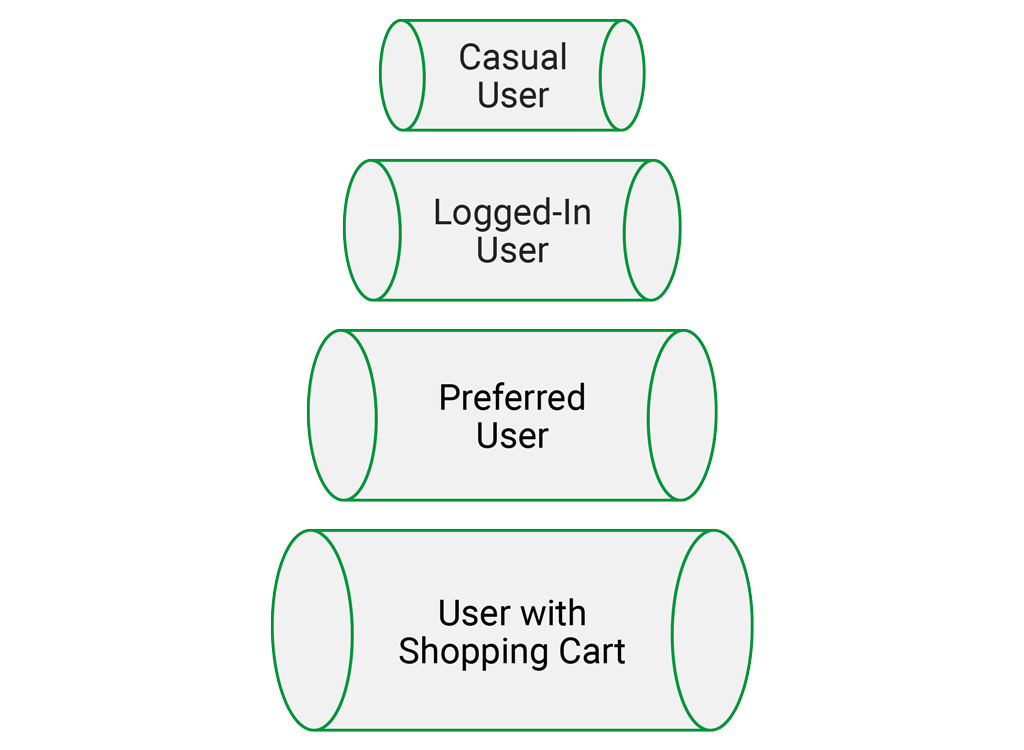

In our example, we set the lowest bandwidth limit for users who are not logged in, a higher limit for users who are logged in, a higher limit yet for preferred users, and the highest limit of all for users who are logged in and have items in their cart.

The tool you create to adjust bandwidth by user category is called a dynamic bandwidth limiter. You use two main features of NGINX Plus to make it work:

- The first is a core feature of NGINX and NGINX Plus: the ability to limit the rate of responses. Use the limit_rate directive or $limit_rate variable to specify the maximum throughput. The advantage of the variable is that you can set different rates for different conditions, as we’re doing in our example.
- The second feature is newer: the key‑value store in NGINX Plus. The NGINX Plus key‑value store can contain arbitrary JSON‑formatted keys and values; it’s stored in memory for fast access.

Configuring the Dynamic Bandwidth Limiter

To create a dynamic bandwidth limiter, we set a default limit in the NGINX Plus configuration with a value appropriate for the most casual users (the ones not logged in). Bandwidth limits are more generous for progressively more valuable users, with an external application doing the assignment of customers to a class of service in the key‑value store.

In the following example, the keyval_zone directive allocates 1 MB of shared memory for a key‑value store named ratezone. In the keyval directive, the first parameter defines the customer ID recorded in a cookie as the key for lookups in the key‑value store, and the second parameter specifies that the $bwlimit variable is set to the value associated with the key (the class of service for that customer).

The $cookie_CUSTOMERID variable represents a cookie named CUSTOMERID, a simple mechanism for uniquely identifying a user, which an application usually sets when the user logs in. It is most often used to provide session persistence by tracking the user as they navigate through a site.

The key‑value store depends on the NGINX Plus API, which is enabled by including the api directive in the /api location with the write=on parameter for read‑write access. (The other location directive enables the built‑in NGINX Plus live activity monitoring dashboard, which also uses the NGINX Plus API.)

Note: This snippet does not restrict access to the API, but we strongly recommend that you do so, especially in a production environment. For more information, see the NGINX Plus Admin Guide.

```nginx
keyval_zone zone=ratezone:1M;
keyval $cookie_CUSTOMERID $bwlimit zone=ratezone;

server {
    listen 8080;

    location = /dashboard.html {
        root /usr/share/nginx/html;
    }

    location /api {
        api write=on;
    }

    #...
}
```

We then use a map block to define the bandwidth limit for each class of service. As set by the keyval directive, the $bwlimit variable captures the customer’s class of service as set in the key‑value store by the external application: bronze for logged‑in users, silver for preferred users, and gold for users with items in their shopping carts.
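The assignment logic lives in the external application, which this post does not show. As a rough illustration only, the following Python sketch picks a class of service from a few session facts. The function name, its parameters, and the rule that items in the cart take precedence over preferred status are assumptions made for this example, not part of the original setup.

```python
from typing import Optional


def class_of_service(logged_in: bool, preferred: bool, cart_items: int) -> Optional[str]:
    """Return the class of service to store for a customer.

    Returns None when the customer should have no entry in the
    key-value store, so that the default limit applies.
    """
    if not logged_in:
        # Users who are not logged in get no entry at all.
        return None
    if cart_items > 0:
        # Assumption: items in the cart outrank preferred status.
        return "gold"
    if preferred:
        return "silver"
    return "bronze"
```

A value of None means the application writes nothing for that customer, which leaves the lookup in NGINX Plus empty.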

The map block assigns users who are not logged in or don’t have an entry in the key‑value store the default bandwidth limit of 256 Kbps. The limits for the classes of service are 512 Kbps for bronze, 1 Mbps for silver, and unlimited bandwidth for gold (indicated by 0). NGINX interprets these values in bytes per second, so the figures denote kilobytes and megabytes per second, the same unit curl reports in the tests below. (You may want to set a limit for shopping cart users as well, depending on your experience with the performance of your app.) The $ratelimit variable captures the bandwidth limit.
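To make the lookup concrete, here is a small Python model of what the map block computes. It is a sketch for reasoning about the values, not something NGINX Plus runs. The names RATE_BY_CLASS, DEFAULT_RATE, rate_for, and to_bytes_per_second are invented for this example. It relies on two documented NGINX behaviors: string keys in a map are matched case‑insensitively, and the k and m size suffixes are multiples of 1024.

```python
# Mirrors the map block: class of service -> value assigned to $ratelimit.
RATE_BY_CLASS = {"bronze": "512k", "silver": "1m", "gold": "0"}
DEFAULT_RATE = "256k"

# NGINX size suffixes: k = 1024 bytes, m = 1024 * 1024 bytes.
_SUFFIXES = {"k": 1024, "m": 1024 * 1024}


def rate_for(bwlimit: str) -> str:
    """Return the rate string for a class of service.

    An empty or unknown value falls through to the default, as it does
    in the map block. Keys are lowercased because map matches strings
    without regard to case.
    """
    return RATE_BY_CLASS.get(bwlimit.lower(), DEFAULT_RATE)


def to_bytes_per_second(rate: str) -> int:
    """Convert a rate such as '512k' to bytes per second (0 = unlimited)."""
    suffix = rate[-1].lower()
    if suffix in _SUFFIXES:
        return int(rate[:-1]) * _SUFFIXES[suffix]
    return int(rate)
```

For example, to_bytes_per_second(rate_for("silver")) evaluates to 1048576, while a customer with no entry (an empty string) resolves to the 256k default.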

The set directive in the server block implements the bandwidth limit by setting the built‑in $limit_rate variable to the value of $ratelimit as set in the map block.

```nginx
map $bwlimit $ratelimit {
    bronze  512k;
    silver  1m;
    gold    0;
    default 256k;
}

server {
    #...
    set $limit_rate $ratelimit;
    #...
}
```

Updating the Key-Value Store with the NGINX Plus API

The HTTP GET, POST, and PATCH methods in the NGINX Plus API are used to access and manage the key‑value store. As mentioned previously, in our example an external application manages the key‑value store. For the purpose of illustration, we’ll use curl commands here to show the possible operations.
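An external application would issue the same requests programmatically. The following Python sketch, using only the standard library, shows one way that might look. The base URL matches the address used in the curl commands in this post. The helper names keyval_request and send, and the choice to send a JSON Content-Type header, are assumptions made for this example.

```python
import json
import urllib.request
from typing import Optional

# Same API address and version as the curl commands in this post.
API_BASE = "http://localhost:8080/api/1/http/keyvals"


def keyval_request(method: str, zone: str,
                   entries: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a request against a key-value zone.

    method  -- "GET", "POST", or "PATCH"
    zone    -- name of the keyval zone, for example "ratezone"
    entries -- key-value pairs to send as the JSON body, if any
    """
    data = json.dumps(entries).encode("utf-8") if entries is not None else None
    headers = {"Content-Type": "application/json"} if data is not None else {}
    return urllib.request.Request(
        f"{API_BASE}/{zone}", data=data, headers=headers, method=method
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")
```

With NGINX Plus running as configured above, send(keyval_request("POST", "ratezone", {"CPE1704TKS": "bronze"})) would correspond to the POST command shown in the walkthrough that follows.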

First we use the GET method to verify that the ratezone key‑value store is empty. The second curl command requests the file brownie.jpg, using the -b option to pass in the customer ID as the value of the HTTP Cookie header. The -o option writes the file to /dev/null (effectively discarding it) because the point here is to see the download rate, not to actually look at brownie.jpg.

Because the key‑value store is empty, there’s no key for the customer, so we expect the customer to get the default rate limit of 256 Kbps. This is confirmed by the 276 Kbps rate reported in the Current Speed column.

```
root# curl -X GET localhost:8080/api/1/http/keyvals/ratezone
{}
root# curl -b "CUSTOMERID=CPE1704TKS" -o /dev/null localhost/brownie.jpg
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 1893k  100 1893k    0     0   269k      0  0:00:07  0:00:07 --:--:--  276k
```

Now we use the HTTP POST method to create an entry in the key‑value store, one that assigns customer CPE1704TKS to the bronze class of service. The second command confirms the new entry exists, and the final one shows that the download speed has increased to 626 Kbps, reflecting the higher limit for bronze.

```
root# curl -X POST -d '{"CPE1704TKS":"bronze"}' localhost:8080/api/1/http/keyvals/ratezone
root# curl -X GET localhost:8080/api/1/http/keyvals/ratezone
{"CPE1704TKS":"bronze"}
root# curl -b "CUSTOMERID=CPE1704TKS" -o /dev/null localhost/brownie.jpg
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 1893k  100 1893k    0     0   626k      0  0:00:03  0:00:03 --:--:--  626k
```

Then we use the PATCH method to promote the customer to the silver class of service, and confirm the download rate has increased again.

```
root# curl -X PATCH -d '{"CPE1704TKS":"silver"}' localhost:8080/api/1/http/keyvals/ratezone
root# curl -X GET localhost:8080/api/1/http/keyvals/ratezone
{"CPE1704TKS":"silver"}
root# curl -b "CUSTOMERID=CPE1704TKS" -o /dev/null localhost/brownie.jpg
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 1893k  100 1893k    0     0  1876k      0  0:00:01  0:00:01 --:--:-- 1877k
```

Lastly, we use the PATCH method again to promote the customer to the gold class of service, and confirm that the download rate increases dramatically when there is no imposed bandwidth limit.

```
root# curl -X PATCH -d '{"CPE1704TKS":"gold"}' localhost:8080/api/1/http/keyvals/ratezone
root# curl -X GET localhost:8080/api/1/http/keyvals/ratezone
{"CPE1704TKS":"gold"}
root# curl -b "CUSTOMERID=CPE1704TKS" -o /dev/null localhost/brownie.jpg
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 1893k  100 1893k    0     0   192M      0 --:--:-- --:--:-- --:--:--  205M
```

Using the Key-Value Store for Related Use Cases

This example shows how to use a customer ID cookie ($cookie_CUSTOMERID) to identify each user and assign a class-of-service category. However, the same logic can be applied to nearly any value captured in an NGINX variable ($remote_addr, $jwt_claim_sub, and so on). Some variables make more sense than others, depending on the application or use case. You can also use the key‑value store to limit the connection rate, which is useful for keeping casual or malicious users from degrading the experience of other users, especially active purchasers, and the performance of the application in general.

Complete Sample NGINX Configuration

```nginx
keyval_zone zone=ratezone:1M;
keyval $cookie_CUSTOMERID $bwlimit zone=ratezone;

map $bwlimit $ratelimit {
    bronze  512k;
    silver  1m;
    gold    0;
    default 256k;
}

upstream backend1 {
    zone backend1 64k;
    server 192.168.1.100:443;
}

server {
    listen 8080;

    location = /dashboard.html {
        root /usr/share/nginx/html;
    }

    location /api {
        api write=on;
    }

    location / {
        return 301 /dashboard.html;
    }
}

server {
    listen 80;
    set $limit_rate $ratelimit;

    location /favicon.ico {
        alias /usr/share/nginx/html/nginx-favicon.png;
    }

    location = /brownie.jpg {
        root /usr/share/nginx/html;
    }

    location / {
        proxy_set_header Host $host;
        proxy_pass https://backend1;
    }
}
```

To try out the NGINX Plus key‑value store for yourself, start your free 30-day trial today or contact us to discuss your use case.