The way enterprises architect applications has changed. According to our recent user survey, 58% of applications in an enterprise portfolio are monoliths, where all of the application logic is packaged and deployed as a single unit. That percentage is down from 65% just a year ago, underlining how quickly enterprises are pivoting toward more modern application architectures. In the same survey, we found the remaining 42% of applications are either entirely or partially composed of microservices (where the functional components of the application are refactored into discrete, packaged services). Moreover, 42% of the organizations we surveyed already use microservices in production and 40% say microservices are highly important to their business strategy.

It’s clear that microservices – originally the domain of cloud‑native companies like Google, Netflix, and Facebook – are now a mainstay in enterprise IT architectures. You can read more about this in our seminal blog series on microservices. It details the journey from monoliths to microservices, which profoundly impacts application infrastructure in three ways:

- People: Control shifts from infrastructure teams to application teams. AWS showed the industry that if you make infrastructure easy to manage, developers will provision it themselves. Responsibility for infrastructure then shifts away from dedicated infrastructure and network roles.

- Process: DevOps speeds provisioning time. DevOps applies agile methodologies to app deployment and maintenance. Modern app infrastructure must be automated and provisioned orders of magnitude faster, or you risk delaying the deployment of crucial fixes and enhancements.

- Technology: Infrastructure decouples software from hardware. Software‑defined infrastructure, Infrastructure as Code, and composable infrastructure all describe the trend of value shifting from proprietary hardware appliances to programmable software on commodity hardware or public cloud computing resources.

These trends impact all aspects of application development and delivery, but in particular they change the way load balancers – sometimes referred to as application delivery controllers, or ADCs – are deployed. Load balancers are the intelligent control point that sits in front of all apps and, increasingly, all microservices.

Bridging the Divide Between Dev and Ops

Now that NGINX is part of F5, customers often ask if they should deploy F5 BIG-IPs or NGINX Plus and NGINX Controller as their load balancing infrastructure. To answer that question, we need to look at how load balancers, or ADCs, have evolved.

Historically, a load balancer was deployed as hardware at the edge of the data center. The appliance improved the security, performance, and resilience of all the apps that sat behind it. However, the shift to microservices and the resulting changes to the people, process, and technology of application infrastructure require frequent changes to apps. These app changes in turn require changes to load balancer policies.

The F5 BIG-IP appliance sitting at the frontend of your environment does the heavy lifting, providing advanced application services – like local traffic management, global traffic management, DNS management, bot protection, DDoS mitigation, SSL offload, and identity and access management – for hundreds or even thousands of applications. Forcing constant policy changes on this environment can destabilize your application delivery and security, introducing risk and requiring your NetOps teams to spend time testing new policies rather than doing other, value‑added work. This holds for every form factor of F5 BIG-IP, which has evolved to support hardware, software, and cloud options. All share the same architecture: a robust, fully integrated solution that provides a rich set of application services.

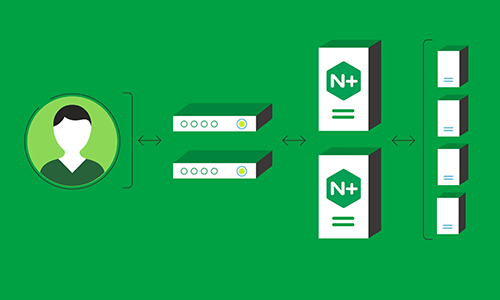

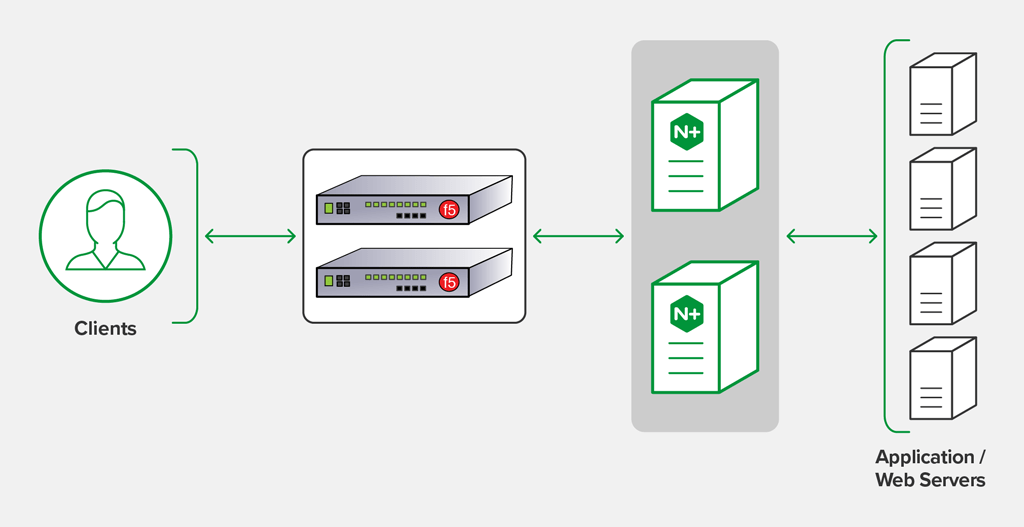

A different approach is needed in the world of modern, quickly changing apps and microservices. Here, we recommend a two‑pronged approach. First, retain your F5 BIG-IP infrastructure at the frontend to provide those advanced application services to the large number of mission‑critical apps it is charged with protecting and scaling. Second, augment that solution by placing a lightweight software load balancer from NGINX – with NGINX Controller managing instances of NGINX Plus load balancers – directly in front of your modern application environments.

NGINX is decoupled from the hardware and operating system, enabling it to be part of your application stack. This empowers your DevOps and application teams to manage the software load balancer directly and to configure any associated application services, often automating both as part of a CI/CD framework.
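As a rough illustration of that kind of automation, the sketch below renders a minimal NGINX load balancing configuration from a list of backend addresses, the sort of step a CI/CD job might run before reloading NGINX. The helper name `render_upstream_config`, the upstream name `app_backend`, and the addresses are hypothetical and not taken from any NGINX tooling; only the `upstream`, `server`, `listen`, `location`, and `proxy_pass` directives are standard NGINX.

```python
from typing import Iterable


def render_upstream_config(name: str, backends: Iterable[str], listen_port: int = 80) -> str:
    """Render an NGINX snippet that load balances HTTP traffic across backends.

    Hypothetical helper for illustration only. The returned text is meant to
    be included inside the http {} context of an NGINX configuration.
    """
    backends = list(backends)
    if not backends:
        raise ValueError("at least one backend address is required")

    lines = [f"upstream {name} {{"]
    # One `server` directive per backend; NGINX defaults to round robin.
    lines += [f"    server {address};" for address in backends]
    lines += [
        "}",
        "",
        "server {",
        f"    listen {listen_port};",
        "    location / {",
        # Forward every request to the upstream group defined above.
        f"        proxy_pass http://{name};",
        "    }",
        "}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Example addresses are placeholders, not real hosts.
    print(render_upstream_config("app_backend", ["10.0.0.11:8080", "10.0.0.12:8080"]))
```

Generating the file from data keeps the backend list in version control, so an application team can review a change to it like any other code change before the pipeline applies it.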

The result? You achieve the agility and time-to-market benefits that your app teams need, without sacrificing the reliability and security controls your network teams require.

NGINX software load balancers are the key to bridging the divide between your dev (AppDev, DevOps) and ops (NetOps, SecOps) teams. Most customers are finding they need to migrate some or all of their F5 hardware to NGINX software. But how do you choose the right migration strategy?

The logical first step is to augment your existing F5 BIG-IP with NGINX Plus and NGINX Controller. There are three common deployment models to consider:

- Deploy NGINX behind the F5 appliance to act as a DevOps‑friendly abstraction layer

- Provision an NGINX instance for each of your apps, or even for each of your customers

- Run NGINX as your multi‑cloud application load balancer for cloud‑native apps

Because NGINX is lightweight and programmable, it consumes very few compute resources and imposes little to no additional strain on your infrastructure.

This approach lets you assess how quickly you need to build out software load balancing based on your application strategy. The speed of your migration to a software‑based infrastructure depends on how aggressively you’re moving to modern application architectures that incorporate microservices and cloud‑native services. To support that work, we’ve curated a list of resources for researching, evaluating, and implementing NGINX.

Resources to Help You Migrate from F5 Hardware to NGINX Software

Stage 1: Researching NGINX as a Complementary Solution to F5

The first stage in the process is to understand the benefits of deploying NGINX as an additional load balancer. If you’re just getting started, we recommend you check out our:

Stage 2: Evaluating the Business Case and Migration Options for NGINX

Now that you understand the basics, it’s time to build the business case for NGINX. Understand the various ways you can deploy NGINX to augment your F5 solutions. Learn from customers and NGINX experts with our:

Stage 3: Implementing NGINX and Migrating F5 BIG-IP iRules

After making the investment decision, it’s time to roll up your sleeves and deploy NGINX. Regardless of whether you are migrating all or some of your hardware to NGINX software, you’ll need to learn how to translate F5 BIG-IP iRules into NGINX configuration directives, to ensure continuity of application services spanning from the frontend BIG‑IP to the backend NGINX Plus instances. You’ll also want to learn the basics of using NGINX Plus and NGINX Controller.
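To give a feel for what such a translation can look like, here is a minimal sketch that pairs one very common iRule, an HTTP-to-HTTPS redirect, with an NGINX configuration that behaves similarly. The function name `nginx_https_redirect` and the constant `IRULE_HTTP_TO_HTTPS` are hypothetical, and the pairing is an illustrative assumption rather than an official mapping. It also assumes the iRule's `HTTP::redirect` issues a temporary (302) redirect, which is why the NGINX side uses `return 302`.

```python
# An iRule commonly used on BIG-IP to send HTTP clients to HTTPS.
# Kept here as a string purely for side-by-side comparison.
IRULE_HTTP_TO_HTTPS = """\
when HTTP_REQUEST {
    HTTP::redirect "https://[HTTP::host][HTTP::uri]"
}
"""


def nginx_https_redirect(listen_port: int = 80) -> str:
    """Return an NGINX server block approximating the iRule above.

    Hypothetical helper for illustration. `$host` and `$request_uri` are
    built-in NGINX variables; 302 is chosen on the assumption that it
    matches the temporary redirect issued by the iRule.
    """
    return (
        "server {\n"
        f"    listen {listen_port};\n"
        "    return 302 https://$host$request_uri;\n"
        "}\n"
    )


if __name__ == "__main__":
    print(IRULE_HTTP_TO_HTTPS)
    print(nginx_https_redirect())
```

The event-driven iRule becomes a declarative directive in NGINX: there is no handler to write, only a `return` inside the `server` block that receives plain HTTP traffic.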

Get Started with a Free Trial of NGINX Software

Getting started with NGINX is easy. We offer an automated trial experience.

Request a free trial of NGINX Controller (which also includes NGINX Plus) to experience the power of NGINX with additional monitoring, management, and analytics capabilities. This is the best option for infrastructure and network teams that do not manage network infrastructure via APIs, or that want to evaluate the NGINX Application Platform as a software ADC that more closely resembles an F5 BIG-IP.

We’d love to talk with you about how NGINX can help with your use case.