Editor – This post is part of a 10-part series:

- Reduce Complexity with Production-Grade Kubernetes

- How to Improve Resilience in Kubernetes with Advanced Traffic Management (this post)

- How to Improve Visibility in Kubernetes

- Six Ways to Secure Kubernetes Using Traffic Management Tools

- A Guide to Choosing an Ingress Controller, Part 1: Identify Your Requirements

- A Guide to Choosing an Ingress Controller, Part 2: Risks and Future-Proofing

- A Guide to Choosing an Ingress Controller, Part 3: Open Source vs. Default vs. Commercial

- A Guide to Choosing an Ingress Controller, Part 4: NGINX Ingress Controller Options

- How to Choose a Service Mesh

- Performance Testing NGINX Ingress Controllers in a Dynamic Kubernetes Cloud Environment

You can also download the complete set of blogs as a free eBook – Taking Kubernetes from Test to Production.

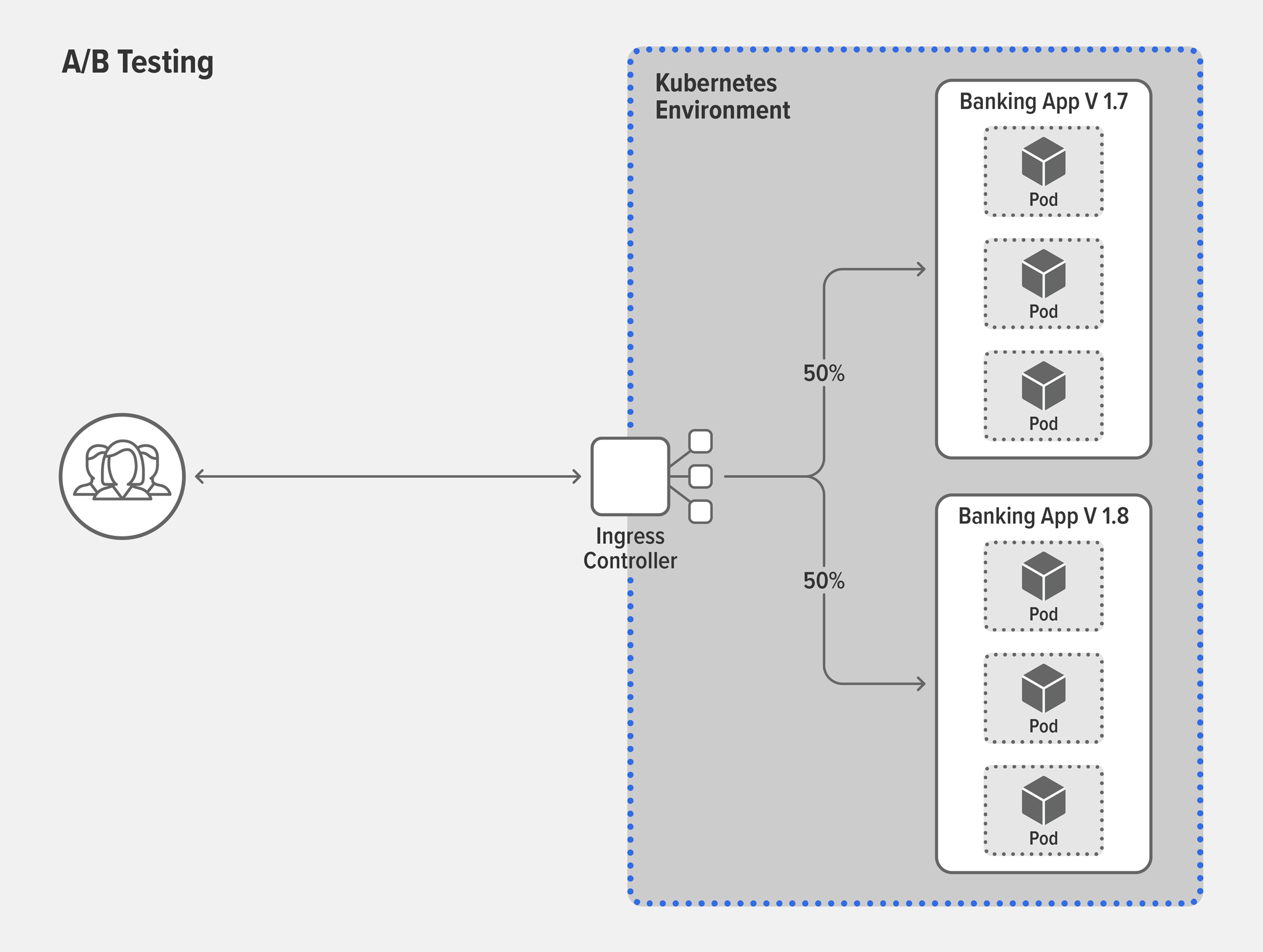

There’s a very easy way to tell that a company isn’t successfully using modern app development technologies – its customers are quick to complain on social media. They complain when they can’t stream the latest bingeworthy release. Or access online banking. Or make a purchase, because the cart is timing out.

Even if customers don’t complain publicly, that doesn’t mean their bad experience doesn’t have consequences. One of our customers – a large insurance company – told us that they lose customers when their homepage doesn’t load within 3 seconds.

All of those user complaints of poor performance or outages point to a common culprit: resiliency…or the lack of it. The beauty of microservices technologies – including containers and Kubernetes – is that they can significantly improve the customer experience by improving the resiliency of your apps. How? It’s all about the architecture.

I like to explain the core difference between monolithic and microservices architectures by using the analogy of a string of holiday lights. When a bulb goes out on an older‑style strand, the entire strand goes dark. If you can’t replace the bulb, the only thing worth decorating with that strand is the inside of your garbage can. This old style of lights is like a monolithic app, which also has tightly coupled components and fails if one component breaks.

But the lighting industry, like the software industry, detected this pain point. When a bulb breaks on a modern strand of lights, the others keep shining brightly, just as a well‑designed microservices app keeps working even when a service instance fails.

Kubernetes Traffic Management

Containers are a popular choice in microservices architectures because they are ideally suited for building an application using smaller, discrete components – they are lightweight, portable, and easy to scale. Kubernetes is the de facto standard for container orchestration, but there are a lot of challenges around making Kubernetes production‑ready. One element that improves both your control over Kubernetes apps and their resilience is a mature traffic management strategy that allows you to control services rather than packets, and to adapt traffic‑management rules dynamically or with the Kubernetes API. While traffic management is important in any architecture, for high‑performance apps two traffic‑management tools are essential: traffic control and traffic splitting.

Traffic Control

Traffic control (sometimes called traffic routing or traffic shaping) refers to the act of controlling where traffic goes and how it gets there. It’s a necessity when running Kubernetes in production because it allows you to protect your infrastructure and apps from attacks and traffic spikes. Two techniques to incorporate into your app development cycle are rate limiting and circuit breaking.

- Use case: I want to protect services from getting too many requests

  Solution: Rate limiting

  Whether malicious (for example, brute‑force password guessing and DDoS attacks) or benign (such as customers flocking to a sale), a high volume of HTTP requests can overwhelm your services and cause your apps to crash. Rate limiting restricts the number of requests a user can make in a given time period. Requests can include something as simple as a `GET` request for the homepage of a website or a `POST` request on a login form. When under DDoS attack, for example, you can use rate limiting to limit the incoming request rate to a value typical for real users.

- Use case: I want to avoid cascading failures

  Solution: Circuit breaking

  When a service is unavailable or experiencing high latency, it can take a long time for incoming requests to time out and clients to receive an error response. The long timeouts can potentially cause a cascading failure, in which the outage of one service leads to timeouts at other services and the ultimate failure of the application as a whole.

  Circuit breakers prevent cascading failure by monitoring for service failures. When the number of failed requests to a service exceeds a preset threshold, the circuit breaker trips and starts returning an error response to clients as soon as the requests arrive, effectively throttling traffic away from the service.

  The circuit breaker continues to intercept and reject requests for a defined amount of time before allowing a limited number of requests to pass through as a test. If those requests are successful, the circuit breaker stops throttling traffic. Otherwise, the clock resets and the circuit breaker again rejects requests for the defined time.
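To make the two techniques above concrete, here is a minimal Python sketch of each. It is an illustration only, not how NGINX or any Ingress controller implements them. The class names, the fixed‑window counting scheme, and the choice to count consecutive failures are all assumptions made for this example, and the clock is injectable so the behavior can be checked without waiting.

```python
import time


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client in each `window` seconds."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.counters = {}  # client -> (window index, request count)

    def allow(self, client):
        index = int(self.clock() // self.window)
        seen_index, count = self.counters.get(client, (index, 0))
        if seen_index != index:
            count = 0  # a new time window has started for this client
        if count >= self.limit:
            return False  # over the limit: reject without counting
        self.counters[client] = (index, count + 1)
        return True


class CircuitBreaker:
    """Fail fast after `max_failures` consecutive failures, then retest."""

    def __init__(self, max_failures, reset_after, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, service):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return "error: circuit open"  # service is not called at all
            # The wait is over: let this request through as a test.
        try:
            result = service()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # trip, or restart the clock
            return "error: service failed"
        self.failures = 0
        self.opened_at = None  # a successful request closes the circuit
        return result
```

In this sketch a client calls `limiter.allow(client_id)` before handling a request and wraps each upstream call as `breaker.call(fn)`. While the circuit is open, the error comes back immediately instead of after a long timeout, which is what keeps one failing service from stalling the services that depend on it.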

Traffic Splitting

Traffic splitting (sometimes called traffic testing) is a subcategory of traffic control and refers to the act of controlling the proportion of incoming traffic directed to different versions of a backend app running simultaneously in an environment (usually the current production version and an updated version). It’s an essential part of the app development cycle because it allows teams to test the functionality and stability of new features and versions without negatively impacting customers. Useful deployment scenarios include debug routing, canary deployments, A/B testing, and blue‑green deployments. (There is a fair amount of inconsistency in the use of these four terms across the industry. Here we use them as we understand their definitions.)

-

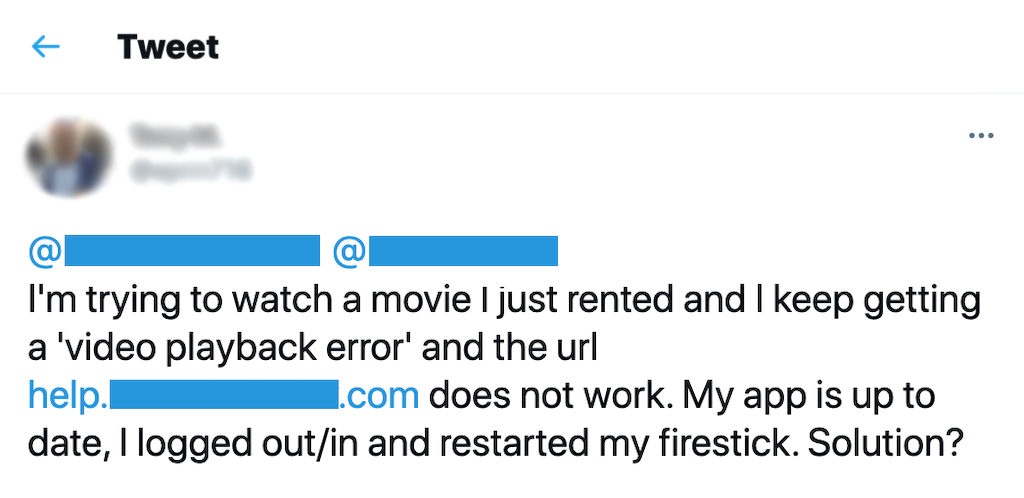

Use case: I’m ready to test a new version in production

Solution: Debug routingLet’s say you have a banking app and you’re going to add a credit score feature. Before testing with customers, you probably want to see how it performs in production. Debug routing lets you deploy it publicly yet “hide” it from actual users by allowing only certain users to access it, based on Layer 7 attributes such as a session cookie, session ID, or group ID. For example, you can allow access only to users who have an admin session cookie – their requests are routed to the new version with the credit score feature while everyone else continues on the stable version.

-

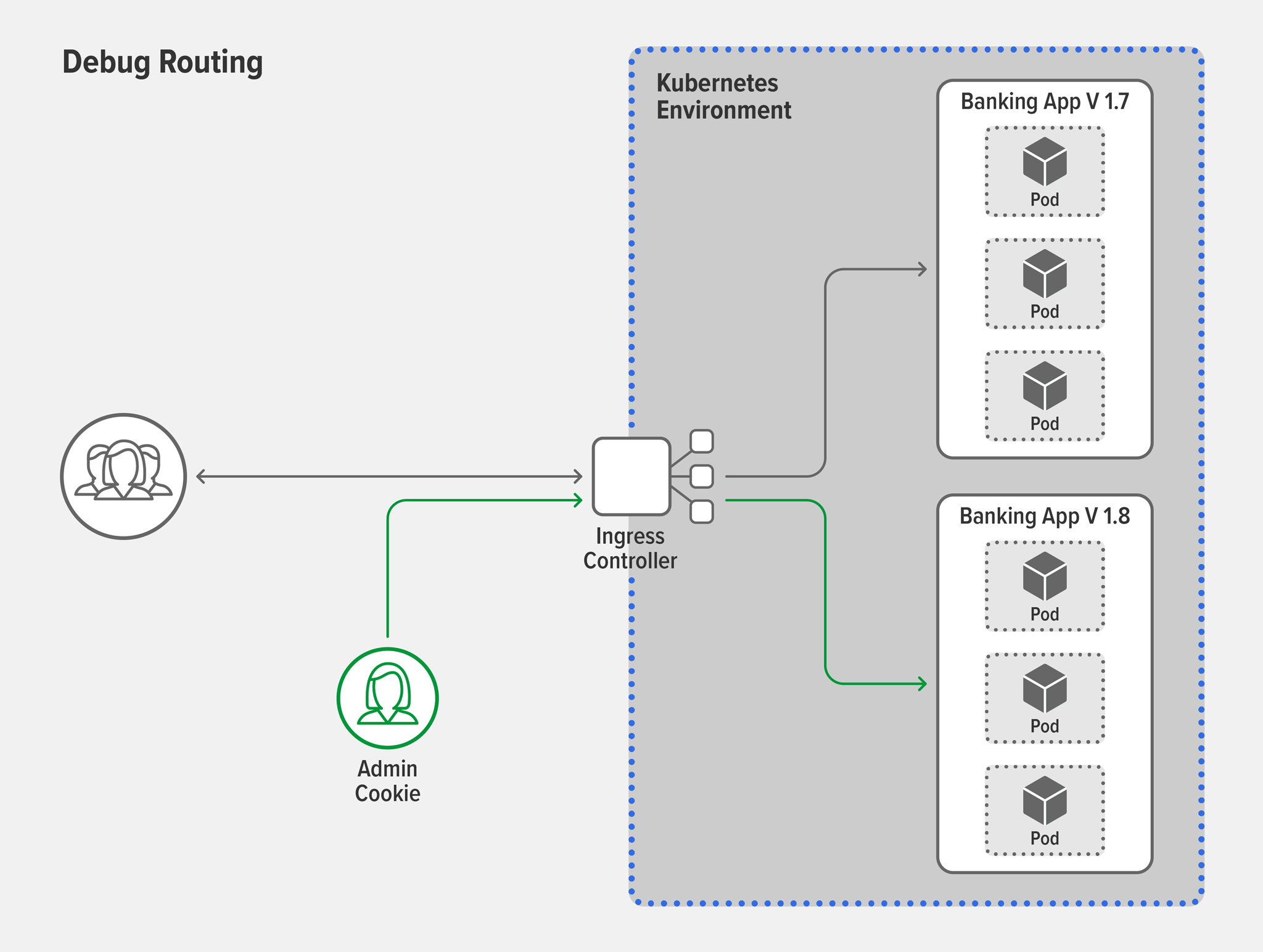

Use case: I need to make sure my new version is stable

Solution: Canary deploymentThe concept of canary deployment is taken from an historic mining practice, where miners took a caged canary into a coal mine to serve as an early warning of toxic gases. The gas killed the canary before killing the miners, allowing them to quickly escape danger. In the world of apps, no birds are harmed! Canary deployments provide a safe and agile way to test the stability of a new feature or version. A typical canary deployment starts with a high share (say, 99%) of your users on the stable version and moves a tiny group (the other 1%) to the new version. If the new version fails, for example crashing or returning errors to clients, you can immediately move the test group back to the stable version. If it succeeds, you can switch users from the stable version to the new one, either all at once or (as is more common) in a gradual, controlled migration.

-

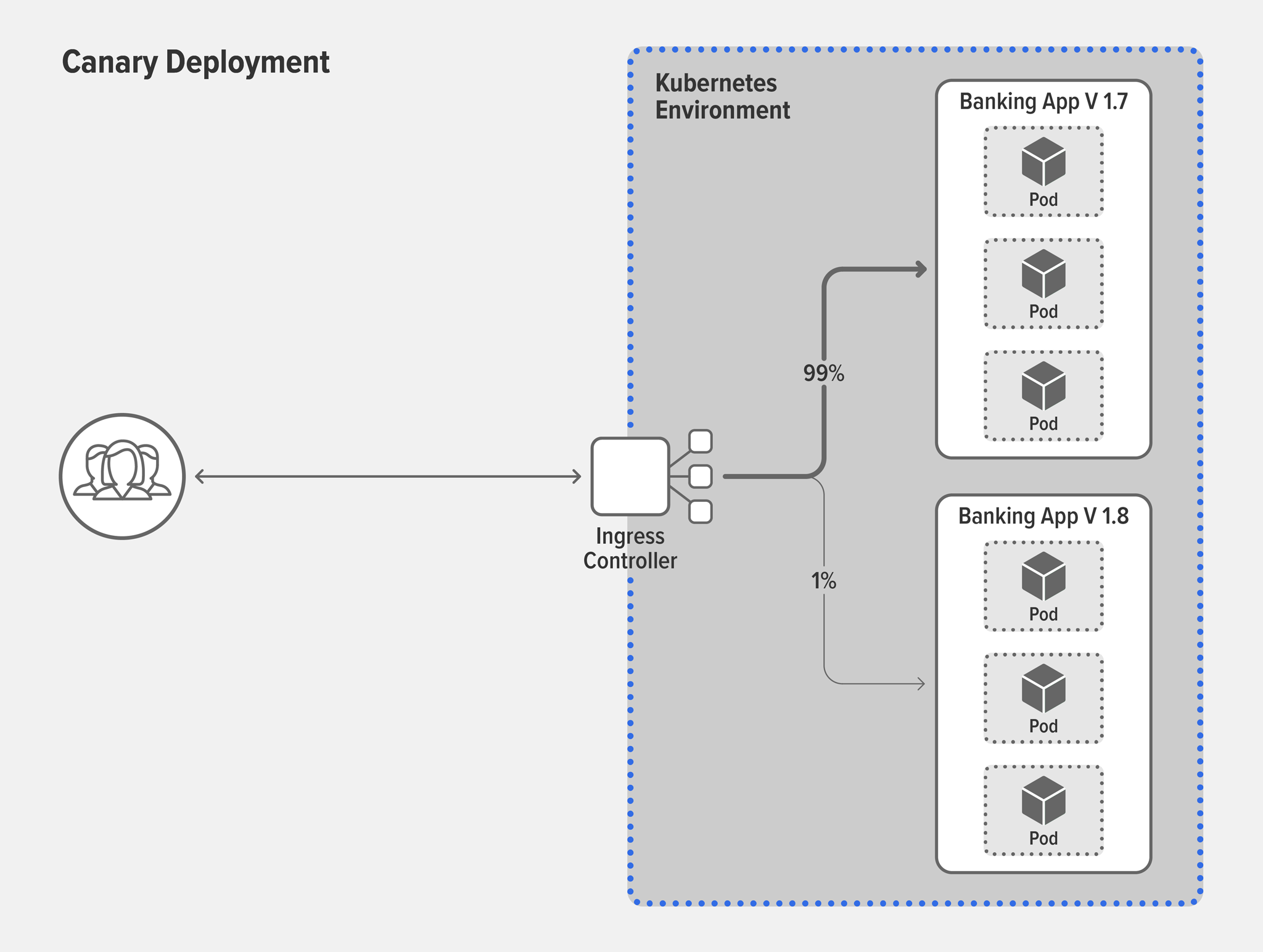

Use case: I need to find out if customers like a new version better than the current version

Solution: A/B testingNow that you’ve confirmed your new feature works in production, you might want to get customer feedback about the success of the feature, based on key performance indicators (KPIs) such as number of clicks, repeat users, or explicit ratings. A/B testing is a process used across many industries to measure and compare user behavior for the purpose of determining the relative success of different product or app versions across the customer base. In a typical A/B test, 50% of users get Version A (the current app version) while the remaining 50% gets Version B (the version with the new, but stable, feature). The winner is the one with the overall better set of KPIs.

-

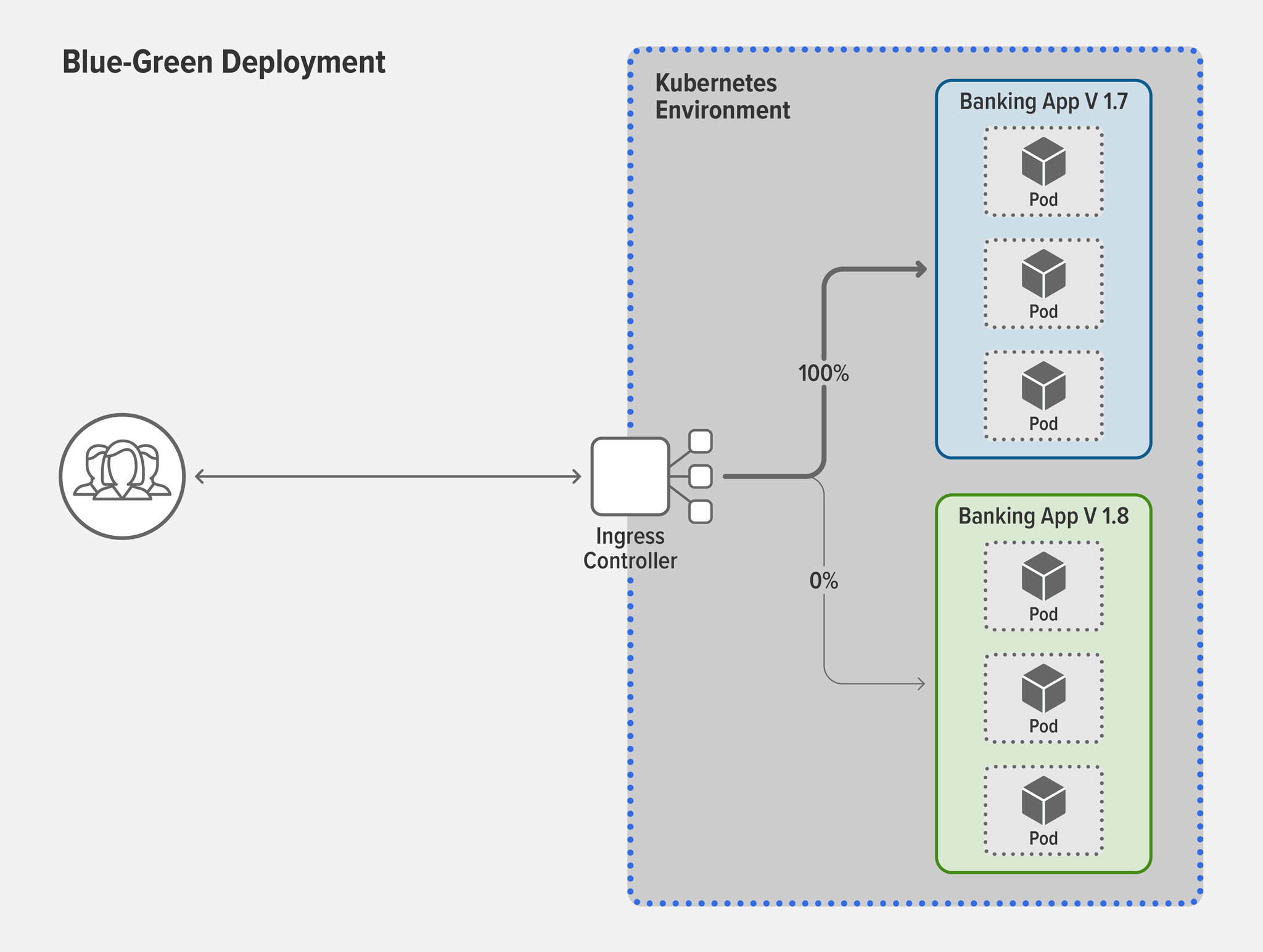

Use case: I want to move my users to a new version without downtime

Solution: Blue‑green deploymentNow let’s say your banking app is due for a major version change…congratulations! In the past, upgrading versions usually meant downtime for users because you had to take down the old version before moving the new version into production. But in today’s competitive environment, downtime for upgrades is unacceptable to most users. Blue‑green deployments greatly reduce, or even eliminate, downtime for upgrades. Simply keep the old version (blue) in production while simultaneously deploying the new version (green) alongside in the same production environment.

Most organizations don’t want to move 100% of users from blue to green at once – after all, what if the green version fails?! The solution is to use a canary deployment to move users in whatever increments best meet your risk‑mitigation strategy. If the new version is a disaster, you can easily revert everyone back to the stable version in just a couple of keystrokes.
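The four scenarios above come down to one routing decision per request. The Python sketch below shows that decision under some stated assumptions: the cookie name `session_role`, the function names, and the use of a hash to keep each user on one version are all hypothetical choices for illustration, not the behavior of any particular Ingress controller or service mesh.

```python
import hashlib


def bucket(user_id):
    """Map a user ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_version(user_id, cookies, new_percent):
    """Return "new" or "stable" for one request."""
    # Debug routing: a Layer 7 attribute (here a hypothetical cookie)
    # sends selected users to the new version regardless of the split.
    if cookies.get("session_role") == "admin":
        return "new"
    # Canary, A/B, or blue-green: send a fixed share of users to the
    # new version. Hashing keeps each user on the same version.
    return "new" if bucket(user_id) < new_percent else "stable"
```

With `new_percent` set to 1 this behaves like the canary described above, 50 gives an A/B test, and raising it step by step to 100 completes a blue‑green migration. Because a user's bucket never changes, anyone already on the new version stays there as the percentage grows.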

How NGINX Can Help

You can accomplish advanced traffic control and splitting with most Ingress controllers and service meshes. Which technology to use depends on your app architecture and use cases. For example, using an Ingress controller makes sense in these three scenarios:

- Your apps have just one endpoint, as with simple apps or monolithic apps that you have “lifted and shifted” into Kubernetes.

- There’s no service-to-service communication in your cluster.

- There is service-to-service communication but you aren’t yet using a service mesh.

If your deployment is complex enough to need a service mesh, a common use case is splitting traffic between services to test or upgrade individual microservices. For example, you might want to do a canary deployment behind your mobile front‑end, between two different versions of your geo‑location microservice API.

However, setting up traffic splitting with some Ingress controllers and service meshes can be time‑consuming and error‑prone, for a variety of reasons:

- Ingress controllers and service meshes from various vendors implement Kubernetes features in different ways.

- Kubernetes isn’t really designed to manage and understand Layer 7 traffic.

- Some Ingress controllers and service meshes don’t support sophisticated traffic management.

With NGINX Ingress Controller and NGINX Service Mesh, you can easily configure robust traffic routing and splitting policies in seconds. Check out this livestream demo with our experts and read on to learn how we save you time with easier configs, advanced customizations, and improved visibility.

Easier Configuration with NGINX Ingress Resources and the SMI Specification

These NGINX features make configuration easier:

- NGINX Ingress resources for NGINX Ingress Controller – While the standard Kubernetes Ingress resource makes it easy to configure SSL/TLS termination, HTTP load balancing, and Layer 7 routing, it doesn’t include the kind of customization features required for circuit breaking, A/B testing, and blue‑green deployment. Instead, non‑NGINX users have to use annotations, ConfigMaps, and custom templates which all lack fine‑grained control, are insecure, and are error‑prone and difficult to use.

  The NGINX Ingress Controller comes with NGINX Ingress resources as an alternative to the standard Ingress resource (which it also supports). They provide a native, type‑safe, and indented configuration style which simplifies implementation of Ingress load balancing. NGINX Ingress resources have an added benefit for existing NGINX users: they make it easier to repurpose load balancing configurations from non‑Kubernetes environments, so all your NGINX load balancers can use the same configurations.

- NGINX Service Mesh with SMI – NGINX Service Mesh implements the Service Mesh Interface (SMI), a specification that defines a standard interface for service meshes on Kubernetes, with typed resources such as `TrafficSplit`, `TrafficTarget`, and `HTTPRouteGroup`. Using standard Kubernetes configuration methods, NGINX Service Mesh and the NGINX SMI extensions make traffic‑splitting policies, like canary deployment, simple to deploy with minimal interruption to production traffic. For example, here’s how to define a canary deployment with NGINX Service Mesh:

  ```yaml
  apiVersion: split.smi-spec.io/v1alpha2
  kind: TrafficSplit
  metadata:
    name: target-ts
  spec:
    service: target-svc
    backends:
    - service: target-v1-0
      weight: 90
    - service: target-v2-0
      weight: 10
  ```

  Our tutorial, Deployments Using Traffic Splitting, walks through sample deployment patterns that leverage traffic splitting, including canary and blue-green deployments.

More Sophisticated Traffic Control and Splitting with Advanced Customizations

These NGINX features make it easy to control and split traffic in sophisticated ways:

- The key‑value store for canary deployments – When you’re performing A/B testing or blue‑green deployments, you might want to transition traffic to the new version at specific increments, for example 0%, 5%, 10%, 25%, 50%, and 100%. With most tools, this is a very manual process because you have to edit the percentage and reload the configuration file for each increment. With that amount of overhead, you might decide to take the risk of going straight from 5% to 100%. However, with the NGINX Plus-based version of NGINX Ingress Controller, you can leverage the key‑value store to change the percentages without the need for a reload.

- Circuit breaking with NGINX Ingress Controller – Sophisticated circuit breakers save time and improve resilience by more quickly detecting failures and failing over, and even activating custom, formatted error pages for upstreams that are unhealthy. For example, a circuit breaker for a search service might be configured to return a correctly formatted but empty set of search results. To get this level of sophistication, the NGINX Plus-based version of NGINX Ingress Controller uses active health checks which proactively monitor the health of your TCP and UDP upstream servers. Because it’s monitoring in real time, your clients will be less likely to experience apps that return errors.

- Circuit breaking with NGINX Service Mesh – The NGINX Service Mesh circuit breaker spec has three custom fields:

  - `errors` – The number of errors before the circuit trips

  - `timeoutSeconds` – The window for errors to occur within before tripping the circuit, as well as the amount of time to wait before closing the circuit

  - `fallback` – The name and port of the Kubernetes service to which traffic is rerouted after the circuit has been tripped

  While `errors` and `timeoutSeconds` are standard circuit‑breaker features, `fallback` boosts resilience further by enabling you to define a backup server. If your backup server responses are unique, they can be an early indicator of trouble in your cluster, allowing you to start troubleshooting right away.
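The key‑value store item in the list above relies on one idea: the routing code reads the split percentage from shared state on every request, so changing the value takes effect immediately with no reload. The Python sketch below illustrates that idea only. The `kv_store` dict is a stand‑in for a real key‑value store, the names `canary_percent`, `route`, and `ramp` are hypothetical, and nothing here calls an NGINX Plus API.

```python
import hashlib

# Stand-in for a shared key-value store; in this sketch it is a plain dict.
kv_store = {"canary_percent": 0}


def route(user_id, store=kv_store):
    """Pick a version using the percentage currently in the store."""
    percent = store["canary_percent"]  # read on every request, no reload
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    slot = int.from_bytes(digest[:8], "big") % 100  # stable slot 0-99
    return "new" if slot < percent else "stable"


def ramp(users, steps, store=kv_store):
    """Step through the rollout and record the observed share per step."""
    observed = {}
    for percent in steps:
        store["canary_percent"] = percent  # the only change per increment
        hits = sum(route(u, store) == "new" for u in users)
        observed[percent] = hits / len(users)
    return observed
```

Calling `ramp(users, [0, 5, 10, 25, 50, 100])` walks through the increments mentioned above. Each step is a single write to the store, which is why a gradual rollout becomes cheap enough that there is little reason to jump straight from 5% to 100%.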

Interpret Traffic Splitting Results with Dashboards

You’ve implemented your traffic splitting…now what? It’s time to analyze the result. This can be the hardest part because many organizations are missing key insights into how their Kubernetes traffic and apps are performing. NGINX makes getting insights easier with the NGINX Plus dashboard and pre‑built Grafana dashboards that visualize metrics exposed by the Prometheus Exporter. For more on improving visibility to gain insight, read How to Improve Visibility in Kubernetes on our blog.

Master Microservices with NGINX

The NGINX Ingress Controller based on NGINX Plus is available for a 30-day free trial that includes NGINX App Protect to secure your containerized apps.

To try NGINX Ingress Controller with NGINX Open Source, you can obtain the release source code, or download a prebuilt container from DockerHub.

The always‑free NGINX Service Mesh is available for download at f5.com.