Editor – This post is part of a 10-part series:

- Reduce Complexity with Production-Grade Kubernetes

- How to Improve Resilience in Kubernetes with Advanced Traffic Management

- How to Improve Visibility in Kubernetes

- Six Ways to Secure Kubernetes Using Traffic Management Tools (this post)

- A Guide to Choosing an Ingress Controller, Part 1: Identify Your Requirements

- A Guide to Choosing an Ingress Controller, Part 2: Risks and Future-Proofing

- A Guide to Choosing an Ingress Controller, Part 3: Open Source vs. Default vs. Commercial

- A Guide to Choosing an Ingress Controller, Part 4: NGINX Ingress Controller Options

- How to Choose a Service Mesh

- Performance Testing NGINX Ingress Controllers in a Dynamic Kubernetes Cloud Environment

You can also download the complete set of blogs as a free eBook – Taking Kubernetes from Test to Production.

As discussed in Secure Cloud‑Native Apps Without Losing Speed, we have observed three factors that make cloud‑native apps more difficult to secure than traditional apps:

- Cloud‑native app delivery causes tool sprawl and offers inconsistent enterprise‑grade services

- Cloud‑native app delivery costs can be unpredictable and high

- SecOps teams struggle to protect cloud‑native apps and are at odds with DevOps

While all three factors can equally impact security, the third factor can be the most difficult problem to solve, perhaps because it’s the most “human”. When SecOps isn’t able or empowered to protect cloud‑native apps, some of the consequences are obvious (vulnerabilities and breaches), but others are hidden, including slowed agility and stalled digital transformation.

Let’s dig deeper into those hidden costs. Organizations choose Kubernetes for its promise of agility and cost savings. But when there are security incidents in a Kubernetes environment, most organizations pull their Kubernetes deployments out of production. That slows down digital transformation initiatives that are essential for the future of the organization – never mind the wasted engineering efforts and money. The logical conclusion is: if you’re going to try to get Kubernetes from test to production, then security must be considered a strategic component that is owned by everyone in the organization.

In this post, we cover six security use cases that you can solve with Kubernetes traffic‑management tools, enabling SecOps to collaborate with DevOps and NetOps to better protect your cloud‑native apps and APIs. A combination of these techniques is often used to create a comprehensive security strategy designed to keep apps and APIs safe while minimizing the impact to customers.

- Resolve CVEs quickly to avoid cyberattacks

- Stop OWASP Top 10 and denial-of-service attacks

- Offload authentication and authorization from apps

- Set up self‑service with guardrails

- Implement end-to-end encryption

- Ensure clients are using a strong cipher with a trusted implementation

Security and Identity Terminology

Before we jump into the use cases, here’s a quick overview of some security and identity terms you’ll encounter throughout this post.

- Authentication and authorization – Functions required to ensure only the “right” users and services can gain access to backends or application components:

  - Authentication – Verification of identity to ensure that clients making requests are who they claim to be. Accomplished through ID tokens, such as passwords or JSON Web Tokens (JWTs).

  - Authorization – Verification of permission to access a resource or function. Accomplished through access tokens, such as Layer 7 attributes like session cookies, session IDs, group IDs, or token contents.

- Common Vulnerabilities and Exposures (CVEs) – A database of publicly disclosed flaws “in a software, firmware, hardware, or service component resulting from a weakness that can be exploited, causing a negative impact to the confidentiality, integrity, or availability of an impacted component or components” (https://www.cve.org/). CVEs may be discovered by the developers who manage the tool, a penetration tester, a user or customer, or someone from the community (such as a “bug hunter”). It’s common practice to give the software owner time to develop a patch before the vulnerability is publicly disclosed, so as not to give bad actors an advantage.

- Denial-of-service (DoS) attack – An attack in which a bad actor floods a website with requests (TCP/UDP or HTTP/HTTPS) with the goal of making the site crash. Because DoS attacks impact availability, their primary outcome is damage to the target’s reputation. A distributed denial-of-service (DDoS) attack, in which multiple sources target the same network or service, is more difficult to defend against due to the potentially large network of attackers. DoS protection requires a tool that adaptively identifies and prevents attacks. Learn more in What is Distributed Denial of Service (DDoS)?

- End-to-end encryption (E2EE) – The practice of fully encrypting data as it passes from the user to the app and back. E2EE requires SSL/TLS certificates and potentially mTLS.

- Mutual TLS (mTLS) – The practice of requiring authentication (via SSL/TLS certificate) for both the client and the host. Use of mTLS also protects the confidentiality and integrity of the data passing between the client and the host. mTLS can be accomplished all the way down to the Kubernetes pod level, between two services in the same cluster. For an excellent introduction to SSL/TLS and mTLS, see What is mTLS? at F5 Labs.

- Single sign‑on (SSO) – SSO technologies (including SAML, OAuth, and OIDC) make it easier to manage authentication and authorization:

  - Simplified authentication – SSO eliminates the need for a user to have a unique ID token for each different resource or function.

  - Standardized authorization – SSO facilitates setting of user access rights based on role, department, and level of seniority, eliminating the need to configure permissions for each user individually.

- SSL (Secure Sockets Layer)/TLS (Transport Layer Security) – A protocol for establishing authenticated and encrypted links between networked computers. SSL/TLS certificates authenticate a website’s identity and establish an encrypted connection. Although TLS replaced SSL in 1999 (and the last SSL version, SSL 3.0, was formally deprecated in 2015), it is still common to refer to these related technologies as “SSL” or “SSL/TLS”. For the sake of consistency, we’ll use SSL/TLS for the remainder of this post.

- Web application firewall (WAF) – A reverse proxy that detects and blocks sophisticated attacks against apps and APIs (including OWASP Top 10 and other advanced threats) while letting “safe” traffic through. WAFs defend against attacks that try to harm the target by stealing sensitive data or hijacking the system. Some vendors consolidate WAF and DoS protection in the same tool, whereas others offer separate WAF and DoS tools.

- Zero trust – A security concept, frequently used in high‑security organizations but relevant to everyone, in which data must be secured at all stages of storage and transport. The organization decides not to “trust” any users or devices by default and instead requires that all traffic be thoroughly vetted. A zero‑trust architecture typically combines authentication, authorization, and mTLS, and very often includes E2EE as well.

Use Case: Resolve CVEs Quickly to Avoid Cyberattacks

Solution: Use tools with timely and proactive patch notifications

According to a study by the Ponemon Institute, in 2019 there was an average “grace period” of 43 days between the release of a patch for a critical or high‑priority vulnerability and organizations seeing attacks that tried to exploit the vulnerability. At F5 NGINX, we’ve seen that window narrow significantly in the following years (even down to day zero in the case of Apple iOS 15 in 2021), which is why we recommend patching as soon as possible. But what if patches for your traffic management tools aren’t available for weeks, or even months, after a CVE is announced?

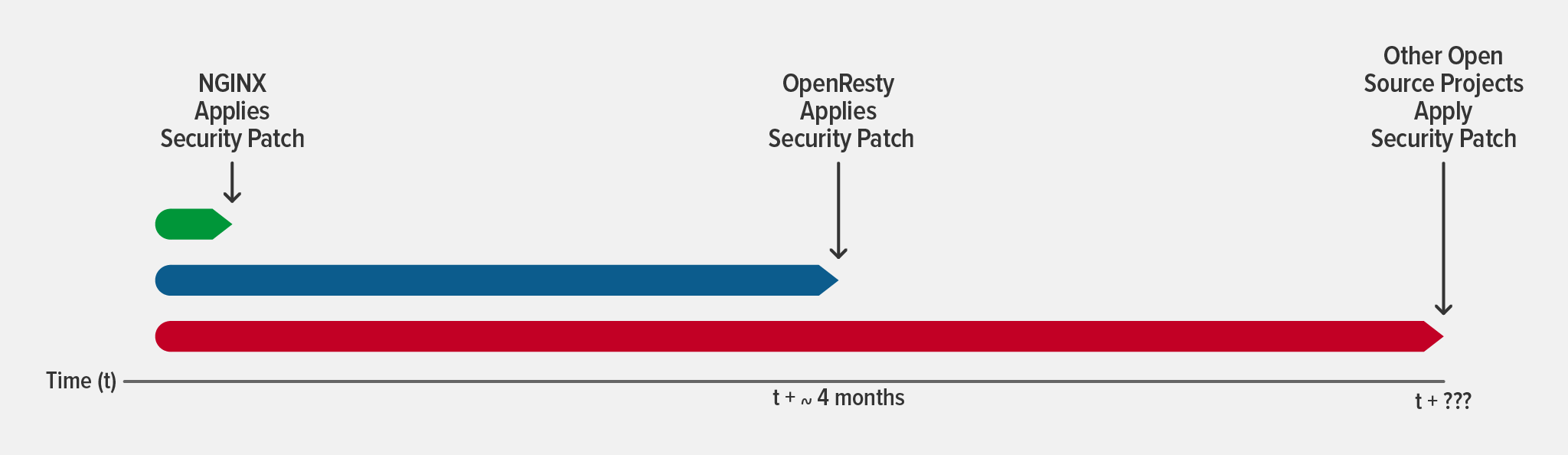

Tools that are developed and maintained by community contributors (rather than a dedicated engineering team) have the potential to lag weeks or months behind CVE announcements, making it unlikely that organizations can patch within that 43-day window. In one case, it took OpenResty four months to apply an NGINX‑related security patch. That left anyone using an OpenResty‑based Ingress controller vulnerable for at least four months, but realistically there’s usually additional delay before patches are available for software that depends on an open source project.
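To make the arithmetic concrete, here is a minimal Python sketch that measures how long a deployment stays unpatched after attacks are expected to begin, using the 43‑day average grace period cited above. It assumes the attack clock starts when the upstream fix is published; the dates are hypothetical and are not taken from the OpenResty case.

```python
from datetime import date, timedelta

# Average grace period between patch release and exploit attempts (Ponemon, 2019).
GRACE_PERIOD = timedelta(days=43)


def days_past_grace(upstream_patch: date, your_patch: date,
                    grace: timedelta = GRACE_PERIOD) -> int:
    """Days your deployment stays unpatched after attacks are expected to start.

    Assumption: the clock starts when the upstream fix is published.
    Returns 0 if your patched build ships within the grace period.
    """
    attacks_expected = upstream_patch + grace
    return max(0, (your_patch - attacks_expected).days)


# Hypothetical dates: an upstream fix, and a dependent project that ships it
# four months later (before any further downstream repackaging delay).
upstream = date(2024, 1, 1)
dependent = date(2024, 5, 1)
print(days_past_grace(upstream, dependent))  # 78
```

In this hypothetical, a four‑month lag leaves roughly 11 weeks of exposure after attacks would typically begin, and every additional layer of repackaging adds to that number.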

To get the fastest CVE patching, look for two characteristics when selecting traffic management tools:

- A dedicated engineering team – When tool development is managed by an engineering team instead of community volunteers, you know there’s a group of people who are dedicated to the health of the tool and can prioritize release of a patch as soon as possible.

- An integrated code base – Without any external dependencies (like we discussed with OpenResty), patches are just an agile sprint away.

For more on CVE patching, read Mitigating Security Vulnerabilities Quickly and Easily with NGINX Plus.

Use Case: Stop OWASP Top 10 and DoS Attacks

Solution: Deploy flexible, Kubernetes‑friendly WAF and DoS protection

Choosing the right WAF and DoS protection for Kubernetes apps depends on two factors (in addition to features):

- Flexibility – In some scenarios it’s best to deploy tools inside Kubernetes and in others outside it, so you want infrastructure‑agnostic tools that can run in either place. Using the same tool for all your deployments enables you to reuse policies and lowers the learning curve for your SecOps teams.

- Footprint – The best Kubernetes tools have a small footprint, which allows for appropriate resource consumption with minimal impact to throughput, requests per second, and latency. Given that DevOps teams often resist security tools because of a perception that they slow down apps, choosing a high‑performance tool with a small footprint can increase the probability of adoption.

While a tool that consolidates WAF and DoS protection may seem more efficient, it often has drawbacks in both CPU usage (due to its larger footprint) and flexibility: you’re forced to deploy the WAF and DoS protection together, even when you don’t need both. Ultimately, both issues can drive up the total cost of ownership for your Kubernetes deployments while creating budget challenges for other essential tools and services.

Once you’ve chosen the right security tools for your organization, it’s time to decide where to deploy those tools. There are four locations where application services can typically be deployed to protect Kubernetes apps:

- At the front door (on an external load balancer such as F5 NGINX Plus or F5 BIG‑IP) – Good for “coarse‑grained” global protection because it allows you to apply global policies across multiple clusters

- At the edge (on an Ingress controller such as F5 NGINX Ingress Controller) – Ideal for providing “fine‑grained” protection that’s standard across a single cluster

- At the service (on a lightweight load balancer like NGINX Plus) – Can be a necessary approach when a small number of services within a cluster share a need for unique policies

- At the pod (as part of the application) – A very custom approach that might be used when the policy is specific to the app

So, out of the four options, which is best? Well…that depends!

Where to Deploy a WAF

First, we’ll look at WAF deployment options since that tends to be a more nuanced choice.

- Front door and edge – If your organization prefers a “defense in depth” security strategy, then we recommend deploying a WAF at both the external load balancer and the Ingress controller to deliver an efficient balance of global and custom protections.

- Front door or edge – In the absence of a “defense in depth” strategy, a single location is acceptable, and the deployment location depends on ownership. When a traditional NetOps team owns security, they may be more comfortable managing it on a traditional proxy (the external load balancer). However, DevSecOps teams that are comfortable with Kubernetes (and prefer having their security configuration in the vicinity of their cluster configs) may choose to deploy a WAF at the ingress level.

- Per service or pod – If your teams have specific requirements for their services or apps, then they can deploy additional WAFs in an à la carte fashion. But be aware: these locations come with higher costs. In addition to increased development time and a higher cloud budget, this choice can also increase operational costs related to troubleshooting efforts – such as when determining “Which of our WAFs is unintentionally blocking traffic?”

Where to Deploy DoS Protection

Protection against DoS attacks is more straightforward since it’s only needed at one location – either at the front door or at the Ingress controller. If you deploy a WAF both at the front door and the edge, then we recommend that you deploy DoS protection in front of the front‑door WAF, as it’s the most global. That way, unwanted traffic can be thinned out before hitting the WAF, allowing you to make more efficient use of compute resources.
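The ordering argument can be sketched in a few lines of Python: a cheap per‑client admission check runs first, and only admitted requests reach the more expensive WAF inspection. This is a minimal sketch that assumes a simple token‑bucket rate limit as a stand‑in for DoS protection (real DoS tools use adaptive, behavioral detection); the `waf` callable and the client addresses are hypothetical placeholders.

```python
from typing import Callable, Dict


class TokenBucket:
    """Per-client token bucket: refills `rate` tokens/second, bursts to `capacity`."""

    def __init__(self, rate: float, capacity: float, now: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at the bucket size.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class FrontDoor:
    """DoS-style admission control placed in front of a (hypothetical) WAF."""

    def __init__(self, waf: Callable[[str], bool], rate: float, capacity: float) -> None:
        self.waf = waf
        self.rate = rate
        self.capacity = capacity
        self.buckets: Dict[str, TokenBucket] = {}
        self.waf_calls = 0  # how much WAF work was actually performed

    def handle(self, client_ip: str, payload: str, now: float) -> str:
        bucket = self.buckets.get(client_ip)
        if bucket is None:
            bucket = self.buckets[client_ip] = TokenBucket(self.rate, self.capacity, now)
        if not bucket.allow(now):
            return "429 dropped before WAF"  # flood traffic never costs WAF compute
        self.waf_calls += 1
        return "200 forwarded" if self.waf(payload) else "403 blocked by WAF"
```

If a single client sends 100 requests in the same instant to a `FrontDoor` with `capacity=5`, only five reach the WAF; the other 95 are discarded by the cheaper check. That asymmetry is why thinning traffic before the front‑door WAF saves compute.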

For more details on each of these deployment scenarios, read Deploying Application Services in Kubernetes, Part 2.

Use Case: Offload Authentication and Authorization from Apps

Solution: Centralize authentication and authorization at the point of ingress

Authentication and authorization are common non‑functional requirements that often get built into apps and services. On a small scale, this practice adds a manageable amount of complexity that’s acceptable when the app doesn’t require frequent updates. But with faster release velocities at larger scale, integrating authentication and authorization into your apps becomes untenable. Ensuring that each app maintains the appropriate access protocols can distract from the business logic of the app, or worse, can get overlooked and lead to a data breach. While use of SSO technologies can improve security by replacing separate usernames and passwords with one set of credentials, developers still have to include code in their apps to interface with the SSO system.

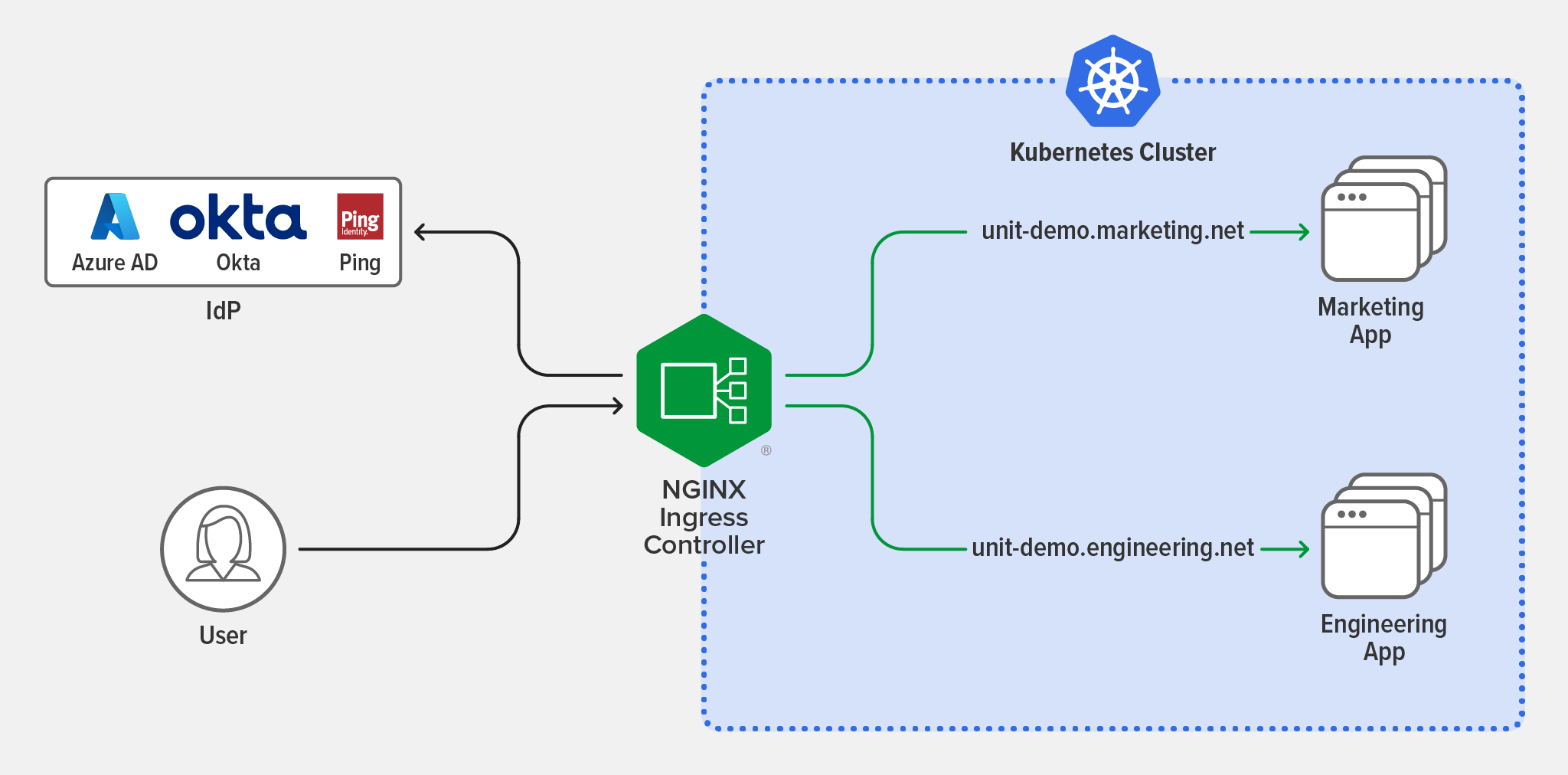

There’s a better way: offload authentication and authorization to an Ingress controller.

Because the Ingress controller already inspects all traffic entering the cluster and routes it to the appropriate services, it’s an efficient choice for centralized authentication and authorization. This relieves developers of building, maintaining, and replicating the logic in application code; instead, they can quickly leverage SSO technologies at the ingress layer using the native Kubernetes API.

For more on this topic, read Implementing OpenID Connect Authentication for Kubernetes with Okta and NGINX Ingress Controller.

Use Case: Set Up Self-Service with Guardrails

Solution: Implement role‑based access control (RBAC)

Kubernetes uses RBAC to control the resources and operations available to different types of users. This is a valuable security measure as it allows an administrator or superuser to determine how users, or groups of users, can interact with any Kubernetes object or specific namespace in the cluster.

While Kubernetes RBAC is enabled by default, you need to take care that your Kubernetes traffic management tools are also RBAC‑enabled and can align with your organization’s security needs. With RBAC in place, users get gated access to the functionality they need to do their jobs without waiting around for a ticket to be fulfilled. But without RBAC configured, users can gain permissions they don’t need or aren’t entitled to, which can lead to vulnerabilities if the permissions are misused.

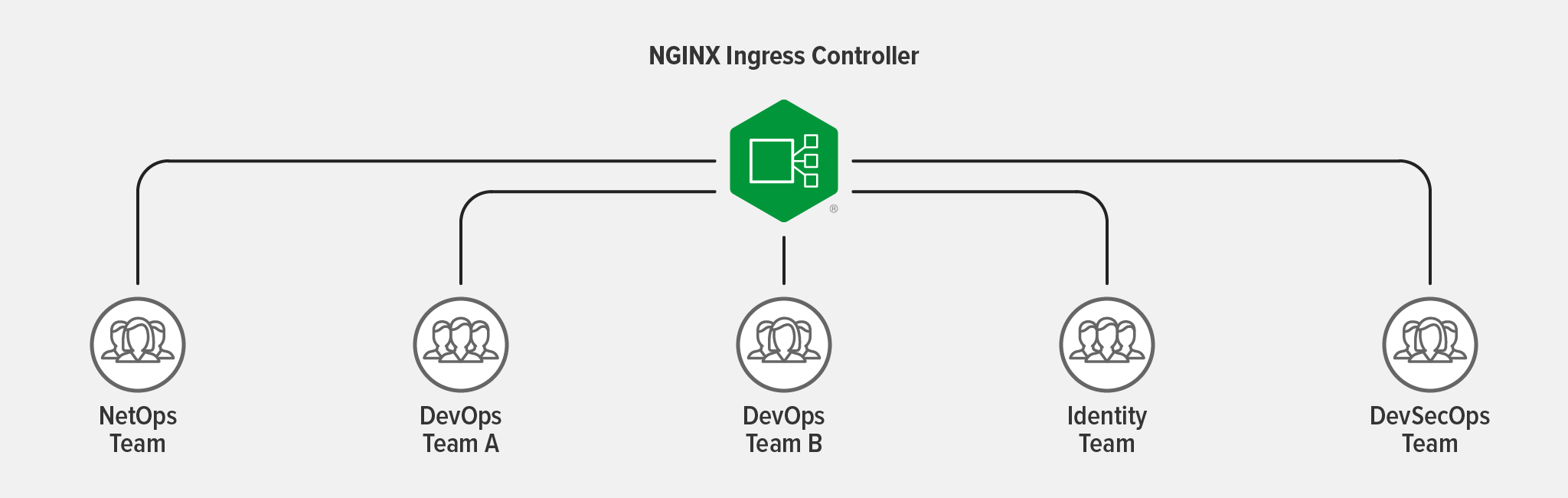

An Ingress controller is a prime example of a tool that can serve numerous people and teams when configured with RBAC. When the Ingress controller allows for fine‑grained access management – even down to a single namespace – you can use RBAC to enable efficient use of resources through multi‑tenancy.

As an example, multiple teams might use the Ingress controller as follows:

- NetOps Team – Configures external entry point of the application (like the hostname and TLS certificates) and delegates traffic control policies to various teams

- DevOps Team A – Provisions TCP/UDP load balancing and routing policies

- DevOps Team B – Configures rate‑limiting policies to protect services from excessive requests

- Identity Team – Manages authentication and authorization components while configuring mTLS policies as part of an end-to-end encryption strategy

- DevSecOps Team – Sets WAF policies
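The core idea behind this kind of delegation is default deny: each team is granted specific verbs on specific resource kinds in specific namespaces, and anything not explicitly granted is refused. The minimal Python sketch below models that evaluation for three of the teams above. Team names, namespaces, and resource names are hypothetical (loosely modeled on Ingress controller custom resources); in a real cluster the same intent is expressed as Role and RoleBinding objects that the Kubernetes API server evaluates, not as application code.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List

READ_WRITE: FrozenSet[str] = frozenset({"get", "list", "watch", "create", "update", "patch"})


@dataclass(frozen=True)
class Rule:
    namespace: str
    resources: FrozenSet[str]
    verbs: FrozenSet[str]


# Hypothetical bindings for three of the teams listed above.
BINDINGS: Dict[str, List[Rule]] = {
    # NetOps owns the external entry point: hostnames and TLS certificates.
    "netops": [Rule("ingress", frozenset({"virtualservers", "secrets"}), READ_WRITE)],
    # DevOps Team A manages its own routing and TCP/UDP load balancing.
    "devops-a": [Rule("team-a", frozenset({"virtualserverroutes", "transportservers"}), READ_WRITE)],
    # DevOps Team B manages rate-limiting policies for its own services.
    "devops-b": [Rule("team-b", frozenset({"policies"}), READ_WRITE)],
}


def is_allowed(team: str, verb: str, resource: str, namespace: str) -> bool:
    """Default deny: permit only what some rule bound to the team grants."""
    return any(
        rule.namespace == namespace and resource in rule.resources and verb in rule.verbs
        for rule in BINDINGS.get(team, [])
    )
```

Here Team B can create policies in `team-b` without a ticket, but cannot touch `team-a`, and no team can `delete` anything because no rule grants it. Against a live cluster, `kubectl auth can-i` answers the equivalent question for a given user.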

To learn how to leverage RBAC in NGINX Ingress Controller, watch Advanced Kubernetes Deployments with NGINX Ingress Controller. Starting at 13:50, our experts explain how to leverage RBAC and resource allocation for security, self‑service, and multi‑tenancy.

Use Case: Implement End-to-End Encryption

Solution: Use traffic management tools

End-to-end encryption (E2EE) is becoming an increasingly common requirement for organizations that handle sensitive or personal information. Whether it’s financial data or social media messaging, consumer privacy expectations combined with regulations like GDPR and HIPAA are driving demand for this type of protection. The first step in achieving E2EE is either to architect your backend apps to accept SSL/TLS traffic or to use a tool that offloads SSL/TLS management from your apps (the preferred option for separation of security function, performance, key management, etc.). Then, you configure your traffic management tools depending on the complexity of your environment.

Most Common Scenario: E2EE Using an Ingress Controller

When you have apps with just one endpoint (simple apps, or monolithic apps that you’ve “lifted and shifted” into Kubernetes) or there’s no service-to-service communication, then you can use an Ingress controller to implement E2EE within Kubernetes.

Step 1: Ensure your Ingress controller only allows encrypted SSL/TLS connections using either service‑side or mTLS certificates, ideally for both ingress and egress traffic.
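To show what this policy means at the TLS‑library level, here is a minimal sketch using Python’s standard `ssl` module: a server‑side context that refuses protocols older than TLS 1.2 and requires every client to present a certificate (mTLS). It illustrates the policy only and is not NGINX Ingress Controller configuration; the certificate file paths are hypothetical parameters.

```python
import ssl
from typing import Optional


def build_mtls_server_context(cert_file: Optional[str] = None,
                              key_file: Optional[str] = None,
                              client_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that rejects legacy protocols and any client
    that cannot present a certificate signed by `client_ca_file`."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSL 3.0, TLS 1.0, TLS 1.1
    ctx.verify_mode = ssl.CERT_REQUIRED  # mTLS: a client certificate is mandatory
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # server identity
    if client_ca_file:
        ctx.load_verify_locations(cafile=client_ca_file)  # CA that signs client certs
    return ctx
```

A listener wrapped with `ctx.wrap_socket(sock, server_side=True)` then fails the handshake for plaintext clients and for clients without a trusted certificate, which is the “encrypted connections only” behavior this step asks for.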

Step 2: Address the typical default setting that requires the Ingress controller to decrypt and re‑encrypt traffic before sending it to the apps. This can be accomplished in a couple of ways – the method you choose depends on your Ingress controller and requirements:

- If your Ingress controller supports SSL/TLS passthrough, it can route SSL/TLS‑encrypted connections based on the Server Name Indication (SNI) extension in the TLS ClientHello, without decrypting them or requiring access to the SSL/TLS certificates or keys.

- Alternatively, you can set up SSL/TLS termination, where the Ingress controller terminates the traffic, then proxies it to the backends or upstreams, either in clear text (which leaves the hop inside the cluster unencrypted) or by re‑encrypting the traffic with mTLS or service‑side SSL/TLS to your Kubernetes services.
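Passthrough works because the server name travels unencrypted in the TLS ClientHello, before any keys are exchanged. The minimal Python sketch below reads the SNI value from the first bytes of a connection without decrypting anything, which is all a passthrough router needs to pick a backend. It assumes the whole ClientHello arrives in a single TLS record and does not handle fragmentation or Encrypted Client Hello; the `BACKENDS` table is hypothetical.

```python
import struct
from typing import Dict, Optional


def extract_sni(data: bytes) -> Optional[str]:
    """Return the host_name from a TLS ClientHello record, or None."""
    try:
        if data[0] != 0x16:           # record type 22 = handshake
            return None
        pos = 5                       # skip record header: type, version, length
        if data[pos] != 0x01:         # handshake type 1 = ClientHello
            return None
        pos += 4                      # handshake type + 3-byte length
        pos += 2 + 32                 # client_version + random
        pos += 1 + data[pos]          # session_id
        (cs_len,) = struct.unpack_from("!H", data, pos)
        pos += 2 + cs_len             # cipher_suites
        pos += 1 + data[pos]          # compression_methods
        (ext_total,) = struct.unpack_from("!H", data, pos)
        pos += 2
        end = pos + ext_total
        while pos + 4 <= end:
            ext_type, ext_len = struct.unpack_from("!HH", data, pos)
            pos += 4
            if ext_type == 0x0000:    # server_name extension
                # Layout: list length (2 bytes), name_type (1), name length (2), name.
                name_type = data[pos + 2]
                (name_len,) = struct.unpack_from("!H", data, pos + 3)
                if name_type != 0:    # 0 = host_name
                    return None
                return data[pos + 5:pos + 5 + name_len].decode("ascii")
            pos += ext_len
    except (IndexError, struct.error, UnicodeDecodeError):
        return None
    return None


# Hypothetical passthrough routing table: SNI hostname -> backend address.
BACKENDS: Dict[str, str] = {"app.example.com": "10.0.0.12:8443"}


def pick_backend(first_bytes: bytes) -> Optional[str]:
    """Choose a backend from the still-encrypted stream's ClientHello."""
    sni = extract_sni(first_bytes)
    return BACKENDS.get(sni) if sni else None
```

Everything after the ClientHello is forwarded as opaque bytes. The proxy never holds the certificate or private key, which is the property that distinguishes passthrough from termination.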

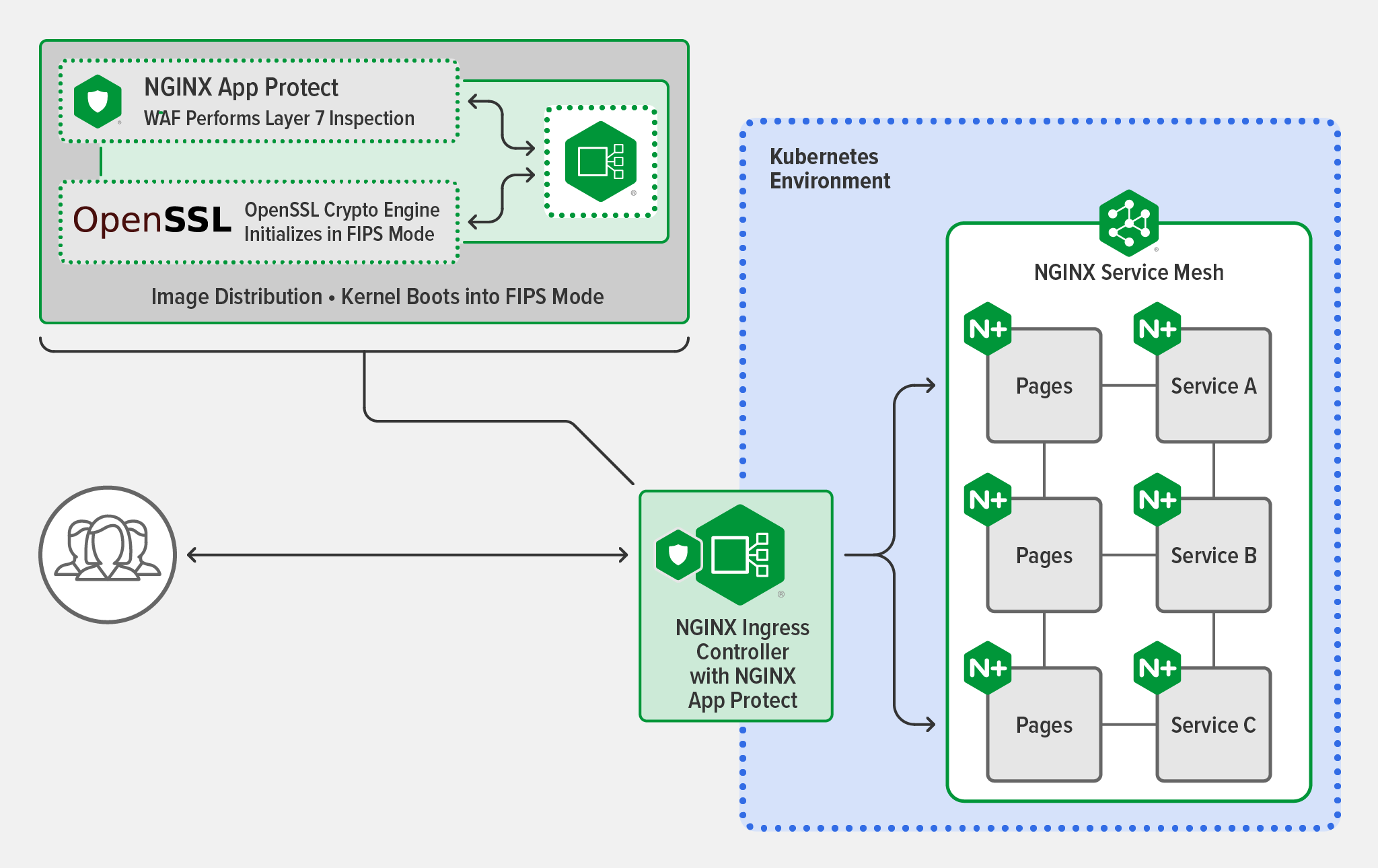

Less Common Scenario: E2EE Using an Ingress Controller and Service Mesh

If there’s service-to-service communication within your cluster, you need to implement E2EE on two planes: ingress‑egress traffic with the Ingress controller and service-to-service traffic with a service mesh. In E2EE, a service mesh’s role is to ensure that only specific services are allowed to talk to each other and that they do so in a secure manner. When you’re setting up a service mesh for E2EE, you need to enable a zero‑trust environment through two factors: mTLS between services (set to require a certificate) and traffic access control between services (dictating which services are authorized to communicate). Ideally, you also implement mTLS between the applications (managed by a service mesh and the ingress‑egress controller) for true E2EE security throughout the Kubernetes cluster.
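The two zero‑trust factors can be illustrated with a minimal Python sketch of the decision a mesh makes for each service‑to‑service call: reject the call unless the caller presented a verified mTLS identity, then allow it only if that identity is explicitly listed for the destination. The service names and SPIFFE‑style identity strings are hypothetical, and a real mesh derives the identity from the peer certificate, which this sketch does not model.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional


@dataclass(frozen=True)
class Peer:
    # Identity from the verified client certificate; None means the caller
    # did not complete an mTLS handshake.
    identity: Optional[str]


# Hypothetical access policy: destination service -> identities allowed to call it.
ALLOWED_CALLERS: Dict[str, FrozenSet[str]] = {
    "payments": frozenset({"spiffe://cluster.local/ns/shop/sa/checkout"}),
    "checkout": frozenset({"spiffe://cluster.local/ns/shop/sa/frontend"}),
}


def authorize_call(peer: Peer, destination: str) -> bool:
    """Zero trust: require an mTLS identity, then an explicit allow entry."""
    if peer.identity is None:
        return False  # factor 1: mTLS is mandatory, so plaintext callers are rejected
    # Factor 2: traffic access control, deny unless explicitly allowed.
    return peer.identity in ALLOWED_CALLERS.get(destination, frozenset())
```

With this policy, `frontend` may call `checkout` but not `payments` directly, and any destination missing from the table is unreachable by default.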

For more on encrypting data that’s been exposed on the wire, read The mTLS Architecture in NGINX Service Mesh.

Use Case: Ensure Clients Are Using a Strong Cipher with a Trusted Implementation

Solution: Comply with the Federal Information Processing Standards (FIPS)

In the software industry, FIPS usually refers to the publication specifically about cryptography, FIPS PUB 140-2 Security Requirements for Cryptographic Modules, which is a joint effort between the United States and Canada. It standardizes the testing and certification of cryptographic modules that are accepted by the federal agencies of both countries for the protection of sensitive information. “But wait!” you say. “I don’t care about FIPS because I don’t work with North American government entities.” Becoming FIPS‑compliant can be a smart move regardless of your industry or geography, because FIPS is also the de facto global cryptographic baseline.

Complying with FIPS doesn’t have to be difficult, but it does require that both your operating system and relevant traffic management tools can operate in FIPS mode. There’s a common misconception that FIPS compliance is achieved simply by running the operating system in FIPS mode. Even with the operating system in FIPS mode, it’s still possible that clients communicating with your Ingress controller aren’t using a strong cipher. When operating in FIPS mode, your operating system and Ingress controller may use only a subset of the typical SSL/TLS ciphers.
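One way to catch the gap between “the OS is in FIPS mode” and “clients use a strong cipher” is to check the cipher each connection actually negotiated. The minimal Python sketch below checks the `(name, protocol, bits)` tuple that `ssl.SSLSocket.cipher()` returns against an allowlist of TLS 1.2 ECDHE/AES‑GCM suites and TLS 1.3 AES‑GCM suites. The allowlist is an illustrative assumption, not an authoritative FIPS 140‑2 list; derive the real one from your validated module’s security policy.

```python
from typing import Optional, Tuple

# Illustrative allowlist (assumption, not an official FIPS list), in the
# OpenSSL naming that Python's ssl module reports. ChaCha20-Poly1305 suites
# are deliberately absent because ChaCha20 is not a FIPS-approved cipher.
APPROVED_CIPHERS = frozenset({
    "TLS_AES_128_GCM_SHA256",
    "TLS_AES_256_GCM_SHA384",
    "ECDHE-ECDSA-AES128-GCM-SHA256",
    "ECDHE-ECDSA-AES256-GCM-SHA384",
    "ECDHE-RSA-AES128-GCM-SHA256",
    "ECDHE-RSA-AES256-GCM-SHA384",
})
APPROVED_PROTOCOLS = frozenset({"TLSv1.2", "TLSv1.3"})


def negotiated_is_approved(cipher_info: Optional[Tuple[str, str, int]]) -> bool:
    """Check the tuple returned by ssl.SSLSocket.cipher() for one connection."""
    if cipher_info is None:  # no handshake has completed
        return False
    name, protocol, _bits = cipher_info
    return name in APPROVED_CIPHERS and protocol in APPROVED_PROTOCOLS
```

To enforce rather than merely detect, Python’s `SSLContext.set_ciphers()` can restrict TLS 1.2 suites (for example, `"ECDHE+AESGCM"`), but it does not affect TLS 1.3 suites, so the runtime check remains useful.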

Setting up FIPS for your Kubernetes deployments is a four‑step process:

- Step 1: Configure your operating system for FIPS mode

- Step 2: Verify the operating system and OpenSSL are in FIPS mode

- Step 3: Install the Ingress controller

- Step 4: Verify compliance with FIPS 140-2 by performing a FIPS status check
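Part of Step 2 can be automated. On many Linux distributions (RHEL and its derivatives, for example), the kernel reports FIPS mode in `/proc/sys/crypto/fips_enabled`, which contains `1` when FIPS mode is on. The minimal Python sketch below reads that flag. It checks only the kernel setting, not whether OpenSSL or NGINX Plus is using a validated module, so treat it as one input to the verification steps rather than a substitute for them.

```python
from pathlib import Path

FIPS_FLAG = Path("/proc/sys/crypto/fips_enabled")


def kernel_fips_enabled(flag_file: Path = FIPS_FLAG) -> bool:
    """True if the kernel reports FIPS mode; False if it is disabled or the
    flag file is absent (e.g., non-Linux hosts or kernels without the setting)."""
    try:
        return flag_file.read_text().strip() == "1"
    except OSError:
        return False
```

A check like this can run as a startup or readiness probe on each node, so a host that silently boots without FIPS mode is caught before it serves traffic.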

In the example below, when FIPS mode is enabled for both the operating system and the OpenSSL implementation used by NGINX Plus, all end‑user traffic to and from NGINX Plus is decrypted and encrypted using a validated, FIPS‑enabled crypto engine.

Read more about FIPS in Achieving FIPS Compliance with NGINX Plus.

Make Kubernetes More Secure with NGINX

If you’re ready to implement some (or all) of these security methods, you need to make sure your tools have the right features and capabilities to support your use cases. NGINX can help with our suite of production‑ready Kubernetes traffic management tools:

- NGINX Ingress Controller – NGINX Plus-based Ingress controller for Kubernetes that handles advanced traffic control and shaping, monitoring and visibility, authentication and SSO, and acts as an API gateway.

- F5 NGINX App Protect – Holistic protection for modern apps and APIs, built on F5’s market‑leading security technologies, that integrates with NGINX Ingress Controller and NGINX Plus. Use a modular approach for flexibility in deployment scenarios and optimal resource utilization:

  - NGINX App Protect WAF – A strong, lightweight WAF that protects against OWASP Top 10 and beyond with PCI DSS compliance.

  - NGINX App Protect DoS – Behavioral DoS detection and mitigation with consistent and adaptive protection across clouds and architectures.

- F5 NGINX Service Mesh – Lightweight, turnkey, and developer‑friendly service mesh featuring NGINX Plus as an enterprise sidecar.

Get started by requesting your free 30-day trial of NGINX Ingress Controller with NGINX App Protect WAF and DoS, and download the always‑free NGINX Service Mesh.