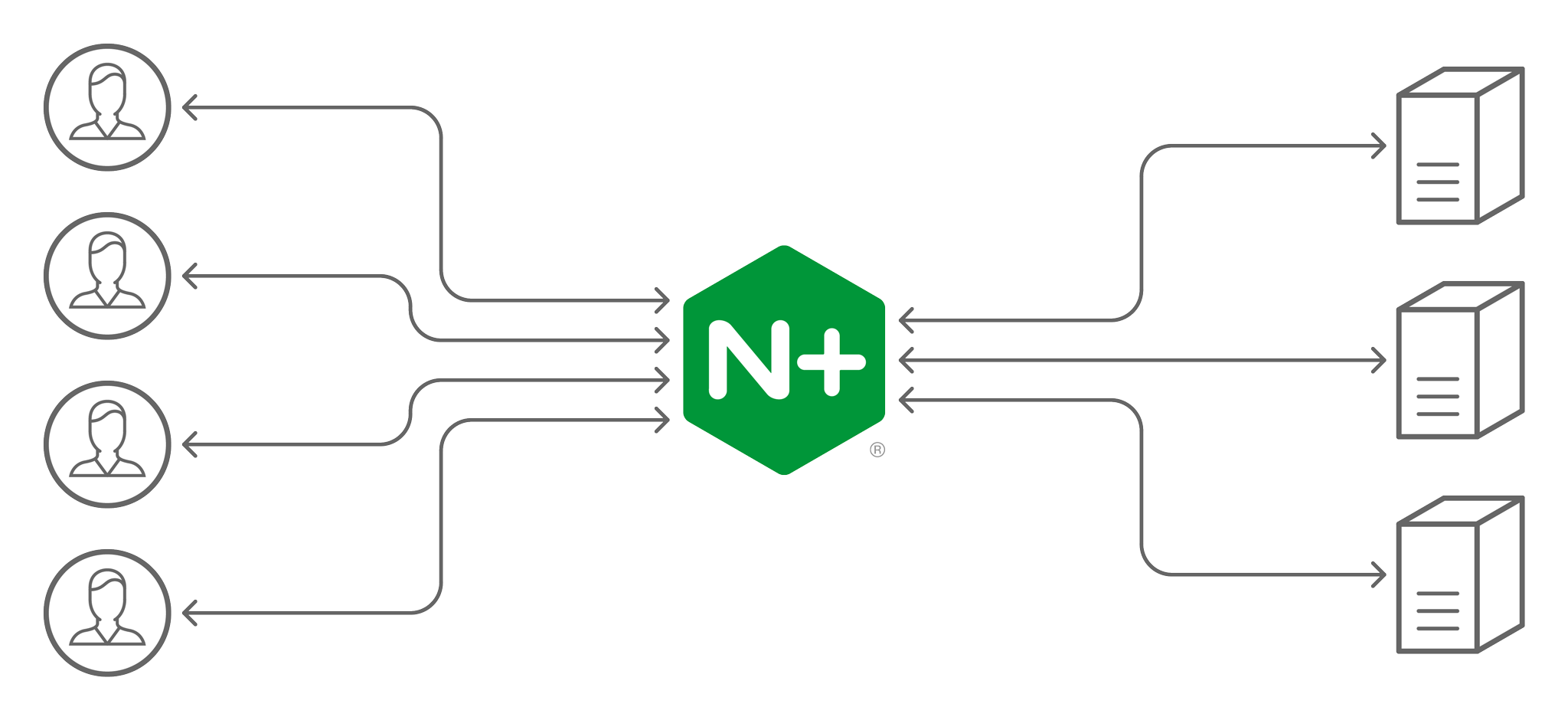

Balancing your network traffic across servers, clients, and proxies is key to keeping your customers happy and optimizing your infrastructure. With NGINX Plus high‑performance load balancing, you can scale out and provide redundancy, dynamically reconfigure your infrastructure without the need for a restart, and enable global server load balancing (GSLB), session persistence, and active health checks. NGINX Plus load balances not only HTTP traffic, but also TCP, UDP, and gRPC.

HTTP Load Balancing

When load balancing HTTP traffic, NGINX Plus terminates each HTTP connection and processes each request individually. You can offload SSL/TLS encryption, inspect and manipulate the request, delay (queue) requests that exceed rate limits, and then apply the load‑balancing method to choose an upstream server.

To improve performance, NGINX Plus can automatically apply a wide range of optimizations to an HTTP transaction, including HTTP protocol upgrades, keepalive optimization, and transformations such as content compression and response caching.

Load balancing HTTP traffic is easy with NGINX Plus:

    http {
        upstream my_upstream {
            server server1.example.com;
            server server2.example.com;
        }

        server {
            listen 80;

            location / {
                proxy_set_header Host $host;
                proxy_pass http://my_upstream;
            }
        }
    }

First specify a virtual server using the server directive, and then specify the port to listen for traffic on. Match the URLs of client requests using a location block, set the Host header with the proxy_set_header directive, and include the proxy_pass directive to forward the request to an upstream group. (The upstream block defines the servers across which NGINX Plus load balances traffic.)
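The following Python sketch is an illustrative model of what the configuration above does with each request, not NGINX code. It picks servers from my_upstream in turn (the default round‑robin method with equal weights) and shows why proxy_set_header Host $host matters: without that directive, NGINX sends `Host: $proxy_host` by default, which here would be the upstream name my_upstream. The function make_proxy and its parameters are hypothetical, and $host is simplified to the client's Host header.

```python
from itertools import cycle
from typing import Callable, Dict, List, Tuple

Headers = Dict[str, str]


def make_proxy(servers: List[str], set_host: bool = True,
               proxy_host: str = "my_upstream") -> Callable[[Headers], Tuple[str, Headers]]:
    """Return a function that forwards one request and reports where it went."""
    next_server = cycle(servers)  # round robin; all weights are equal here

    def forward(request_headers: Headers) -> Tuple[str, Headers]:
        upstream_headers = dict(request_headers)
        if set_host:
            # proxy_set_header Host $host; (simplified: keep the client's Host)
            upstream_headers["Host"] = request_headers["Host"]
        else:
            # NGINX default, Host $proxy_host: the name given in proxy_pass
            upstream_headers["Host"] = proxy_host
        return next(next_server), upstream_headers

    return forward


if __name__ == "__main__":
    forward = make_proxy(["server1.example.com", "server2.example.com"])
    for _ in range(3):
        print(forward({"Host": "www.example.com"}))
```

Each call alternates between server1.example.com and server2.example.com while the upstream request keeps `Host: www.example.com`.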

For more information, check out this introduction to load balancing with NGINX and NGINX Plus.

TCP and UDP Load Balancing

NGINX Plus can also load balance TCP applications such as MySQL, and UDP applications such as DNS and RADIUS. For TCP applications, NGINX Plus terminates the TCP connections and creates new connections to the backend.

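    stream {
        upstream my_upstream {
            server server1.example.com:1234;
            server server2.example.com:2345;
        }

        server {
            listen 1123 udp;    # omit the udp parameter to proxy TCP instead
            proxy_pass my_upstream;
        }
    }

The configuration mirrors the HTTP example, but inside a stream block: specify a virtual server with the server directive, listen for traffic on a port, and proxy_pass the traffic to an upstream group. Note that in the stream context, proxy_pass takes the upstream group name directly, without an http:// prefix.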
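To illustrate what terminating the client's TCP connection and opening a new connection to the backend means, here is a toy TCP relay built with Python's asyncio. It is a sketch for intuition, not how NGINX Plus is implemented: the relay accepts the client's connection itself, opens a separate connection to a backend chosen round robin, and copies bytes in both directions. The backends, their names, and the ephemeral ports are hypothetical stand‑ins for server1 and server2.

```python
import asyncio
from itertools import cycle
from typing import List, Tuple


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from one connection into the other until EOF, then close."""
    try:
        while True:
            data = await reader.read(65536)
            if not data:
                break
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass  # the peer went away; just tear down this direction
    finally:
        writer.close()


async def start_proxy(backends: List[Tuple[str, int]]):
    """Listen on an ephemeral port and relay each connection to the next backend."""
    next_backend = cycle(backends)

    async def handle(client_reader, client_writer):
        # The client's TCP connection terminates here, at the proxy, and
        host, port = next(next_backend)
        # a brand-new TCP connection is opened to the chosen backend.
        backend_reader, backend_writer = await asyncio.open_connection(host, port)
        await asyncio.gather(
            pipe(client_reader, backend_writer),
            pipe(backend_reader, client_writer),
        )

    return await asyncio.start_server(handle, "127.0.0.1", 0)


async def start_backend(name: str):
    """Hypothetical backend: answer one line with '<name>: <line>' and hang up."""
    async def handle(reader, writer):
        line = await reader.readline()
        writer.write(name.encode() + b": " + line)
        await writer.drain()
        writer.close()

    return await asyncio.start_server(handle, "127.0.0.1", 0)


async def demo(requests: int = 3) -> List[str]:
    backends = [await start_backend("server1"), await start_backend("server2")]
    addresses = [srv.sockets[0].getsockname()[:2] for srv in backends]
    proxy = await start_proxy(addresses)
    proxy_port = proxy.sockets[0].getsockname()[1]

    replies = []
    for _ in range(requests):
        reader, writer = await asyncio.open_connection("127.0.0.1", proxy_port)
        writer.write(b"hello\n")
        await writer.drain()
        replies.append((await reader.read()).decode())  # read until the proxy closes
        writer.close()
        await writer.wait_closed()

    for srv in [proxy, *backends]:
        srv.close()
        await srv.wait_closed()
    return replies


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Three sequential client connections are answered by server1, server2, and server1 again, even though the client only ever connects to the relay's own port.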

For more information on TCP and UDP load balancing, see the NGINX Plus Admin Guide.

Load‑Balancing Methods

NGINX Plus supports a number of application load‑balancing methods for HTTP, TCP, and UDP load balancing. All methods take into account the weight you can optionally assign to each upstream server.

- Round Robin (the default) – Distributes requests across the upstream servers in order.

- Least Connections – Forwards requests to the server with the lowest number of active connections.

- Least Time – Forwards requests to the least‑loaded server, based on a calculation that combines response time and number of active connections. Exclusive to NGINX Plus.

- Hash – Distributes requests based on a specified key, for example client IP address or request URL. NGINX Plus can optionally apply a consistent hash to minimize redistribution of loads if the set of upstream servers changes.

- IP Hash (HTTP only) – Distributes requests based on the first three octets of the client's IPv4 address, or on the entire IPv6 address.

- Random with Two Choices – Picks two servers at random and forwards the request to the one with the lower number of active connections (the Least Connections method). With NGINX Plus, the Least Time method can also be used.
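The selection rules behind several of these methods are easy to sketch. The Python functions below are simplified models written for illustration, not NGINX's source: smooth weighted round robin (modeled on the approach NGINX's weighted round robin uses), weighted least connections, a plain hash of a key (CRC‑32 modulo the number of servers, an assumption that ignores weights and consistent hashing), the IP Hash key, and Random with Two Choices using uniform sampling. Least Time is omitted because it depends on measured response times.

```python
import ipaddress
import random
import zlib
from typing import Dict, Iterator, List


def smooth_weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Yield servers in proportion to their weights, spread out evenly."""
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    while True:
        for server, weight in weights.items():
            current[server] += weight
        best = max(current, key=current.get)  # ties go to the first server listed
        current[best] -= total
        yield best


def least_connections(active: Dict[str, int], weights: Dict[str, int]) -> str:
    """Pick the server with the fewest active connections relative to its weight."""
    return min(active, key=lambda server: active[server] / weights[server])


def hash_pick(key: str, servers: List[str]) -> str:
    """Map a key (for example a request URL) to a server; same key, same server."""
    return servers[zlib.crc32(key.encode()) % len(servers)]


def ip_hash_key(client_ip: str) -> str:
    """First three octets of an IPv4 address, or the whole IPv6 address."""
    addr = ipaddress.ip_address(client_ip)
    if addr.version == 4:
        return ".".join(str(addr).split(".")[:3])
    return addr.exploded


def random_two_choices(active: Dict[str, int], rng: random.Random) -> str:
    """Sample two distinct servers and keep the one with fewer active connections."""
    first, second = rng.sample(sorted(active), 2)
    return first if active[first] <= active[second] else second


if __name__ == "__main__":
    rr = smooth_weighted_round_robin({"a": 5, "b": 1, "c": 1})
    print([next(rr) for _ in range(7)])
    print(least_connections({"a": 3, "b": 1}, {"a": 4, "b": 1}))
    print(hash_pick(ip_hash_key("192.0.2.10"), ["s1", "s2", "s3"]))
    print(random_two_choices({"a": 0, "b": 9, "c": 4}, random.Random(0)))
```

With weights 5, 1, and 1, the first seven round‑robin picks are a, a, b, a, c, a, a: server a gets five of every seven requests, but never in one long burst. Because IP Hash keys on 192.0.2 for both 192.0.2.10 and 192.0.2.99, those two clients always land on the same server.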

Connection Limiting with NGINX Plus

You can limit the number of connections NGINX Plus establishes with upstream HTTP or TCP servers, or the number of sessions with UDP servers. When the number of connections or sessions with a server reaches the defined limit, NGINX Plus stops establishing new ones with that server.

In the configuration snippet below, the connection limit for webserver1 is 250 and for webserver2 is 150. When no server can accept another connection, the queue directive lets NGINX Plus hold up to 750 requests in a queue, each for up to 30 seconds; if the queue is full or a request's timeout expires, NGINX Plus returns a 502 (Bad Gateway) error to the client. (The queue directive applies to HTTP upstream groups.)

    upstream backend {
        zone backends 64k;
        queue 750 timeout=30s;

        server webserver1 max_conns=250;
        server webserver2 max_conns=150;
    }

When the number of active connections on a server falls below its limit, NGINX Plus passes it the next request from the queue. Limiting connections helps to ensure consistent, predictable servicing of client requests, even during traffic spikes.
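The interplay of max_conns and queue can be modeled in a few lines. This Python class is a simplified, hypothetical model, not NGINX's implementation: it sends each request to the first server under its limit (real NGINX still applies the configured load‑balancing method), queues requests when every server is full, answers 502 when the queue is full, and checks the timeout only when a connection slot frees up.

```python
from collections import deque
from typing import Deque, Dict, List, Optional, Tuple


class UpstreamQueue:
    """Toy model of per-server max_conns plus the upstream queue directive."""

    def __init__(self, max_conns: Dict[str, int], queue_size: int, timeout: float):
        self.max_conns = max_conns
        self.active = {server: 0 for server in max_conns}
        self.queue: Deque[Tuple[int, float]] = deque()  # (request_id, arrival_time)
        self.queue_size = queue_size
        self.timeout = timeout

    def _server_with_capacity(self) -> Optional[str]:
        for server, limit in self.max_conns.items():
            if self.active[server] < limit:
                return server
        return None

    def arrive(self, request_id: int, now: float) -> str:
        """Return the chosen server, "queued", or "502" (queue already full)."""
        server = self._server_with_capacity()
        if server is not None:
            self.active[server] += 1
            return server
        if len(self.queue) < self.queue_size:
            self.queue.append((request_id, now))
            return "queued"
        return "502"

    def finish(self, server: str, now: float) -> Tuple[Optional[int], List[int]]:
        """Free a slot on `server` and give it to the oldest request still in time.

        Returns (request now sent to `server`, or None; requests that timed out).
        """
        self.active[server] -= 1
        timed_out = []
        while self.queue:
            request_id, arrived = self.queue.popleft()
            if now - arrived > self.timeout:
                timed_out.append(request_id)  # this client would get 502 Bad Gateway
                continue
            self.active[server] += 1
            return request_id, timed_out
        return None, timed_out
```

To mirror the snippet above, construct it as `UpstreamQueue({"webserver1": 250, "webserver2": 150}, queue_size=750, timeout=30)`.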

Session Persistence

You can configure NGINX Plus to identify user sessions and send all requests within a session to the same upstream server. This is vital when app servers store state locally: if a request belonging to an in‑progress user session is sent to a different server, that server lacks the session state and the request can fail. Session persistence can also improve performance when applications share information across a cluster.

In addition to the hash‑based session persistence supported by NGINX Open Source (the Hash and IP Hash load‑balancing methods), NGINX Plus supports cookie‑based session persistence, including sticky cookie. NGINX Plus adds a session cookie to the first response from the upstream group to a given client, securely identifying which server generated the response. Subsequent client requests include the cookie, which NGINX Plus uses to route the request to the same upstream server:

    upstream backend {
        server webserver1;
        server webserver2;
        sticky cookie srv_id expires=1h domain=.example.com path=/;
    }

In the example above, NGINX Plus inserts a cookie called srv_id in the initial client response to identify the server that handled the request. When a subsequent request contains the cookie, NGINX Plus forwards it to the same server.
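Here is a sketch of the sticky cookie idea in Python. It is a hypothetical model, not NGINX Plus's implementation: the server addresses are made up, and using an MD5 hash of the server address as the cookie value is an assumption made for illustration. Requests without a recognized srv_id cookie are load balanced normally and receive a Set-Cookie value; requests that carry one go back to the same server.

```python
import hashlib
from http.cookies import SimpleCookie
from itertools import cycle
from typing import Dict, Optional, Tuple


class StickyCookieRouter:
    """Route requests by a srv_id cookie, assigning one on first contact."""

    def __init__(self, servers: Dict[str, str], name: str = "srv_id"):
        self.name = name
        # Assumption: cookie value = MD5 of "ip:port", mapped back to the server.
        self.by_value = {
            hashlib.md5(address.encode(), usedforsecurity=False).hexdigest(): server
            for server, address in servers.items()
        }
        self.value_of = {server: value for value, server in self.by_value.items()}
        self.round_robin = cycle(servers)

    def route(self, cookie_header: str) -> Tuple[str, Optional[str]]:
        """Return (server, Set-Cookie value to send, or None if already sticky)."""
        cookies = SimpleCookie(cookie_header)
        if self.name in cookies and cookies[self.name].value in self.by_value:
            return self.by_value[cookies[self.name].value], None
        server = next(self.round_robin)  # no valid cookie: balance normally
        cookie = SimpleCookie()
        cookie[self.name] = self.value_of[server]
        cookie[self.name]["expires"] = 3600  # expires=1h, as seconds from now
        cookie[self.name]["domain"] = ".example.com"
        cookie[self.name]["path"] = "/"
        return server, cookie[self.name].OutputString()


if __name__ == "__main__":
    router = StickyCookieRouter({"webserver1": "10.0.0.1:80", "webserver2": "10.0.0.2:80"})
    server, set_cookie = router.route("")
    print(server, set_cookie)
    print(router.route(set_cookie.split(";")[0]))
```

A cookie whose value no longer matches any server (for example, after that server is removed) is treated like no cookie at all, so the client is simply rebalanced and issued a new one.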

NGINX Plus also supports sticky learn and sticky route persistence methods.

Note: Cookie‑based session persistence is exclusive to NGINX Plus.

Active Health Checks

By default, NGINX performs passive checks on responses from upstream servers, retrying failed requests where possible. NGINX Plus adds out‑of‑band application health checks (also known as synthetic transactions) and a slow‑start feature to gracefully add new and recovered servers into the load‑balanced group.

These features enable NGINX Plus to detect and work around a much wider variety of problems, significantly improving the reliability of your HTTP and TCP/UDP applications.

    upstream my_upstream {
        zone my_upstream 64k;
        server server1.example.com slow_start=30s;
    }

    server {
        # ...
        location /health {
            internal;
            health_check interval=5s uri=/test.php match=statusok;
            proxy_set_header Host www.example.com;
            proxy_pass http://my_upstream;
        }
    }

    match statusok {
        # Used for /test.php health check
        status 200;
        header Content-Type = text/html;
        body ~ "Server[0-9]+ is alive";
    }

In the example above, NGINX Plus sends a request for /test.php to each server in my_upstream every five seconds. The match block defines the conditions the response must meet for the upstream server to be considered healthy: a status code of 200 OK, a Content-Type header of text/html, and a response body matching the regular expression Server[0-9]+ is alive (for example, Server1 is alive). The slow_start=30s parameter makes NGINX Plus raise a recovered server's weight from zero to its nominal value over 30 seconds instead of sending it a full share of traffic at once.
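The match block and the slow_start parameter can each be expressed as a small function. This Python sketch is illustrative only: it evaluates a response the way the statusok conditions read (with a case‑sensitive header lookup, a simplification), and it assumes the weight grows linearly during slow start. The Response class and function names are hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Response:
    status: int
    headers: Dict[str, str] = field(default_factory=dict)
    body: str = ""


def matches_statusok(resp: Response) -> bool:
    """True if the response meets every condition in `match statusok`."""
    return (
        resp.status == 200                                   # status 200;
        and resp.headers.get("Content-Type") == "text/html"  # header Content-Type = text/html;
        and re.search(r"Server[0-9]+ is alive", resp.body) is not None  # body ~ "...";
    )


def slow_start_weight(nominal: int, seconds_healthy: float, slow_start: float = 30.0) -> float:
    """Effective weight of a recovered server (the linear ramp is an assumption)."""
    if seconds_healthy >= slow_start:
        return float(nominal)
    return nominal * seconds_healthy / slow_start
```

All three conditions must hold: a 200 response that says "Server is alive" (no digits) or carries a different Content-Type still marks the server unhealthy.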

Note: Active health checks are exclusive to NGINX Plus.

Service Discovery Using DNS

By default, NGINX Plus resolves DNS names at startup and caches the resolved values persistently, until the configuration is reloaded. When you use a domain name such as example.com to identify a group of upstream servers with the server directive and the resolve parameter, NGINX Plus instead re‑resolves the domain name periodically. If the associated list of IP addresses has changed, NGINX Plus immediately begins load balancing across the updated group of servers.

To configure NGINX Plus to use DNS SRV records, include the resolver directive, and add the service=http and resolve parameters to the server directive in an upstream group that has a shared memory zone, as shown:

    resolver 127.0.0.11 valid=10s;

    upstream service1 {
        zone service1 64k;
        server service1 service=http resolve;
    }

In the example above, NGINX Plus queries 127.0.0.11 (the built‑in Docker DNS server) for the SRV record _http._tcp.service1, and the valid=10s parameter makes it re‑resolve the record every 10 seconds, overriding the TTL in the DNS response.
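The naming rule and the re‑resolution step can be sketched in Python. The functions below are hypothetical helpers, not part of NGINX Plus: srv_query_name follows the documented rule for the service parameter (no dot means an RFC 2782 name with the TCP protocol added), and refresh compares a fresh lookup against the current members, which is the decision NGINX Plus makes each time a cached answer expires. The standard library cannot query SRV records, so resolve_addresses does a plain A/AAAA lookup instead.

```python
import socket
from typing import Callable, Iterable, Set, Tuple


def srv_query_name(hostname: str, service: str) -> str:
    """SRV name looked up for `server <hostname> service=<service> resolve;`."""
    if "." not in service:
        # No dot: build an RFC 2782 name and add the TCP protocol label.
        return f"_{service}._tcp.{hostname}"
    # With dots: the service prefix is joined to the server name as given.
    return f"{service}.{hostname}"


def refresh(current: Set[str],
            resolve: Callable[[], Iterable[str]]) -> Tuple[Set[str], Set[str], Set[str]]:
    """Re-resolve and return (new member set, added members, removed members)."""
    fresh = set(resolve())
    return fresh, fresh - current, current - fresh


def resolve_addresses(hostname: str, port: int) -> Set[str]:
    """A/AAAA lookup through the system resolver, formatted as host:port."""
    found = set()
    for _family, _type, _proto, _canon, sockaddr in socket.getaddrinfo(
        hostname, port, proto=socket.IPPROTO_TCP
    ):
        host = sockaddr[0]
        found.add(f"[{host}]:{port}" if ":" in host else f"{host}:{port}")
    return found
```

Calling refresh every valid interval (10 seconds in the example) and rebalancing over the returned member set approximates the behavior described above.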

Note: Service discovery using DNS is exclusive to NGINX Plus.

NGINX Plus API

NGINX Plus includes a RESTful API with a single API endpoint. Use the NGINX Plus API to update upstream configurations and key‑value stores on the fly with zero downtime.

The following configuration snippet includes the api directive to enable read‑write access to the /api endpoint.

    upstream backend {
        zone backends 64k;
        server 10.10.10.2:220 max_conns=250;
        server 10.10.10.4:220 max_conns=150;
    }

    server {
        listen 80;
        server_name www.example.org;

        location /api {
            api write=on;
        }
    }

With the API enabled as in the example above, you can add a new server to an existing upstream group with the following curl command. It uses the POST method and JSON encoding to set the server's address to 192.168.78.66:80, its weight to 200, and the maximum number of simultaneous connections to 150. (For version, substitute the API's current version number as specified in the reference documentation.)

    $ curl -iX POST -d '{"server":"192.168.78.66:80","weight":200,"max_conns":150}' http://localhost:80/api/version/http/upstreams/backend/servers/

To display the complete configuration of all backend upstream servers in JSON format, run:

    $ curl -s http://localhost:80/api/version/http/upstreams/backend/servers/ | python -m json.tool

    [
        {
            "backup": false,
            "down": false,
            "fail_timeout": "10s",
            "id": 0,
            "max_conns": 250,
            "max_fails": 1,
            "route": "",
            "server": "10.10.10.2:220",
            "slow_start": "0s",
            "weight": 1
        },
        {
            "backup": false,
            "down": false,
            "fail_timeout": "10s",
            "id": 1,
            "max_conns": 150,
            "max_fails": 1,
            "route": "",
            "server": "10.10.10.4:220",
            "slow_start": "0s",
            "weight": 1
        },
        {
            "backup": false,
            "down": false,
            "fail_timeout": "10s",
            "id": 2,
            "max_conns": 150,
            "max_fails": 1,
            "route": "",
            "server": "192.168.78.66:80",
            "slow_start": "0s",
            "weight": 200
        }
    ]

To modify the configuration of an existing upstream server, identify it by its internal ID, which appears in the id field in the output above. The following command uses the PATCH method to reconfigure the server with ID 2, setting its IP address and listening port to 192.168.78.55:80, its weight to 500, and the connection limit to 350.

    $ curl -iX PATCH -d '{"server":"192.168.78.55:80","weight":500,"max_conns":350}' http://localhost:80/api/version/http/upstreams/backend/servers/2

You can access the full Swagger (OpenAPI Specification) documentation of the NGINX Plus API at https://demo.nginx.com/swagger-ui/.
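The same calls can be scripted. This Python sketch uses only the standard library's urllib.request to prepare the POST, GET, and PATCH requests shown above. The helper names are hypothetical, and the version argument stands in for the API version number from the reference documentation. Building a request does not contact the server; only call() sends it.

```python
import json
import urllib.request
from typing import Any, Optional


def servers_url(base: str, version: str, upstream: str,
                server_id: Optional[int] = None) -> str:
    """URL of an upstream's server list, or of one server when server_id is given."""
    url = f"{base}/{version}/http/upstreams/{upstream}/servers/"
    return url if server_id is None else f"{url}{server_id}"


def build_request(method: str, url: str,
                  payload: Optional[dict] = None) -> urllib.request.Request:
    """Prepare (but do not send) a request with an optional JSON body."""
    data = None if payload is None else json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(url, data=data, method=method)
    if data is not None:
        request.add_header("Content-Type", "application/json")
    return request


def call(request: urllib.request.Request) -> Any:
    """Send the request and decode the JSON reply, if there is one."""
    with urllib.request.urlopen(request) as response:
        body = response.read()
    return json.loads(body) if body else None
```

For example, with base set to http://localhost:80/api, `call(build_request("POST", servers_url(base, version, "backend"), {"server": "192.168.78.66:80", "weight": 200, "max_conns": 150}))` adds the server, and the same helpers with "GET" or with "PATCH" and a server ID reproduce the other two commands. Note that urlopen raises urllib.error.HTTPError for 4xx and 5xx replies, so a rejected change surfaces as an exception.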

Note: The NGINX Plus API is exclusive to NGINX Plus.