Enterprise-Class Availability, Security, and Visibility for Apps

Deployed at the central point of entry into a Kubernetes cluster, NGINX Ingress Controller reduces complexity, increases uptime, and provides better insights into app health and performance at scale.

Why Use NGINX Ingress Controller?

Simplify Operations

NGINX Ingress Controller reduces tool sprawl through technology consolidation:

- A universal Kubernetes-native tool for implementing API gateways, load balancers, and Ingress controllers at the edge of a Kubernetes cluster

- The same data and control planes across any hybrid, multi-cloud environment

- The freedom to focus on core business functionality by offloading security and other non-functional requirements to the platform layer

Deliver Apps Without Disruption

NGINX Ingress Controller ensures availability of business-critical apps at all times with:

- Layer 7 (HTTP/HTTPS, HTTP/2, gRPC, WebSocket) and Layer 4 (TCP/UDP) load balancing with advanced distribution methods and active health checks

- Blue-green and canary deployments to avoid downtime when rolling out a new version of an app

- Dynamic reconfiguration, rate limiting, circuit breaking, and request buffering to prevent connection timeouts and errors during topology changes, extremely high request rates, and service failures
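As a rough illustration of the canary idea mentioned above, the Python sketch below sends a fixed percentage of requests to a new version while keeping each client pinned to one version. It is a conceptual sketch only, not how NGINX Ingress Controller implements traffic splitting; the function names, the `"canary"`/`"stable"` labels, and the hash-based bucketing are all assumptions made for the example.

```python
import hashlib


def pick_backend(request_id: str, canary_weight: int) -> str:
    """Return "canary" or "stable" for a request key (hypothetical helper).

    canary_weight is the percentage (0-100) of traffic meant for the new version.
    """
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    # Hash the request key so the same client always lands on the same version.
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_weight else "stable"


def canary_share(canary_weight: int, n: int = 10000) -> float:
    """Measure the fraction of n synthetic clients routed to the canary."""
    hits = sum(
        pick_backend(f"client-{i}", canary_weight) == "canary" for i in range(n)
    )
    return hits / n
```

Raising `canary_weight` in small steps (for example 5, 25, 50, 100) shifts traffic gradually to the new version, and setting it back to 0 returns all traffic to the stable version.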

Mitigate Cybersecurity Threats

Integrate strong security controls at the edge of a Kubernetes cluster with NGINX Ingress Controller:

- Manage user and service identities and their authorized access and actions with HTTP Basic authentication, JSON Web Tokens (JWTs), OpenID Connect (OIDC), and role-based access control (RBAC)

- Secure incoming and outgoing communications through end-to-end encryption (SSL/TLS passthrough, TLS termination)

- Protect apps from the OWASP Top 10 and Layer 7 DoS attacks through integration with optional NGINX App Protect modules

Gain Better Insight

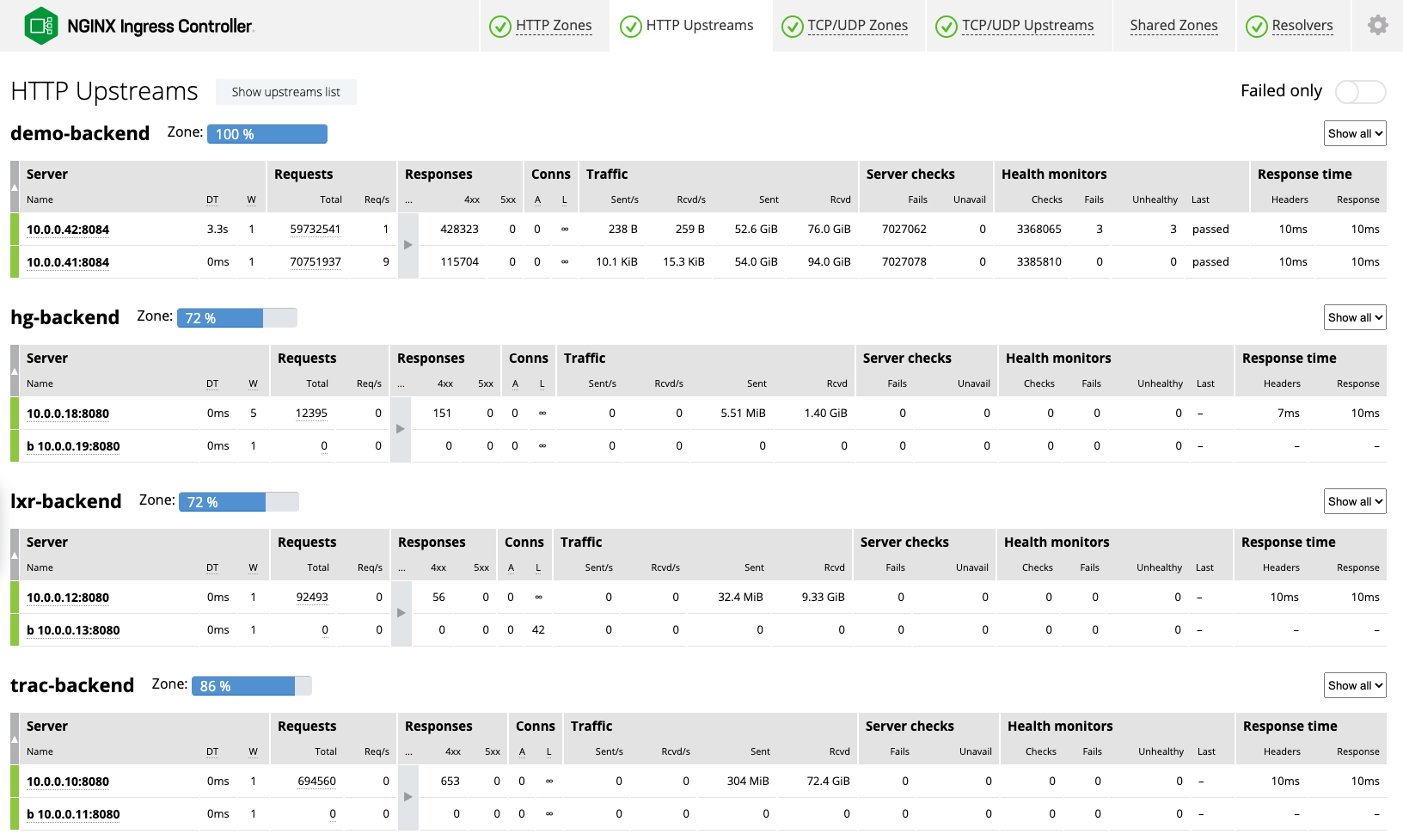

Achieve better visibility into app health and performance with more than 200 granular metrics monitored and reported by NGINX Ingress Controller:

- Reduce outages and downtime by discovering problems before they impact your customers

- Simplify troubleshooting by finding the root cause of app issues quickly

- Collect, monitor, and analyze your data through prebuilt integrations with your favorite ecosystem tools, including OpenTelemetry, Grafana, Prometheus, and Jaeger

Speed Up Releases

Deliver apps faster and more easily with NGINX Ingress Controller:

- Focus on implementing core business expertise and functionality within the app

- Offload security and other non-functional requirements to the platform layer

- Enable self-service governance across multiple platform and development teams

Deploy on Any Kubernetes Platform, Including:

- Amazon Elastic Kubernetes Service (EKS)

- Microsoft Azure Kubernetes Service (AKS)

- Google Kubernetes Engine (GKE)

- Red Hat OpenShift

- SUSE Rancher

- VMware Tanzu

- Any other Kubernetes-conformant platform

Learn More About NGINX Ingress Controller

Blog

How Do I Choose? API Gateway vs. Ingress Controller vs. Service Mesh

When you need an API gateway in Kubernetes, how do you choose among API gateway vs. Ingress controller vs. service mesh? We guide you through the decision, with sample scenarios for...

Blog

A Guide to Choosing an Ingress Controller, Part 4: NGINX Ingress Controller Options

Learn which NGINX Ingress controller is best for you, based on authorship, development philosophy, production readiness, security, and support.

Ebook

Managing Kubernetes Traffic with F5 NGINX: A Practical Guide

Learn how to manage Kubernetes traffic with F5 NGINX Ingress Controller and F5 NGINX Service Mesh and solve the complex challenges of running Kubernetes in production.