[Editor – This post is an extract from our comprehensive eBook, Managing Kubernetes Traffic with F5 NGINX: A Practical Guide. Download it for free today.]

As organizations scale up, development and operational workflows in Kubernetes get more complex. It’s generally more cost‑effective – and can be more secure – for teams to share Kubernetes clusters and resources, rather than each team getting its own cluster. But your deployments can suffer serious damage if teams don’t share those resources safely and securely, or if attackers exploit your configurations.

Multi‑tenancy practices and namespace isolation at the network and resource level help teams share Kubernetes resources safely. You can also significantly reduce the magnitude of breaches by isolating applications on a per‑tenant basis. This method helps boost resiliency because only subsections of applications owned by specific teams can be compromised, while systems providing other functionalities remain intact.

NGINX Ingress Controller supports multiple multi‑tenancy models, but we see two primary patterns. The infrastructure service provider pattern typically includes multiple NGINX Ingress Controller deployments with physical isolation, while the enterprise pattern typically uses a shared NGINX Ingress Controller deployment with namespace isolation. In this section we explore the enterprise pattern in depth; for information about running multiple NGINX Ingress Controllers see our documentation.

Delegation with NGINX Ingress Controller

NGINX Ingress Controller supports both the standard Kubernetes Ingress resource and custom NGINX Ingress resources, which enable both more sophisticated traffic management and delegation of control over configuration to multiple teams. The custom resources are VirtualServer, VirtualServerRoute, GlobalConfiguration, TransportServer, and Policy.

With NGINX Ingress Controller, cluster administrators use VirtualServer resources to provision Ingress domain (hostname) rules that route external traffic to backend applications, and VirtualServerRoute resources to delegate management of specific URLs to application owners and DevOps teams.

There are two models you can choose from when implementing multi‑tenancy in your Kubernetes cluster: full self‑service and restricted self‑service.

Implementing Full Self-Service

In a full self‑service model, administrators are not involved in day-to-day changes to NGINX Ingress Controller configuration. They are responsible only for deploying NGINX Ingress Controller and the Kubernetes Service which exposes the deployment externally. Developers then deploy applications within an assigned namespace without involving the administrator. Developers are responsible for managing TLS secrets, defining load‑balancing configuration for domain names, and exposing their applications by creating VirtualServer or standard Ingress resources.

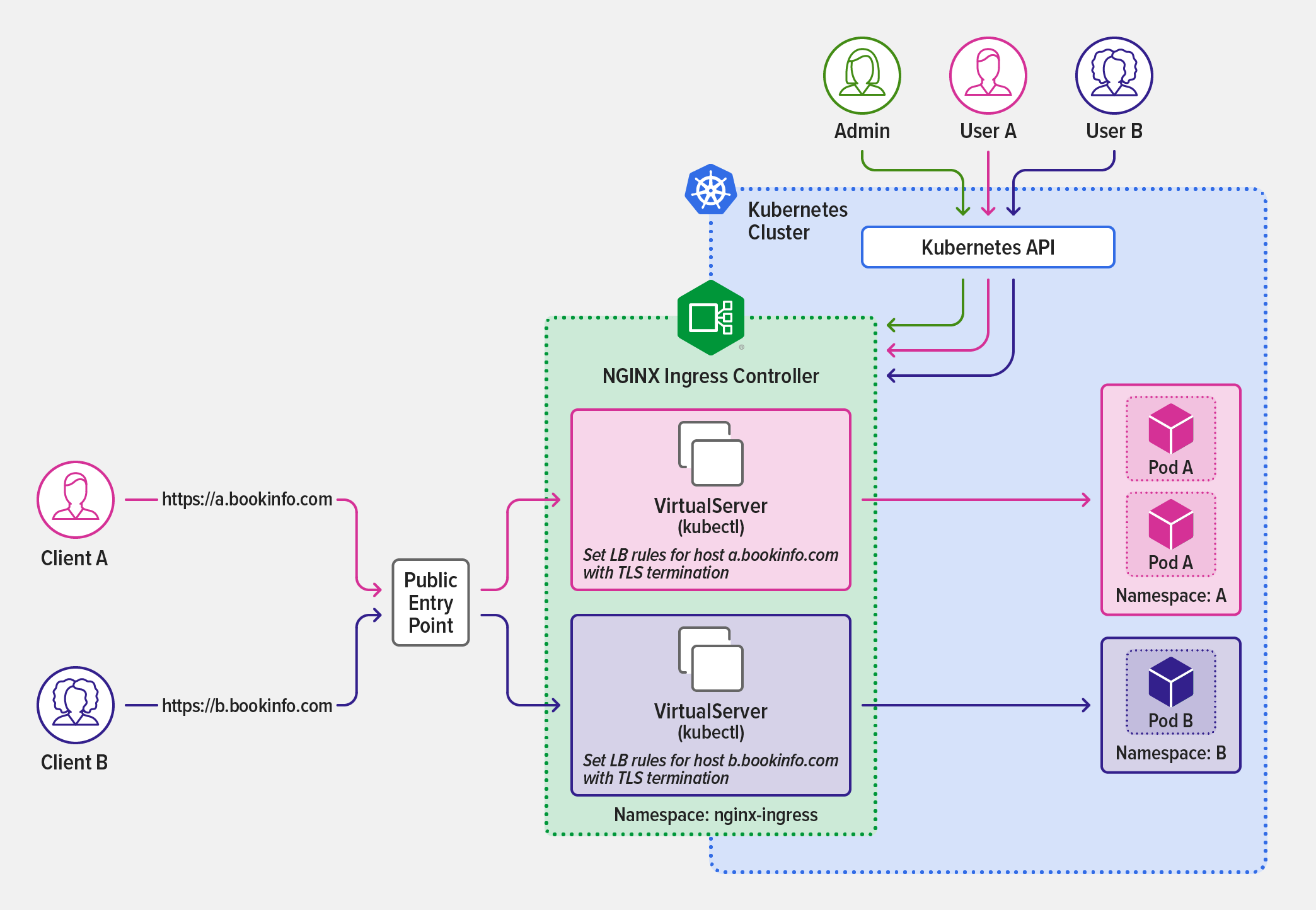

To illustrate this model, we replicate the sample bookinfo application (originally created by Istio) with two subdomains, a.bookinfo.com and b.bookinfo.com, as depicted in the diagram above. Once the administrator installs and deploys NGINX Ingress Controller in the nginx-ingress namespace (highlighted in green), teams DevA (pink) and DevB (purple) create their own VirtualServer resources and deploy applications isolated within their namespaces (A and B respectively).

Teams DevA and DevB set Ingress rules for their domains to route external connections to their applications.

Team DevA applies a VirtualServer resource to expose applications for the a.bookinfo.com domain in the A namespace.
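As a minimal sketch, DevA's resource might look like the following, assuming the `k8s.nginx.org/v1` version of the VirtualServer custom resource. The resource name, the Service name `productpage-a`, and port 9080 are illustrative assumptions. The namespace is written in lowercase (`a`) because Kubernetes requires lowercase namespace names.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: bookinfo            # hypothetical resource name
  namespace: a              # DevA's namespace (lowercase is required)
spec:
  host: a.bookinfo.com      # domain owned by team DevA
  upstreams:
  - name: productpage-a     # upstream label used by the routes below
    service: productpage-a  # assumed Service name in namespace a
    port: 9080              # assumed Service port
  routes:
  - path: /                 # send all requests for the host to the upstream
    action:
      pass: productpage-a
```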

Similarly, team DevB applies a VirtualServer resource to expose applications for the b.bookinfo.com domain in the B namespace.
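A comparable sketch for DevB differs only in the namespace, hostname, and backend. As before, the resource name, the Service name `productpage-b`, and port 9080 are assumptions made for illustration, and the namespace is lowercase (`b`) to satisfy Kubernetes naming rules.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: bookinfo            # hypothetical resource name
  namespace: b              # DevB's namespace (lowercase is required)
spec:
  host: b.bookinfo.com      # domain owned by team DevB
  upstreams:
  - name: productpage-b     # upstream label used by the routes below
    service: productpage-b  # assumed Service name in namespace b
    port: 9080              # assumed Service port
  routes:
  - path: /                 # send all requests for the host to the upstream
    action:
      pass: productpage-b
```

Because each team owns a distinct hostname and works only in its own namespace, neither resource needs to reference the other.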

Implementing Restricted Self-Service

In a restricted self‑service model, administrators configure VirtualServer resources to route traffic entering the cluster to the appropriate namespace, but delegate configuration of the applications in the namespaces to the responsible development teams. Each such team is responsible only for its application subroute as instantiated in the VirtualServer resource and uses VirtualServerRoute resources to define traffic rules and expose application subroutes within its namespace.

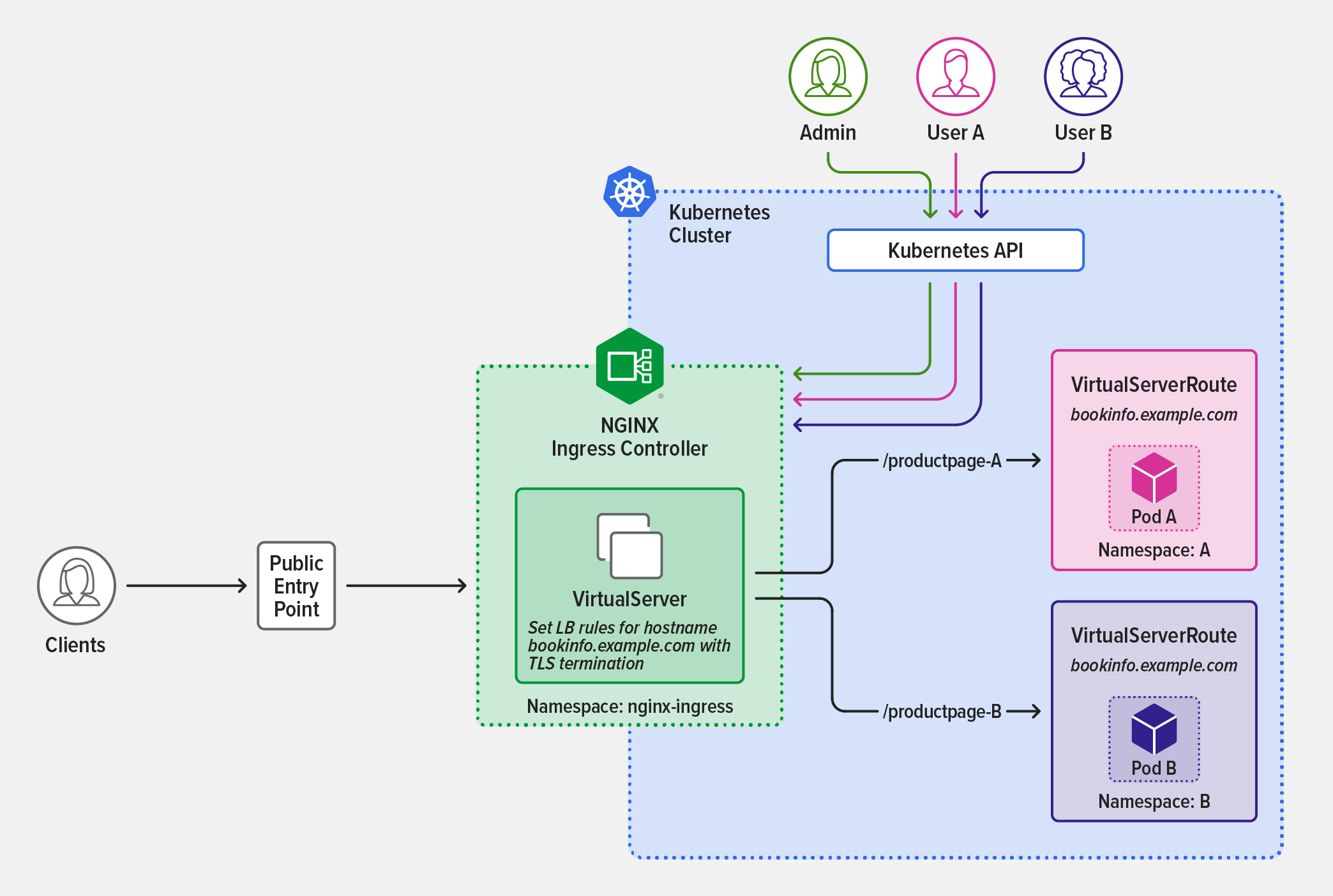

As illustrated in the diagram, the cluster administrator installs and deploys NGINX Ingress Controller in the nginx-ingress namespace (highlighted in green), and defines a VirtualServer resource that sets path‑based rules referring to VirtualServerRoute resource definitions.

The VirtualServer resource definition sets two path‑based rules, each referring to a VirtualServerRoute resource definition for one of two subroutes, /productpage-A and /productpage-B.
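A minimal sketch of such an administrator-owned resource is shown here. The hostname `bookinfo.example.com`, the resource name, and the VirtualServerRoute name `ingress` are assumptions. The `route` field takes the form `namespace/name`, and the namespaces are lowercase (`a`, `b`) as Kubernetes requires.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: bookinfo               # hypothetical resource name
  namespace: nginx-ingress     # owned by the cluster administrator
spec:
  host: bookinfo.example.com   # assumed hostname for the shared entry point
  routes:
  - path: /productpage-A
    route: a/ingress           # delegate to VirtualServerRoute "ingress" in namespace a
  - path: /productpage-B
    route: b/ingress           # delegate to VirtualServerRoute "ingress" in namespace b
```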

The developer teams responsible for the apps in namespaces A and B then define VirtualServerRoute resources to expose application subroutes within their namespaces. The teams are isolated by namespace and restricted to deploying application subroutes set by VirtualServer resources provisioned by the administrator:

- Team DevA (pink in the diagram) applies a VirtualServerRoute resource to expose the application subroute rule set by the administrator for /productpage-A.

- Team DevB (purple) applies a VirtualServerRoute resource to expose the application subroute rule set by the administrator for /productpage-B.
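The two team-owned resources can be sketched as follows. This assumes the administrator's VirtualServer uses the hostname `bookinfo.example.com` and delegates to a VirtualServerRoute named `ingress` in each namespace. Those names, the Service names, and port 9080 are illustrative. The `host` in each VirtualServerRoute must match the host of the VirtualServer that references it.

```yaml
# Team DevA: subroute for /productpage-A
apiVersion: k8s.nginx.org/v1
kind: VirtualServerRoute
metadata:
  name: ingress                # assumed name referenced by the administrator
  namespace: a
spec:
  host: bookinfo.example.com   # must match the VirtualServer host
  upstreams:
  - name: productpage-a
    service: productpage-a     # assumed Service name in namespace a
    port: 9080                 # assumed Service port
  subroutes:
  - path: /productpage-A       # must fall under the path delegated by the administrator
    action:
      pass: productpage-a
---
# Team DevB: subroute for /productpage-B
apiVersion: k8s.nginx.org/v1
kind: VirtualServerRoute
metadata:
  name: ingress                # assumed name referenced by the administrator
  namespace: b
spec:
  host: bookinfo.example.com   # must match the VirtualServer host
  upstreams:
  - name: productpage-b
    service: productpage-b     # assumed Service name in namespace b
    port: 9080                 # assumed Service port
  subroutes:
  - path: /productpage-B
    action:
      pass: productpage-b
```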

For more information about features you can configure in VirtualServer and VirtualServerRoute resources, see the NGINX Ingress Controller documentation.

Note: You can use mergeable Ingress types to configure cross‑namespace routing, but in a restricted self‑service delegation model that approach has three downsides compared to VirtualServer and VirtualServerRoute resources:

- It is less secure.

- As your Kubernetes deployment grows larger and more complex, it becomes increasingly prone to accidental modifications, because mergeable Ingress types do not prevent developers from setting Ingress rules for hostnames within their namespace.

- Unlike VirtualServer and VirtualServerRoute resources, mergeable Ingress types don’t enable the primary (“master”) Ingress resource to control the paths of “minion” Ingress resources.
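For comparison, mergeable Ingress types are selected with the `nginx.org/mergeable-ingress-type` annotation. The sketch below assumes an Ingress class named `nginx`; the hostname, resource names, namespaces, and Service are hypothetical. Note that nothing in the master resource lists or limits the paths a minion may claim.

```yaml
# Master: defines the host, but has no say over minion paths
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: bookinfo-master          # hypothetical name
  namespace: nginx-ingress
  annotations:
    nginx.org/mergeable-ingress-type: "master"
spec:
  ingressClassName: nginx        # assumed Ingress class name
  rules:
  - host: bookinfo.example.com   # assumed hostname
---
# Minion: a team attaches its own path to the same host
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: bookinfo-minion-a        # hypothetical name
  namespace: a
  annotations:
    nginx.org/mergeable-ingress-type: "minion"
spec:
  ingressClassName: nginx
  rules:
  - host: bookinfo.example.com
    http:
      paths:
      - path: /productpage-A
        pathType: Prefix
        backend:
          service:
            name: productpage-a  # assumed Service name
            port:
              number: 9080       # assumed Service port
```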

Leveraging Kubernetes RBAC in a Restricted Self-Service Model

You can use Kubernetes role‑based access control (RBAC) to regulate a user’s access to namespaces and NGINX Ingress resources based on the roles assigned to the user.

For instance, in a restricted self‑service model, only administrators with special privileges can safely be allowed to access VirtualServer resources. Because those resources define the entry point to the Kubernetes cluster, misuse can lead to system‑wide outages.

Developers use VirtualServerRoute resources to configure Ingress rules for the application routes they own, so administrators set RBAC policies that allow developers to create only those resources. They can even restrict that permission to specific namespaces if they need to regulate developer access even further.
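One way to express such a policy is a namespaced Role and RoleBinding, sketched here. The Role name, the namespace `a`, and the group `dev-a` are hypothetical, and the verb list is one reasonable choice rather than a required set. The NGINX custom resources belong to the `k8s.nginx.org` API group.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: virtualserverroute-editor   # hypothetical Role name
  namespace: a                      # permission applies only in this namespace
rules:
- apiGroups: ["k8s.nginx.org"]
  resources: ["virtualserverroutes"] # VirtualServer is deliberately not listed
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: dev-a-virtualserverroute-editor
  namespace: a
subjects:
- kind: Group
  name: dev-a                       # hypothetical group for team DevA
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: virtualserverroute-editor
  apiGroup: rbac.authorization.k8s.io
```

Because RBAC is additive, members of `dev-a` cannot create or modify VirtualServer resources unless some other binding grants that access.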

In a full self‑service model, developers can safely be granted access to VirtualServer resources, but again the administrator might restrict that permission to specific namespaces.

For more information on RBAC authorization, see the Kubernetes documentation.

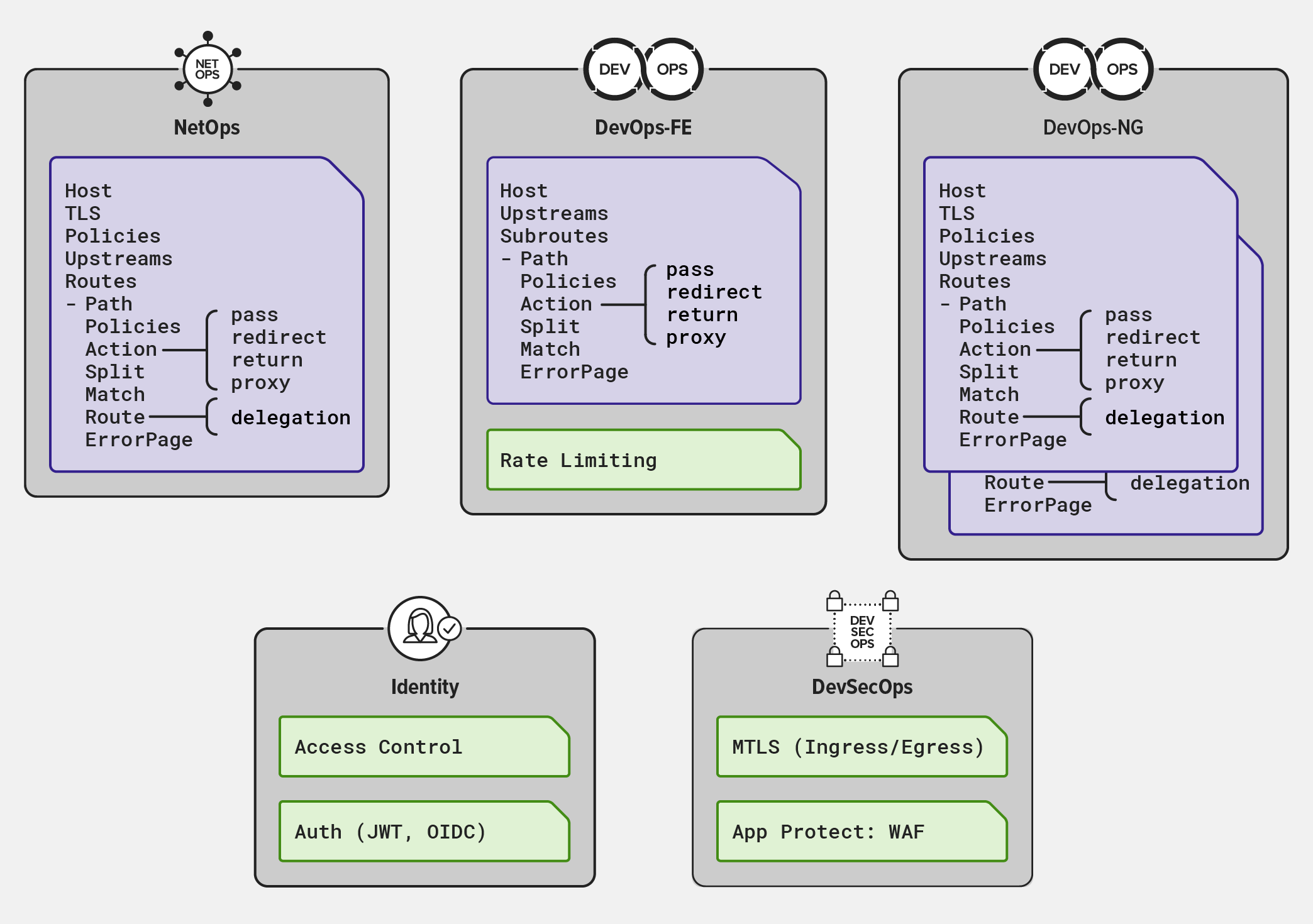

Adding Policies

NGINX Policy resources are another tool for enabling distributed teams to configure Kubernetes in multi‑tenancy deployments. Policy resources enable functionalities like authentication using OAuth and OpenID Connect (OIDC), rate limiting, and web application firewall (WAF). Policy resources are referenced in VirtualServer and VirtualServerRoute resources to take effect in the Ingress configuration.

For instance, a team in charge of identity management in a cluster can define JSON Web Token (JWT) or OIDC policies, such as an OIDC policy that uses Okta as the identity provider (IdP).
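A hedged sketch of an Okta-backed OIDC policy follows. The policy name, the `identity` namespace, the Secret name, and the placeholder values in angle brackets are assumptions to be replaced with real values. The endpoint paths follow Okta's org authorization server layout and may differ if you use a custom authorization server. OIDC policies typically require the NGINX Plus-based edition and additional setup not shown here.

```yaml
apiVersion: k8s.nginx.org/v1
kind: Policy
metadata:
  name: okta-oidc-policy            # hypothetical policy name
  namespace: identity               # hypothetical namespace owned by the identity team
spec:
  oidc:
    clientID: <client-id>           # placeholder: client ID issued by Okta
    clientSecret: okta-oidc-secret  # assumed name of a Secret holding the client secret
    authEndpoint: https://<your-okta-domain>/oauth2/v1/authorize
    tokenEndpoint: https://<your-okta-domain>/oauth2/v1/token
    jwksURI: https://<your-okta-domain>/oauth2/v1/keys
```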

NetOps and DevOps teams can then reference those policies in their VirtualServer or VirtualServerRoute resources.
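As an illustration, a VirtualServer might attach a policy to a route as sketched below. This assumes a Policy named `okta-oidc-policy` exists in a namespace called `identity`; those names, the hostname, and the backend Service are hypothetical.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: bookinfo                # hypothetical resource name
  namespace: a
spec:
  host: bookinfo.example.com    # assumed hostname
  upstreams:
  - name: productpage-a
    service: productpage-a      # assumed Service name
    port: 9080                  # assumed Service port
  routes:
  - path: /
    policies:
    - name: okta-oidc-policy    # assumed Policy name
      namespace: identity       # omit if the Policy is in the same namespace
    action:
      pass: productpage-a
```

Placing `policies` under a single route scopes the policy to that path; listing it under `spec` instead would apply it to every route of the VirtualServer.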

Together, the NGINX Policy, VirtualServer, and VirtualServerRoute resources enable distributed configuration architectures, where administrators can easily delegate configuration to other teams. Teams can assemble modules across namespaces and configure NGINX Ingress Controller for sophisticated use cases in a secure, scalable, and manageable fashion.

For more information about Policy resources, see the NGINX Ingress Controller documentation.


Try the NGINX Ingress Controller based on NGINX Plus for yourself in a 30-day free trial today or contact us to discuss your use cases.