The first mention of QUIC and HTTP/3 on the NGINX blog was four years ago (!), and like you we’re now eagerly looking forward to the imminent merging of our QUIC implementation into the NGINX Open Source mainline branch. Given the long gestation, it’s understandable if you haven’t QUIC much thought.

At this point, however, as a developer or site administrator you need to be aware of how QUIC shifts responsibility for some networking details from the operating system to NGINX (and all HTTP apps). Even if networking is not your bag, adopting QUIC means that worrying about the network is now (at least a little bit) part of your job.

In this post, we dive into key networking and encryption concepts used in QUIC, simplifying some details and omitting non‑essential information in pursuit of clarity. While some nuance might be lost in the process, our intention is to provide enough information for you to effectively adopt QUIC in your environment, or at least a foundation on which to build your knowledge.

If QUIC is entirely new to you, we recommend that you first read one of our earlier posts and watch our overview video.

For a more detailed and complete explanation of QUIC, we recommend the excellent Manageability of the QUIC Transport Protocol document from the IETC QUIC working group, along with the additional materials linked throughout this document.

Why Should You Care About Networking and Encryption in QUIC?

The grimy details of network connection between clients and NGINX have not been particularly relevant for most users up to now. After all, with HTTP/1.x and HTTP/2 the operating system takes care of setting up the Transmission Control Protocol (TCP) connection between clients and NGINX. NGINX simply uses the connection once it’s established.

With QUIC, however, responsibility for connection creation, validation, and management shifts from the underlying operating system to NGINX. Instead of receiving an established TCP connection, NGINX now gets a stream of User Datagram Protocol (UDP) datagrams, which it must parse into client connections and streams. NGINX is also now responsible for dealing with packet loss, connection restarts, and congestion control.

Further, QUIC combines connection initiation, version negotiation, and encryption key exchange into a single connection‑establishment operation. And although TLS encryption is handled in a broadly similar way for both QUIC+HTTP/3 and TCP+HTTP/1+2, there are differences that might be significant to downstream devices like Layer 4 load balancers, firewalls, and security appliances.

Ultimately, the overall effect of these changes is a more secure, faster, and more reliable experience for users, with very little change to NGINX configuration or operations. NGINX administrators, however, need to understand at least a little of what’s going on with QUIC and NGINX, if only to keep their mean time to innocence as short as possible in the event of issues.

(It’s worth noting that while this post focuses on HTTP operations because HTTP/3 requires QUIC, QUIC can be used for other protocols as well. A good example is DNS over QUIC, as defined in RFC 9250, DNS over Dedicated QUIC Connections.)

With that introduction out of the way, let’s dive into some QUIC networking specifics.

TCP versus UDP

QUIC introduces a significant change to the underlying network protocol used to transmit HTTP application data between a client and server.

As mentioned, TCP has always been the protocol for transmitting HTTP web application data. TCP is designed to deliver data reliably over an IP network. It has a well‑defined and understood mechanism for establishing connections and acknowledging receipt of data, along with a variety of algorithms and techniques for managing the packet loss and delay that are common on unreliable and congested networks.

While TCP provides reliable transport, there are trade‑offs in terms of performance and latency. In addition, data encryption is not built into TCP and must be implemented separately. It has also been difficult to improve or extend TCP in the face of changing HTTP traffic patterns – because TCP processing is performed in the Linux kernel, any changes must be designed and tested carefully to avoid unanticipated effects on overall system performance and stability.

Another issue is that in many scenarios, HTTP traffic between client and server passes through multiple TCP processing devices, like firewalls or load balancers (collectively known as “middleboxes”), which may be slow to implement changes to TCP standards.

QUIC instead uses UDP as the transport protocol. UDP is designed to transmit data across an IP network like TCP, but it intentionally disposes of connection establishment and reliable delivery. This lack of overhead makes UDP suitable for a lot of applications where efficiency and speed are more important than reliability.

For most web applications, however, reliable data delivery is essential. Since the underlying UDP transport layer does not provide reliable data delivery, these functions need to be provided by QUIC (or the application itself). Fortunately, QUIC has a couple advantages over TCP in this regard:

- QUIC processing is performed in Linux user space, where problems with a particular operation pose less risk to the overall system. This makes rapid development of new features more feasible.

- The “middleboxes” mentioned above generally do minimal processing of UDP traffic, and so do not constrain enhancements to the QUIC protocol.

A Simplified QUIC Network Anatomy

QUIC streams are the logical objects containing HTTP/3 requests or responses (or any other application data). For transmission between network endpoints, they are wrapped inside multiple logical layers as depicted in the diagram.

Starting from the outside in, the logical layers and objects are:

- UDP Datagram – Contains a header specifying the source and destination ports (along with length and checksum data), followed by one or more QUIC packets. The datagram is the unit of information transmitted from client to server across the network.

- QUIC Packet – Contains one QUIC header and one or more QUIC frames.

-

QUIC Header – Contains metadata about the packet. There are two types of header:

- The long header, used during connection establishment.

- The short header, used after the connection is established. It contains (among other data) the connection ID, packet number, and key phase (used to track which keys were used to encrypt the packet, in support of key rotation). Packet numbers are unique (and always increase) for a particular connection and key phase.

- Frame – Contains the type, stream ID, offset, and stream data. Stream data is spread across multiple frames, but can be assembled using the connection ID, stream ID, and offset, which is used to present the chunks of data in the correct order.

- Stream – A unidirectional or bidirectional flow of data within a single QUIC connection. Each QUIC connection can support multiple independent streams, each with its own stream ID. If a QUIC packet containing some streams is lost, this does not affect the progress of any streams not contained in the missing packet (this is critical to avoiding the head-of-line blocking experienced by HTTP/2). Streams can be bidirectional and created by either endpoint.

Connection Establishment

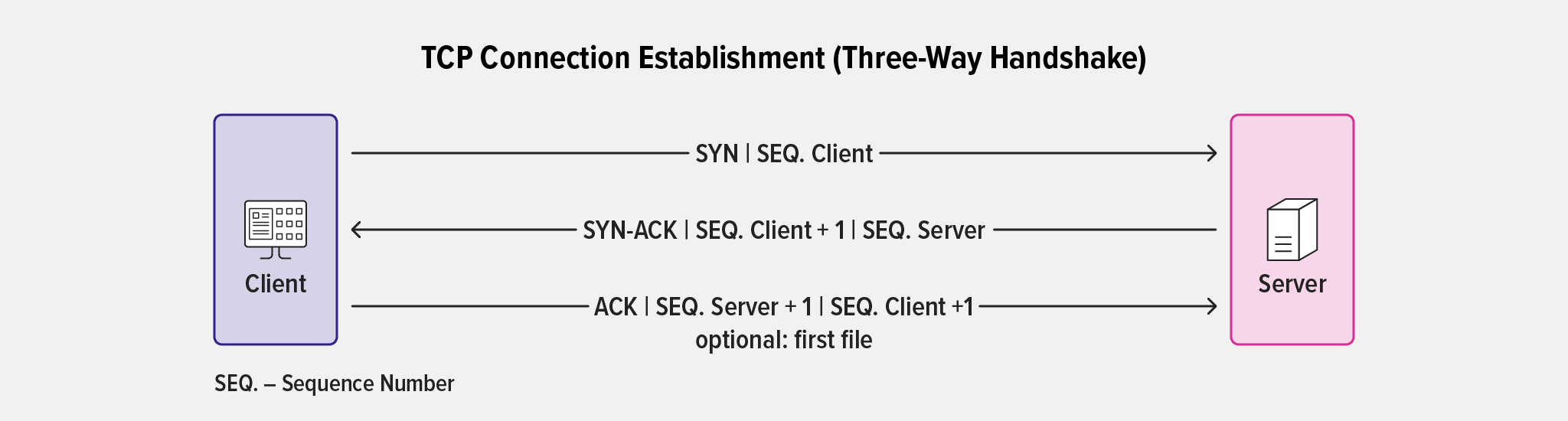

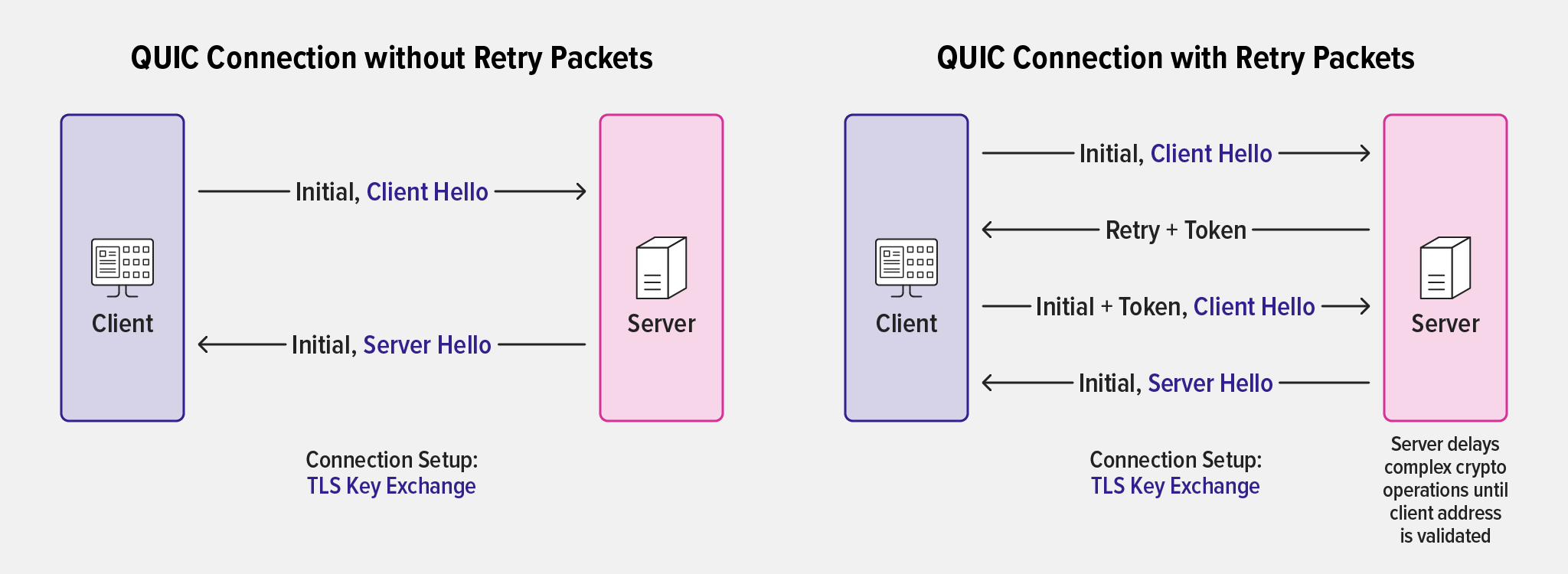

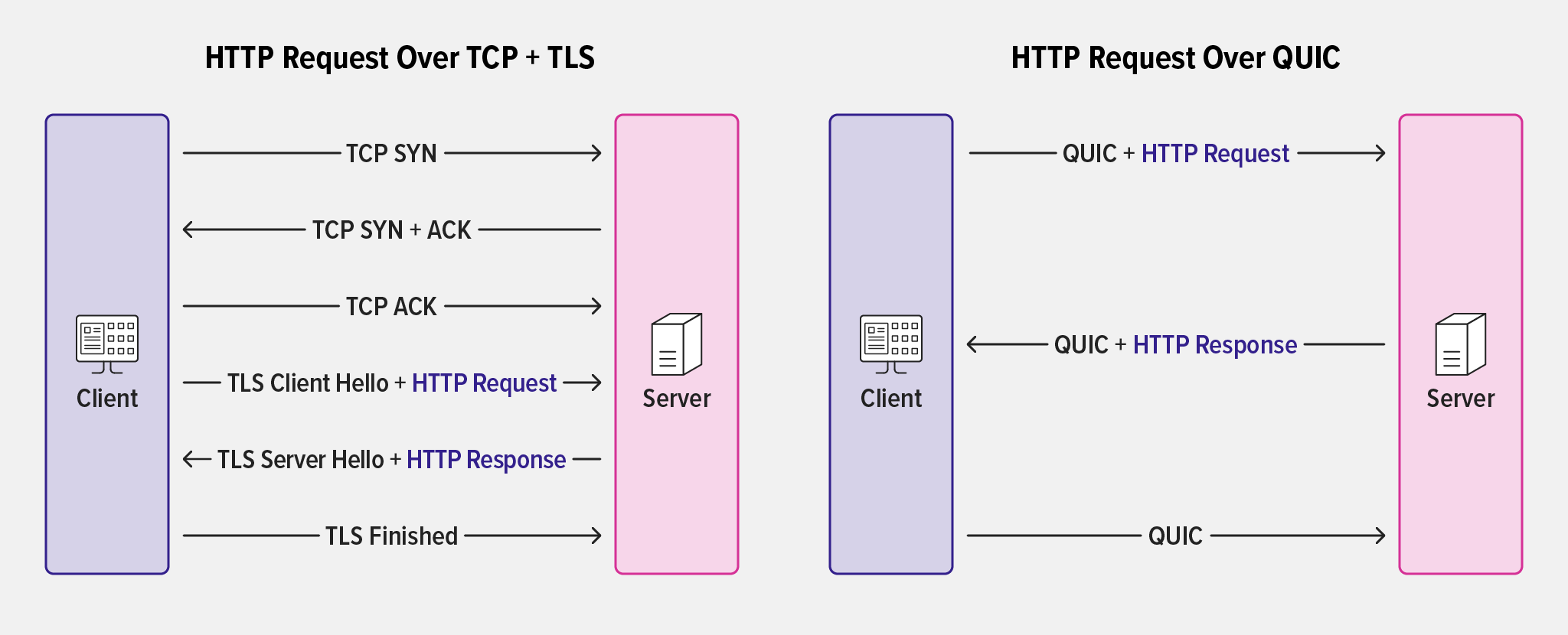

The familiar SYN / SYN-ACK / ACK three‑way handshake establishes a TCP connection:

Establishing a QUIC connection involves similar steps, but is more efficient. It also builds address validation into the connection setup as part of the cryptographic handshake. Address validation defends against traffic amplification attacks, in which a bad actor sends the server a packet with spoofed source address information for the intended attack victim. The attacker hopes the server will generate more or larger packets to the victim than the attacker can generate on its own, resulting in an overwhelming amount of traffic. (For more details, see Section 8 of RFC 9000, QUIC: A UDP‑Based Multiplexed and Secure Transport.)

As part of connection establishment, the client and server provide independent connection IDs which are encoded in the QUIC header, providing a simple identification of the connection, independent of the client source IP address.

However, as the initial establishment of a QUIC connection also includes operations for exchange of TLS encryption keys, it’s more computationally expensive for the server than the simple SYN-ACK response it generates during establishment of a TCP connection. It also creates a potential vector for distributed denial-of-service (DDoS) attacks, because the client IP address is not validated before the key‑exchange operations take place.

But you can configure NGINX to validate the client IP address before complex cryptographic operations begin, by setting the quic_retry directive to on. In this case NGINX sends the client a retry packet containing a token, which the client must include in connection‑setup packets.

This mechanism is somewhat like the three‑way TCP handshake and, critically, establishes that the client owns the source IP address that it is presenting. Without this check in place, QUIC servers like NGINX might be vulnerable to easy DoS attacks with spoofed source IP addresses. (Another QUIC mechanism that mitigates such attacks is the requirement that all initial connection packets must be padded to a minimum of 1200 bytes, making sending them a more expensive operation.)

In addition, retry packets mitigate an attack similar to the TCP SYN flood attack (where server resources are exhausted by a huge number of opened but not completed handshakes stored in memory), by encoding details of the connection in the connection ID it sends to the client; this has the further benefit that no server‑side information need be retained, as connection information can be reconstituted from the connection ID and token subsequently presented by the client. This technique is analogous to TCP SYN cookies. In addition, QUIC servers like NGINX can supply an expiring token to be used in future connections from the client, to speed up connection resumption.

Using connection IDs enables the connection to be independent of the underlying transport layer, so that changes in networking need not cause connections to break. This is discussed in Gracefully Managing Client IP Address Changes.

Loss Detection

With a connection established (and encryption enabled, as discussed further below), HTTP requests and responses can flow back and forth between the client and NGINX. UDP datagrams are sent and received. However, there are many factors that might cause some of these datagrams to be lost or delayed.

TCP has complex mechanisms to acknowledge packet delivery, detect packet loss or delay, and manage the retransmission of lost packets, delivering properly sequenced and complete data to the application layer. UDP lacks this facility and therefore congestion control and loss detection are implemented in the QUIC layer.

- Both client and server send an explicit acknowledgment for each QUIC packet they receive (although packets containing only low‑priority frames aren’t acknowledged immediately).

-

When a packet containing frames that require reliable delivery has not been acknowledged after a set timeout period, it is deemed lost.

Timeout periods vary depending on what’s in the packet – for instance, the timeout is shorter for packets that are needed for establishing encryption and setting up the connection, because they are essential for QUIC handshake performance.

- When a packet is deemed lost, the missing frames are retransmitted in a new packet, which has a new sequence number.

- The packet recipient uses the stream ID and offset on packets to assemble the transmitted data in the correct order. The packet number dictates only the order of sending, not how packets should be assembled.

- Because data assembly at the receiver is independent of transmission order, a lost or delayed packet affects only the individual streams it contains, not all streams in the connection. This eliminates the head-of-line blocking problem that affects HTTP/1.x and HTTP/2 because streams are not part of the transport layer.

A complete description of loss detection is beyond the scope of this primer. See RFC 9002, QUIC Loss Detection and Congestion Control, for details about the mechanisms for determining timeouts and how much unacknowledged data is allowed to be in transit.

Gracefully Managing Client IP Address Changes

A client’s IP address (referred to as the source IP address in the context of an application session) is subject to change during the session, for example when a VPN or gateway changes its public address or a smartphone user leaves a location covered by WiFi, which forces a switch to a cellular network. Also, network administrators have traditionally set lower timeouts for UDP traffic than for TCP connections, which results in increased likelihood of network address translation (NAT) rebinding.

QUIC provides two mechanisms to reduce the disruption that can result: a client can proactively inform the server that its address is going to change, and servers can gracefully handle an unplanned change in the client’s address. Since the connection ID remains consistent through the transition, unacknowledged frames can be retransmitted to the new IP address.

Changes to the source IP address during QUIC sessions may pose a problem for downstream load balancers (or other Layer 4 networking components) that use source IP address and port to determine which upstream server is to receive a particular UDP datagram. To ensure correct traffic management, providers of Layer 4 network devices will need to update them to handle QUIC connection IDs. To learn more about the future of load balancing and QUIC, see the IETF draft QUIC‑LB: Generating Routable QUIC Connection IDs.

Encryption

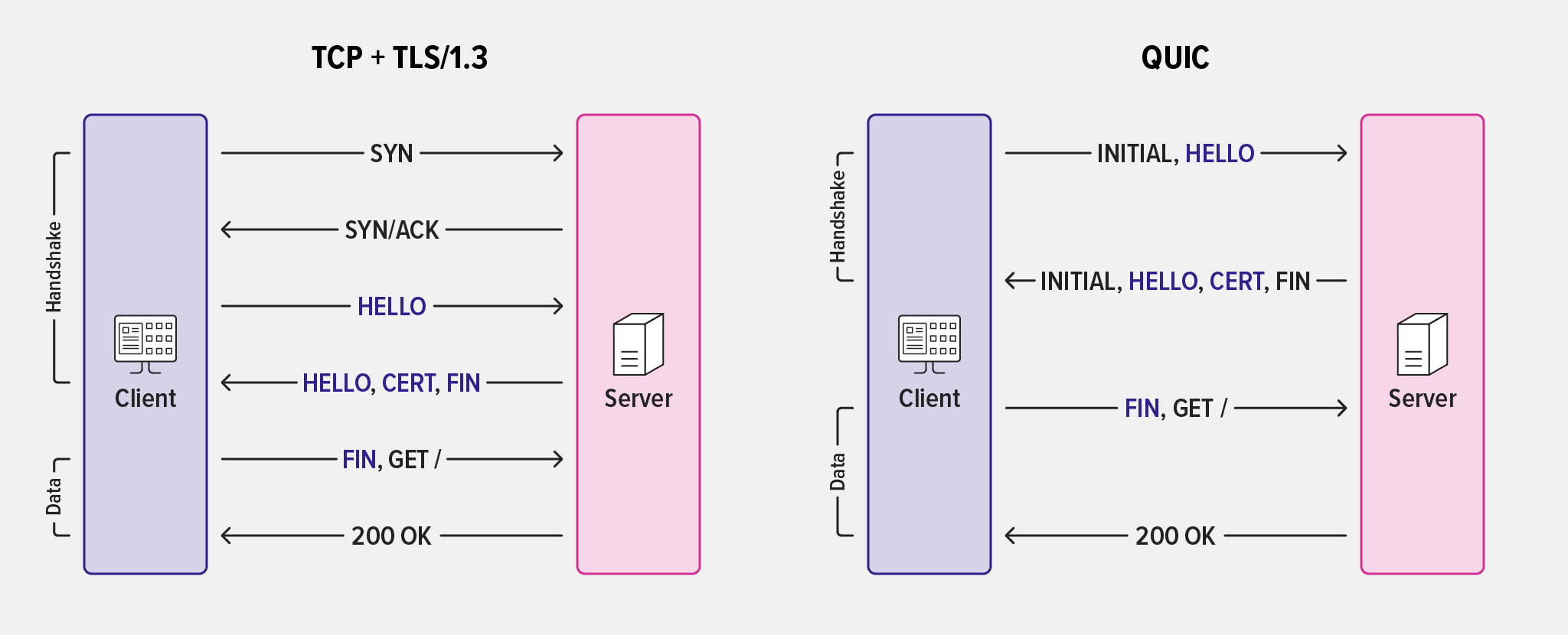

In Connection Establishment, we alluded to the fact that the initial QUIC handshake does more than simply establish a connection. Unlike the TLS handshake for TCP, with UDP the exchange of keys and TLS 1.3 encryption parameters occurs as part of the initial connection. This feature removes several exchanges and enables zero round‑trip time (0‑RTT) when the client resumes a previous connection.

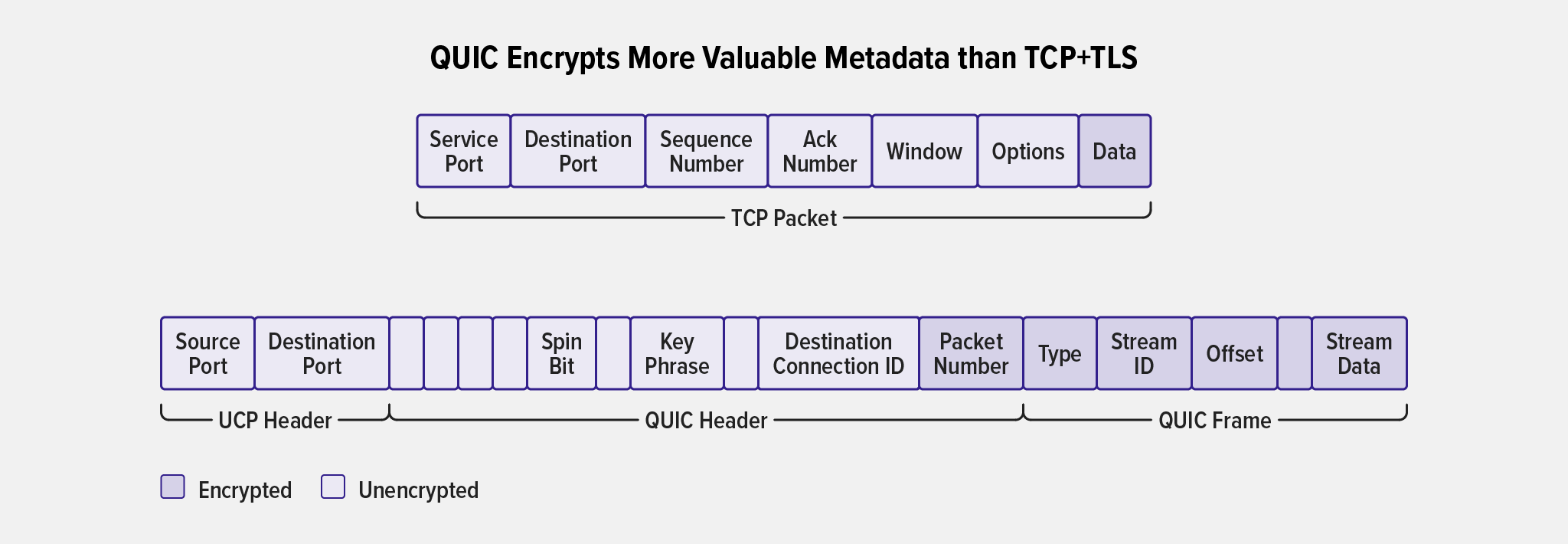

In addition to folding the encryption handshake into the connection‑establishment process, QUIC encrypts a greater portion of the metadata than TCP+TLS. Even before key exchange has occurred, the initial connection packets are encrypted; though an eavesdropper can still derive the keys, it takes more effort than with unencrypted packets. This better protects data such as the Server Name Indicator (SNI) which is relevant to both attackers and potential state‑level censors. Figure 5 illustrates how QUIC encrypts more potentially sensitive metadata (in red) than TCP+TLS.

All data in the QUIC payload is encrypted using TLS 1.3. There are two advantages: older, vulnerable cipher suites and hashing algorithms are not allowed and forward secrecy (FS) key‑exchange mechanisms are mandatory. Forward secrecy prevents an attacker from decrypting the data even if the attacker captures the private key and a copy of the traffic.

Low and Zero-RTT Connections Reduce Latency

Reducing the number of round trips that must happen between a client and server before any application data can be transmitted improves the performance of applications, particularly over networks with higher latency.

TLS 1.3 introduced a single round trip to establish an encrypted connection, and zero round trips to resume a connection, but with TCP this means the handshake has to occur before the TLS Client Hello.

Because QUIC combines cryptographic operations with connection setup, it provides true 0‑RTT connection re‑establishment, where a client can send a request in the very first QUIC packet. This reduces latency by eliminating the initial roundtrip for connection establishment before the first request.

In this case, the client sends an HTTP request encrypted with the parameters used in a previous connection, and for address‑validation purposes includes a token supplied by the server during the previous connection.

Unfortunately, 0‑RTT connection resumption does not provide Forward Secrecy, so the initial client request is not as securely encrypted as other traffic in the exchange. Requests and responses beyond the first request are protected by Forward Secrecy. Possibly more problematic is that the initial request is also vulnerable to replay attacks, where an attacker can capture the initial request and replay it to the server multiple times.

For many applications and websites, the performance improvement from 0‑RTT connection resumption outweighs these potential vulnerabilities, but that’s a decision you need to make for yourself.

This feature is disabled by default in NGINX. To enable it, set the ssl_early_data directive to on.

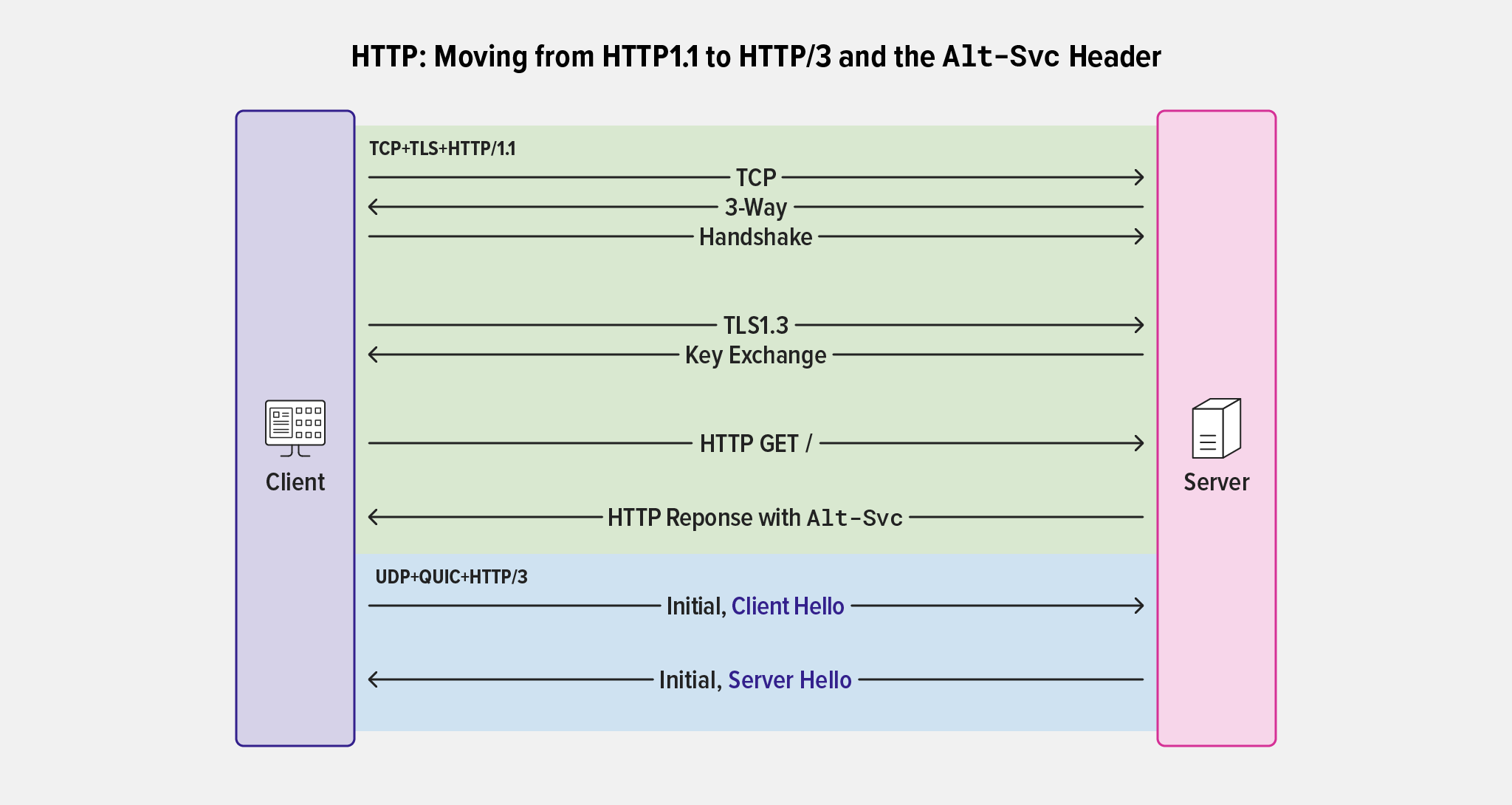

Moving from HTTP/1.1 to HTTP/3 with the Alt-Svc Header

Nearly all clients (browsers in particular) make initial connections over TCP/TLS. If a server supports QUIC+HTTP/3, it signals that fact to the client by returning an HTTP/1.1 response that includes the h3 parameter to the Alt-Svc header. The client then chooses whether to use QUIC+HTTP/3 or stick with an earlier version of HTTP. (As a matter of interest, the Alt-Svc header, defined in RFC 7838, predates QUIC and can be used for other purposes as well.)

Alt-Svc header is used to convert a connection from HTTP/1.1 to HTTP/3The Alt-Svc header tells a client that the same service is available on an alternate host, protocol, or port (or a combination thereof). In addition, clients can be informed how long it’s safe to assume that this service will continue to be available.

Some examples:

Alt-Svc: h3=":443" |

HTTP/3 is available on this server on port 443 |

Alt-Svc: h3="new.example.com:8443" |

HTTP/3 is available on server new.example.com on port 8443 |

Alt-Svc: h3=":8443"; ma=600 |

HTTP/3 is available on this server on port 8443 and will be available for at least 10 minutes |

Although not mandatory, in most cases servers are configured to respond to QUIC connections on the same port as TCP+TLS.

To configure NGINX to include the Alt-Svc header, use the add_header directive. In this example, the $server_port variable means that NGINX accepts QUIC connections on the port to which the client sent its TCP+TLS request, and 86,400 is 24 hours:

add_header Alt-Svc 'h3=":$server_port"; ma=86400';Conclusion

This blog provides a simplified primer on QUIC, and hopefully gives you enough of an overview to understand key networking and encryption operations used with QUIC.

For a more comprehensive look at configuring NGINX for QUIC + HTTP/3 read Binary Packages Now Available for the Preview NGINX QUIC+HTTP/3 Implementation on our blog or watch our webinar, Get Hands‑On with NGINX and QUIC+HTTP/3. For details on all NGINX directives for QUIC+HTTP/3 and complete instructions for installing prebuilt binaries or building from source, see the NGINX QUIC webpage.