Recently, we announced the release of the Ansible collection for NGINX Controller. The collection contains a set of Ansible Roles that make it easy to incorporate NGINX Controller into your workflows. You can automate routine tasks such as generating ephemeral API tokens, managing certificate lifecycles, and configuring Controller objects (Gateways, Applications, and Components).

If you’re an Ansible Automation Platform subscriber, you can access the certified collection on Ansible Automation Hub. The upstream, community version is on Ansible Galaxy. The NGINX Controller Ansible Tower examples are in my GitHub repository.

NGINX Controller is our cloud‑agnostic control‑plane solution for managing your NGINX Plus instances in multiple environments and leveraging critical insights into performance and error states. Its modules provide centralized configuration management for application delivery (load balancing) and API management. You can become familiar with the capabilities of NGINX Controller through our instructor‑led course.

What Can You Do with the Collection?

Some of the roles in the collection support day-to-day activities such as:

- Creating NGINX Plus instances (nginx)

- Registering NGINX Plus instances with NGINX Controller, by installing the NGINX Controller agent on the NGINX Plus host (nginx_controller_agent)

- Deploying and configuring NGINX Plus instances as gateways for routing and protecting incoming traffic (nginx_controller_gateway)

- Managing application workspaces (nginx_controller_application)

- Configuring components to intelligently and securely distribute traffic to your backend workloads (nginx_controller_component)

- Managing certificates that are distributed by NGINX Controller to the NGINX Plus instances where they are needed (nginx_controller_certificate)

- Retrieving an ephemeral API token for the NGINX Controller API (nginx_controller_generate_token)

Roles for actions you might perform less frequently include:

- Installing NGINX Controller itself; you might want to automate installation in your lab environment as part of a proof of concept or trial (nginx_controller_install)

- Managing your evaluation and production licenses (nginx_controller_license)

We plan to support additional workflows in the future, including user onboarding.

Overview of the Sample Scenario

This post accompanies a video in which we set up an application in an AWS production environment for a fictitious customer, ACME Financial, by promoting the configuration of an existing development environment. To do so, we use sample playbooks I have written that invoke the NGINX Controller roles. We take advantage of all the roles in the collection, as well as the sample playbooks and Ansible Tower workflows, to:

- Create new NGINX Plus instances in AWS

- Register the instances to be managed by NGINX Controller

- Manage certificates and configuration with NGINX Controller

Notes:

- This blog assumes familiarity with Ansible and NGINX Controller.

- In the demo we use Ansible Tower to control the process flow, but you can use the Ansible CLI so long as you pass in extra variables on the command line.

- Every organization is different, and there are multiple implementation patterns you can use depending on your needs. Treat these as examples to help you get started.

- To help you follow along with the demo, we include the time points from the video in the headers.

Establishing a Credential for NGINX Controller (3:30)

We first establish a new credential type for NGINX Controller in Ansible Tower. I have previously loaded the contents of the input_credential.yaml and injector_credential.yaml files from the credential_type.nginx_controller directory in my GitHub repo into Ansible Tower. The contents of these files are subject to enhancement over time, but in this demo the three important pieces are an FQDN, a user ID (email address), and a user password.

With the credential type established, we establish an actual credential called AWS NGINX Controller in Ansible Tower. I’ve previously entered my email address, password, and the FQDN of my NGINX Controller instance. As we go through the subsequent playbooks, Ansible Tower automatically passes the credentials as needed.
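As a rough sketch of what such a credential type can look like, the two fragments below follow Ansible Tower's custom credential type format: an input configuration that declares the fields, and an injector configuration that passes them to playbooks as extra variables. The field IDs and labels are assumptions chosen for illustration; the actual contents of input_credential.yaml and injector_credential.yaml in the repo may differ.

```yaml
# Input configuration (illustrative; compare with input_credential.yaml)
fields:
  - id: nginx_controller_fqdn            # hypothetical field ID
    type: string
    label: NGINX Controller FQDN
  - id: nginx_controller_user_email      # hypothetical field ID
    type: string
    label: NGINX Controller user email
  - id: nginx_controller_user_password   # hypothetical field ID
    type: string
    label: NGINX Controller user password
    secret: true                         # Tower masks and encrypts secret fields
required:
  - nginx_controller_fqdn
  - nginx_controller_user_email
  - nginx_controller_user_password
---
# Injector configuration (illustrative; compare with injector_credential.yaml)
# Each credential field is exposed to playbooks as an extra variable.
extra_vars:
  nginx_controller_fqdn: "{{ nginx_controller_fqdn }}"
  nginx_controller_user_email: "{{ nginx_controller_user_email }}"
  nginx_controller_user_password: "{{ nginx_controller_user_password }}"
```

Injecting the values as extra variables is what lets the later playbooks run without any credentials stored in the repository.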

Provisioning NGINX Plus Instances in the Cloud (4:30)

Before we can promote the app from development to production, we have to create and configure new NGINX Plus instances to host it. I have created some workflow and job templates in Ansible Tower, and to begin we launch the add_aws_controller_gateways workflow. It strings together playbooks from the controller_gateway_instances directory of my GitHub repo to do several things:

- Create new Amazon EC2 instances

- Add the instances to my Ansible Tower inventory

- Install NGINX Plus

- Install the NGINX Controller agent alongside NGINX Plus

Creating Amazon EC2 Instances (5:10)

When we kick off the add_aws_controller_gateways workflow, Ansible Tower brings up the contents of the aws_add_instance_vars.yaml file. We set count to 2 to create two EC2 instances and set environmentName to production-us-east. We leave the locationName unspecified in NGINX Controller, and verify that securityGroup, imageID, keyName, and loadbalancerPublicSubnet are set to the values we want.
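For orientation, a variables file of this shape might look like the sketch below. The variable names come from the description above; every value other than count and environmentName is a placeholder, and the value shown for locationName is only one reading of "unspecified" (the name of Controller's default location).

```yaml
---
# Illustrative extra variables for the add_aws_controller_gateways workflow.
# Outside Ansible Tower, a file like this can be passed on the command line:
#   ansible-playbook <playbook> -e @aws_add_instance_vars.yaml
count: 2                                            # number of EC2 instances to create
environmentName: production-us-east                 # Controller Environment to tag against
locationName: unspecified                           # assumption: Controller's default location
securityGroup: sg-0123456789abcdef0                 # placeholder
imageID: ami-0123456789abcdef0                      # placeholder
keyName: my-keypair                                 # placeholder
loadbalancerPublicSubnet: subnet-0123456789abcdef0  # placeholder
```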

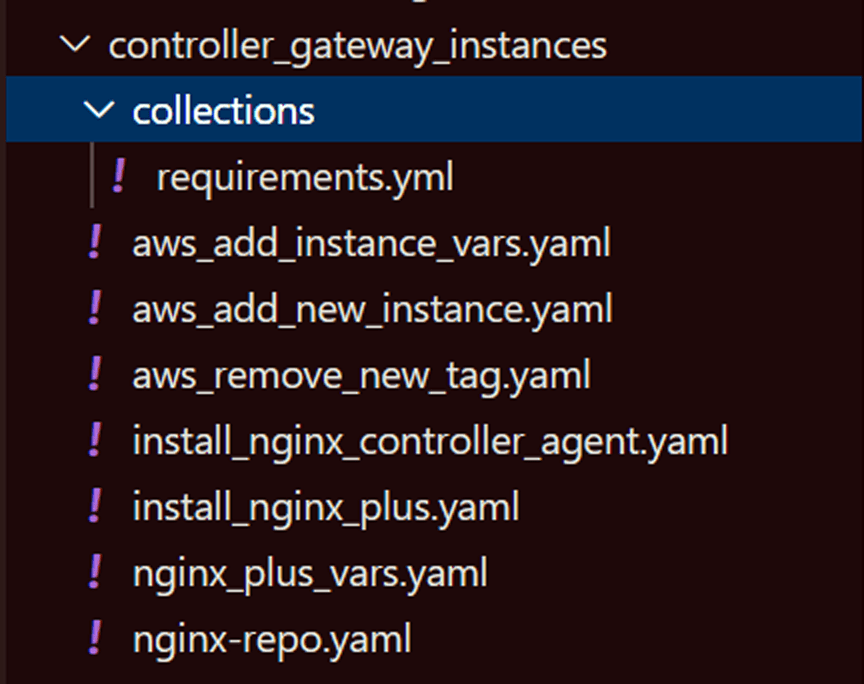

While the workflow starts to run, we look in Visual Studio Code at the contents of the controller_gateway_instances directory, as shown in this screenshot. In the demo we review the contents of various files at the appropriate stage.

The two files we examine at this stage are:

- collections/requirements.yml fetches the nginx_controller collection into Ansible Tower from the nginxinc namespace in Ansible Galaxy or Ansible Automation Hub, so I don’t have to mess with manually installing the roles.

- add_aws_new_instance.yaml provisions the load‑balancing machine instances in Amazon EC2. It tags them with environment and location names, which establishes a sortable link between the AWS infrastructure and NGINX Controller. In particular, I apply the new_gateway key as a tag to use in controlling later stages of the workflow.
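A minimal collections/requirements.yml in the standard ansible-galaxy format could look like this. It is a sketch rather than a copy of the file in the repo, and the commented version pin is a placeholder.

```yaml
---
# collections/requirements.yml (sketch)
collections:
  - name: nginxinc.nginx_controller
    # Optionally pin a release, for example:
    # version: "x.y.z"   # placeholder, not a real version number
```

Ansible Tower installs the collections listed in this file when it updates the project, which is why no manual installation step appears in the workflow.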

Establishing a Machine Inventory (7:08)

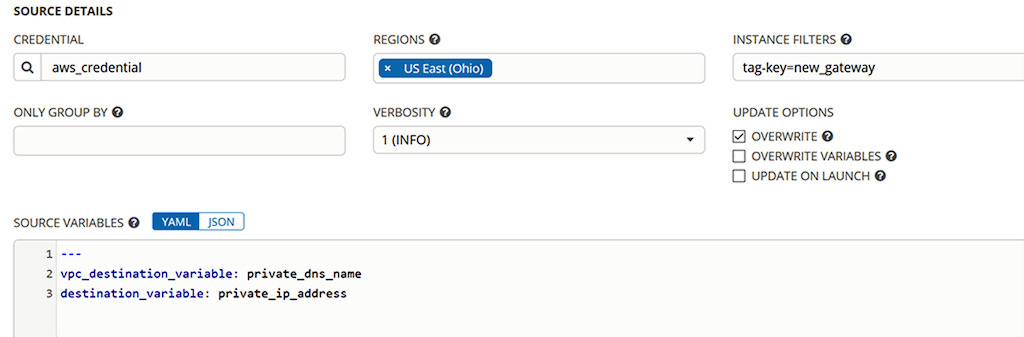

Ansible offers a few options to manage inventory, and the one you choose is really a personal preference. I took advantage of the Ansible Tower cloud‑based inventory “source” feature instead of statically setting machine names in the inventory. In the aws_us-east-2_gateway_new inventory, we look at the details on the SOURCES tab:

- In the SOURCE field, I have specified Amazon EC2.

- In the INSTANCE FILTERS field, I have set a filter on the new_gateway key that was set by the add_aws_new_instance.yaml playbook in Creating Amazon EC2 Instances.

- In the SOURCE VARIABLES field, I have modified the default behavior of setting a public IP address as the endpoint of a host in the inventory. In this scenario Ansible Tower is running in AWS and can reach the private IP addresses of my instances, so my preference is to use them instead of publicly exposed interfaces.
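The private-address preference can be expressed in the SOURCE VARIABLES field roughly as follows. This sketch assumes the ec2.py-style options that the Ansible Tower EC2 inventory source accepted at the time; newer inventory plugins use different option names, so check the documentation for your version.

```yaml
---
# SOURCE VARIABLES for the Amazon EC2 inventory source (sketch).
# Assumes ec2.py-style option names.
vpc_destination_variable: private_ip_address   # address used for instances inside a VPC
destination_variable: private_dns_name         # address used for instances outside a VPC
```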

At this point in the demo, Ansible Tower notifies us that the two EC2 instances are created, and we switch to the HOSTS tab to display them.

Installing NGINX Plus (8:30)

We then access the notification and approve the continuation of the workflow, to install NGINX Plus on the two new EC2 instances that are tagged with the new_gateway key.

While the workflow progresses, we review the actions in the install_nginx_plus.yaml playbook, which installs and configures NGINX Plus. The playbook uses the hosts statement to switch between performing actions on localhost (the Ansible Tower host) and the new EC2 instances marked with tag_new_gateway.

First, on the Ansible Tower host the playbook reads in the NGINX Plus key and certificate from Ansible Vault and decrypts them for use by the nginx role as it installs NGINX Plus on the EC2 instances.

On the EC2 instances, the playbook:

- Loads the nginx_plus_vars.yaml file (which we examine in detail just below).

- Invokes the official nginx role to install NGINX Plus. The role is not specific to NGINX Controller, but is included in the collection for convenience because (as in the demo) you often create new NGINX Plus instances before registering them for management by Controller.

- Sets some logging parameters appropriate for the very small EC2 instance size used in the demo.

Back on the Ansible Tower host, the playbook removes the decrypted NGINX Plus key and certificate to make sure they’re not inadvertently exposed.
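The three stages described above can be sketched as a multi-play playbook. This is not the file from the repo: the variable names nginx_repo_crt and nginx_repo_key, the temporary paths, and the fully qualified role name are assumptions, and the logging adjustments are omitted.

```yaml
---
# Sketch of the localhost / EC2 / localhost structure; not the repo's file.

- name: Stage the NGINX Plus repository credentials on the Tower host
  hosts: localhost
  gather_facts: false
  vars_files:
    - nginx-repo.yaml            # Vault-encrypted; Ansible decrypts it when loading
  tasks:
    - name: Write the decrypted certificate and key to temporary files
      ansible.builtin.copy:
        content: "{{ item.content }}"
        dest: "{{ item.dest }}"
        mode: "0600"
      loop:
        # nginx_repo_crt and nginx_repo_key are hypothetical variable names
        - { content: "{{ nginx_repo_crt }}", dest: /tmp/nginx-repo.crt }
        - { content: "{{ nginx_repo_key }}", dest: /tmp/nginx-repo.key }
      no_log: true               # keep the secrets out of the job output

- name: Install NGINX Plus on the new instances
  hosts: tag_new_gateway
  become: true
  vars_files:
    - nginx_plus_vars.yaml
  roles:
    - role: nginxinc.nginx_controller.nginx   # assumed fully qualified role name

- name: Remove the decrypted credentials from the Tower host
  hosts: localhost
  gather_facts: false
  tasks:
    - name: Delete the temporary certificate and key
      ansible.builtin.file:
        path: "{{ item }}"
        state: absent
      loop:
        - /tmp/nginx-repo.crt
        - /tmp/nginx-repo.key
```

Splitting the work into separate plays is what allows a single playbook to alternate between the Tower host and the group of tagged instances.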

Now we examine the nginx_plus_vars.yaml file, which configures NGINX Plus on our instances by:

- Enabling and starting NGINX Plus

- Protecting the NGINX Plus key and certificate from exposure

- Enabling the NGINX Plus API, which Controller uses to manage NGINX Plus on the instances

- Loading the NGINX JavaScript (njs) module
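A variables file covering those four points might look like the sketch below. The variable names are assumptions based on the nginx role's documented defaults around the time of the demo and may differ in the version you install, so check the role's README before relying on them. The license paths are placeholders.

```yaml
---
# Sketch of nginx_plus_vars.yaml; variable names are assumptions and may
# vary between versions of the nginx role.
nginx_type: plus                       # install NGINX Plus rather than NGINX Open Source
nginx_enable: true                     # enable the service at boot
nginx_start: true                      # start the service after installation
nginx_license:
  certificate: /tmp/nginx-repo.crt     # placeholder path on the Ansible host
  key: /tmp/nginx-repo.key             # placeholder path on the Ansible host
nginx_delete_license: true             # remove the key and certificate from the instance afterwards
nginx_rest_api_enable: true            # enable the NGINX Plus API
nginx_modules:
  njs: true                            # load the NGINX JavaScript module
```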

We finish this stage with a quick look at the nginx-repo.yaml file, which has the encrypted NGINX Plus key and certificate as stored in Ansible Vault.

Registering NGINX Plus Instances with NGINX Controller (11:10)

As the workflow continues in the background, we examine the install_nginx_controller_agent.yaml playbook, which registers the NGINX Plus instances with NGINX Controller by installing the Controller agent on them. The agent is the conduit for communication between an NGINX Plus instance and NGINX Controller.

As in Installing NGINX Plus, the playbook uses the hosts statement to switch between performing actions on localhost (the Ansible Tower host) and the new EC2 instances marked with tag_new_gateway.

On the Ansible Tower host, the playbook invokes the nginx_controller_generate_token role and creates an ephemeral API token that the agent will use to communicate with NGINX Controller. This role is invoked in several of the playbooks, whenever we need an ephemeral token for API communication with Controller.

On the NGINX Plus instances, the playbook invokes the nginx_controller_agent role to install the Controller agent.
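In outline, the two-play structure could look like the following sketch. The fully qualified role names, the nginx_controller_auth_token variable, and the way the token is handed from localhost to the instances are assumptions for illustration; the repo's playbook may pass the token differently.

```yaml
---
# Sketch of the token-then-agent structure; not the repo's file.

- name: Generate an ephemeral NGINX Controller API token
  hosts: localhost
  gather_facts: false
  roles:
    # Assumed: the FQDN, email address, and password arrive as extra
    # variables injected by the Ansible Tower credential.
    - role: nginxinc.nginx_controller.nginx_controller_generate_token

- name: Install the Controller agent on the new NGINX Plus instances
  hosts: tag_new_gateway
  become: true
  vars:
    # Assumed: the token role stores its result as a fact on localhost
    # under this name, so other hosts can read it through hostvars.
    nginx_controller_auth_token: "{{ hostvars['localhost']['nginx_controller_auth_token'] }}"
  roles:
    - role: nginxinc.nginx_controller.nginx_controller_agent
```

Generating the token on the Tower host means the Controller password never has to reach the managed instances.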

Verifying the NGINX Plus Installation and Registration (12:05)

At this point in the demo, we receive a notification that installation of NGINX Plus and the Controller agent is complete, so we switch to Controller and look at the Infrastructure > Instances tab. We see the two new instances, identified as 112 and 114.

From the Services > Environments tab, we navigate to our new production-us-east Environment and see that it is not yet configured. A Controller Environment is a set of infrastructure objects managed by a designated set of consumers in your organization, like a specific Development or Operations team.

Looking at the Amazon EC2 dashboard, we see that one of the instances has the new_gateway tag on it and another doesn’t. We don’t actually need to distinguish between new and old instances anymore, however, and when we return to Ansible Tower and approve the continuation of the workflow, the tag gets removed from any EC2 instances that have it.

Configuring the Production Environment (14:03)

There are several configuration objects associated with a Controller Environment. For this demo, they are stored in GitHub: a certificate, a Gateway configuration (defining the ingress details), an Application configuration, and Component configurations. Next we pull those objects into Ansible Tower and apply them to our production-us-east Environment in Controller.

For certificates, for example, I have set things up so that when I update a certificate in GitHub, Ansible Tower automatically receives a webhook notification from GitHub and updates the certificate in Controller.

Having the configuration objects in GitHub is very convenient, because we can bundle them all up at any point in time and restore the Environment to that state if we need to. This is the familiar benefit of keeping configuration in an external source of truth.

To configure our production environment, we launch another workflow with the 1-promote_dev_to_X–trading template in Ansible Tower. On the DETAILS tab, we define the environmentName as production-us-east, the two instanceNames as 112 and 114, and the ingressUris as {http,https}://trading.acmefinancial.net.

Uploading the App Certificate (16:33)

While the workflow progresses in the background, we examine the nginx_controller_certificate.yaml playbook from the certificates_trading.acmefinancial.net directory in GitHub (the directory was called certificate_merch.acmefinancial.net when the demo was recorded).

The playbook runs on the Ansible Tower host. It invokes the nginx_controller_generate_token role to generate an ephemeral token for the Controller API, loads the certificate and key for the trading.acmefinancial.net application from the vars.yaml file, and invokes the nginx_controller_certificate role to update the certificate for trading.acmefinancial.net in Controller.

Examining the vars.yaml file, we see in the displayName field the name of the certificate as displayed in Controller, in the type field that the certificate type is PEM, and in the final three fields the names of the key, certificate, and certificate chain (note that the values in the demo are slightly different from the values in the GitHub file).

One key to success here is the formatting of the certificate variables. Here the key and certificate bodies are read dynamically from files in the local directory in GitHub, but they could just as well be secured by Ansible Vault. Either way, it is the pipe symbol ( | ) in the variable definition that keeps all the line endings intact and thus results in a valid certificate or key in the variable body. The variable caCerts is an array of certificates for an intermediate certificate authority, for example when you use your internal PKI.
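To illustrate the role of the pipe symbol, here is a sketch of a certificate definition with the PEM bodies written inline. Only displayName, type, and caCerts are named in the text above; the top-level variable name, the metadata/desiredState layout, and the privateKey and publicCert keys are assumptions, and the PEM bodies are elided placeholders.

```yaml
---
# Sketch of a certificate definition; key names other than displayName,
# type, and caCerts are assumptions.
nginx_controller_certificate:                  # hypothetical variable name
  metadata:
    name: trading.acmefinancial.net
    displayName: trading.acmefinancial.net     # name shown in Controller
  desiredState:
    type: PEM
    # The literal block scalar (|) preserves every newline in the PEM body.
    privateKey: |
      -----BEGIN PRIVATE KEY-----
      (base64 lines elided)
      -----END PRIVATE KEY-----
    publicCert: |
      -----BEGIN CERTIFICATE-----
      (base64 lines elided)
      -----END CERTIFICATE-----
    caCerts:
      - |
        -----BEGIN CERTIFICATE-----
        (intermediate CA certificate, base64 lines elided)
        -----END CERTIFICATE-----
```

With a folded scalar (>) or a plain multi-line scalar, YAML would join the lines with spaces, and the resulting string would no longer be a valid PEM document.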

Configuring a Gateway to Define Ingress Traffic (17:40)

Now we are at the point of defining a Gateway. A Gateway describes how our customers reach our application. It can service one or many ingress paths, URIs, or domains, depending on how your organization defines customer access to your network.

We examine the nginx_controller_gateway.yaml playbook from the gateway_retail.dev_trading directory in GitHub (in the demo, the directory name is gateway_lending.prod_public, but the contents of the file are the same). Running on the Ansible Tower host, the playbook loads the vars.yaml file and invokes the nginx_controller_generate_token and nginx_controller_gateway roles.

The key here is that an NGINX Plus instance is tied to a Gateway configuration. In fact, a single instance or set of instances can service multiple Gateway configurations. NGINX Controller generates and applies the configuration for you, while ensuring that instances sharing configurations never receive configurations that conflict with each other.
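As a sketch of how a Gateway definition ties ingress URIs to instances, the fragment below uses the values from this demo. The variable name, the Gateway name, the key layout, and the instance reference paths are assumptions modeled on Controller's metadata/desiredState object style; consult the API reference for your Controller version for the exact schema.

```yaml
---
# Sketch only; exact keys and reference paths are assumptions.
nginx_controller_gateway:                      # hypothetical variable name
  metadata:
    name: trading-gateway                      # hypothetical Gateway name
  desiredState:
    ingress:
      uris:
        "http://trading.acmefinancial.net": {}
        "https://trading.acmefinancial.net": {}
      placement:
        instanceRefs:                          # the Gateway is served by both instances
          - ref: /infrastructure/locations/unspecified/instances/112
          - ref: /infrastructure/locations/unspecified/instances/114
```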

Configuring the Application and Component Paths to Back‑End Workloads (18:15)

The playbooks in the application_trading_configurations directory set up the Application and Component configurations in NGINX Controller. A Component defines a sub‑part of an Application, enabling you to deploy self‑contained pieces responsible for a particular function of the overall application. It defines which backend servers respond to requests for specific URIs under the FQDN handled by a given gateway. In our demo, each Component is built and managed by a different team.

- app_trading.yaml defines the configuration of the Application itself

- component_files.yaml defines the handling of requests to the /file URI

- component_main.yaml defines the handling of requests to the / URI

- component_referrals.yaml defines the handling of requests to the /app3 URI

- component_transfers.yaml defines the handling of requests to the /api URI

The task_app.yaml playbook configures the Application by reading in the configuration from app_trading.yaml and invoking the nginx_controller_application role.

Similarly, the task_components.yaml playbook configures Components by reading in the configuration from the component_*.yaml files and invoking the nginx_controller_component role.
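One way to apply every Component definition is to loop over the matching files and invoke the role once per file, as in this sketch of a task list. The fully qualified role name and the nginx_controller_component variable are assumptions, and the sketch presumes that an API token has already been generated earlier in the run.

```yaml
---
# Sketch of a task list that applies each component_*.yaml definition.
- name: Configure each Component in NGINX Controller
  ansible.builtin.include_role:
    name: nginxinc.nginx_controller.nginx_controller_component   # assumed role name
  vars:
    # Parse the current file into a dictionary (assumed variable name).
    nginx_controller_component: "{{ lookup('file', item) | from_yaml }}"
  loop: "{{ query('fileglob', playbook_dir ~ '/component_*.yaml') }}"
```

Keeping one file per Component fits the demo's premise that each Component is owned by a different team, since each team can change its own file without touching the others.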

Summary (20:20)

We conclude by navigating to the production-us-east Environment in Controller, under Services > Environments. We see that our Application (Trading.ACME.Financial.net), Certificate, and Gateway are all defined. When we drill down into the Application, we see the four Components (Transfers, Referrals, File, and Main) we configured in the previous section.

With this demo, we’ve shown how to promote an application from a development to a production environment using NGINX Controller incorporated into a pipeline controlled by Ansible roles and playbooks.

We see the NGINX Controller collection as a starting place that will grow over time through efforts from NGINX and the community. I invite you to contribute to the collection and let us know what new functionality is important to you.

If you want to take NGINX Controller for a spin, request a free trial or contact us to discuss your use cases.