Author’s note – This blog post is the fourth in a series:

- Introducing the Microservices Reference Architecture from NGINX

- MRA, Part 2: The Proxy Model

- MRA, Part 3: The Router Mesh Model

- MRA, Part 4: The Fabric Model (this post)

- MRA, Part 5: Adapting the Twelve‑Factor App for Microservices

- MRA, Part 6: Implementing the Circuit Breaker Pattern with NGINX Plus

All six blogs, plus a blog about web frontends for microservices applications, have been collected into a free ebook.

Also check out these other NGINX resources about microservices:

- A very useful and popular series by Chris Richardson about microservices application design

- The Chris Richardson articles collected into a free ebook, with additional tips on implementing microservices with NGINX and NGINX Plus


Introducing the Fabric Model

The Fabric Model is the most sophisticated of the three models found in the NGINX Microservices Reference Architecture (MRA). It’s internally secure, fast, efficient, and resilient.

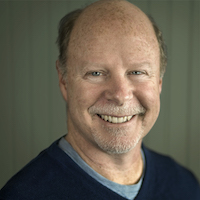

Like the Proxy Model, the Fabric Model places NGINX Plus as a reverse proxy server in front of application servers, bringing many benefits. In the Router Mesh Model, NGINX Plus instances within the microservices application act as central communications points for other service instances. But in the Fabric Model, there is a dedicated NGINX Plus server instance in each microservice container. As a result, SSL/TLS security can be implemented for all connections at the microservice level.

Using many NGINX Plus instances has one crucial benefit: you can dynamically create SSL/TLS connections between microservices – connections that are stable, persistent, and therefore fast. An initial SSL/TLS handshake establishes a connection that the microservices application can reuse without further overhead, for scores, hundreds, or thousands of interservice requests.

Figure 1 shows how, in the Fabric Model, NGINX Plus runs on the reverse proxy server and also in each service instance, allowing fast, secure, and smart interservice communication. The Pages service, which has multiple instances in the figure, is a web‑frontend microservice used in the MRA.

The Fabric Model turns the usual view of application development and delivery on its head. Because NGINX Plus is on both ends of every connection, its capabilities become properties of the network that the app is running on, rather than capabilities of specific servers or microservices. NGINX Plus becomes the medium for bringing the network, the “fabric”, to life, making it fast, secure, smart, and extensible.

The Fabric Model is suitable for several use cases, which include:

- Government and military apps – For government apps, security is crucial and sometimes even required by law. The need for security in military computation and communications is obvious – as is the need for speed.

- Health and finance apps – Regulatory and user requirements mandate a combination of security and speed for financial and health apps, with billions of dollars in financial and reputational value at stake.

- Ecommerce apps – User trust is a huge issue for ecommerce, and speed is a key competitive differentiator. So combining speed and security is crucial.

As an increasing number of apps use SSL/TLS to protect client communication, it makes sense for backend – service‑to‑service – communications to be secured as well.

Why the Fabric Model?

The use of microservices for larger apps raises a number of questions, as described in our ebook, Microservices: From Design to Deployment, and in our series of blog posts on microservices design. Four specific problems affect larger apps. The Fabric Model addresses these problems – and, we believe, largely resolves them.

These issues are:

- Secure, fast communication – Monolithic apps use in‑memory communication between processes; microservices communicate over the network. The move to network communications raises issues of speed and security. The Fabric Model makes communications secure by using SSL/TLS connections for all requests; it makes them fast by using NGINX Plus to make the connections persistent – minimizing the most resource‑intensive part of the process, the SSL handshake.

- Service discovery – In a monolith, functional components in an app are connected to each other by the application engine. A microservices environment is dynamic, so services need to find each other before communicating. In the Fabric Model, services do their own service discovery, with NGINX Plus using its built‑in DNS resolver to query the service registry.

- Load balancing – User requests need to be distributed efficiently across microservice instances. In the Fabric Model, NGINX Plus manages load balancing between microservice instances, providing a variety of load‑balancing schemes to match the needs of the services on both ends of the connection.

- Resilience – A badly behaving service instance can greatly impact the performance and stability of an app. In the Fabric Model, NGINX Plus can run health checks on every microservice, implementing the powerful circuit breaker pattern as an inherent property of the network environment the app runs in.

The Fabric Model is designed to work with external code for container management and for service discovery and registration. This can be provided by a container management framework such as Deis, Kubernetes, or DCOS; specific service discovery tools, such as Consul, etcd, or ZooKeeper; custom code; or a combination.

Through the use of NGINX Plus within each microservice instance, in collaboration with a container management framework or custom code, all aspects of these capabilities – interprocess communication, service discovery, load balancing, and the app’s inherent security and resilience – are fully configurable and subject to progressive improvement.

Fabric Model Capabilities

This section describes in greater depth the additional capabilities of the Fabric Model that were introduced in the previous section. Properties that derive from the use of NGINX Plus “in front of” application servers are also part of the other two Models, and are described in our Proxy Model blog post.

The “Normal” Process

The Fabric Model is an improvement on the typical microservices approach to service discovery, load balancing, and interprocess communication. To understand the advantages of the Fabric Model, it’s valuable to first take a look at how a “normal” microservices app carries out these functions.

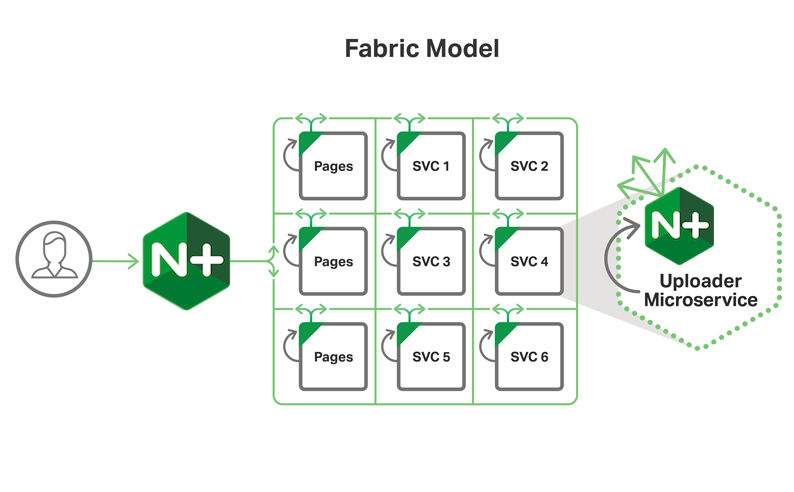

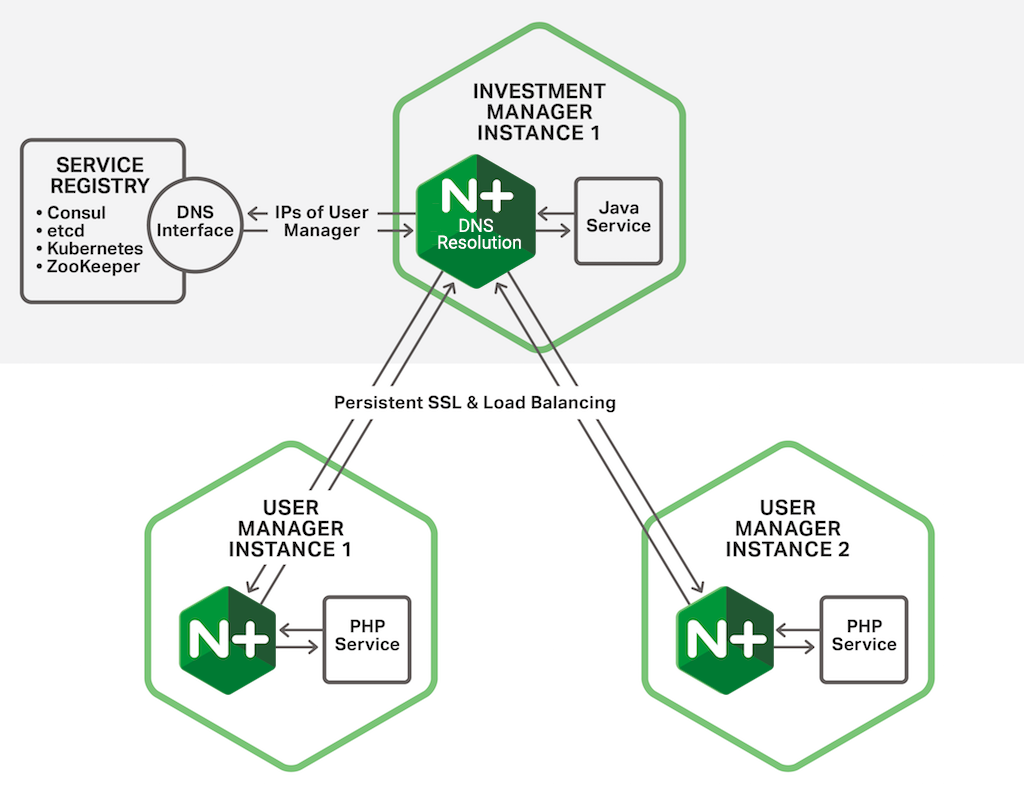

Figure 2 shows a microservices app with three service instances – one instance of an investment manager service and two instances of a user manager service.

When Investment Manager Instance 1 needs to make a request of User Manager Instance 1, it initiates the following process:

- Investment Manager Instance 1 creates an instance of an HTTP client.

- The HTTP client requests the address of the User Manager instance from the service registry’s DNS interface.

- The service registry sends back the IP address for one of the User Manager service instances – in this case, Instance 1.

- Investment Manager Instance 1 initiates an SSL/TLS connection to User Manager Instance 1 – a lengthy, nine‑step process.

- Using the new connection, Investment Manager Instance 1 sends the request.

- Replying on the same connection, User Manager Instance 1 sends the response.

- Investment Manager Instance 1 closes down the connection.

Dynamic Service Discovery

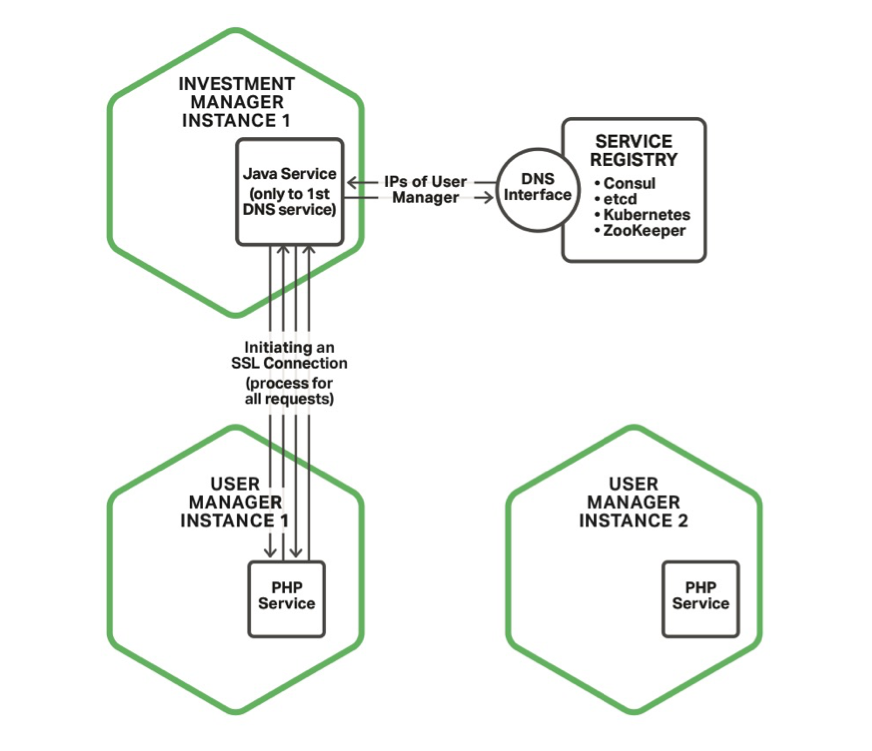

In the Fabric Model, the service discovery mechanism is entirely different. The DNS resolver running in NGINX Plus maintains a table of available service instances. The table is updated regularly; NGINX Plus does not require a restart to update the table.

To keep the table up to date, NGINX Plus runs an asynchronous, nonblocking resolver that queries the service registry regularly, perhaps every few seconds, using DNS SRV records for service discovery – a feature introduced in NGINX Plus R9. When a service instance needs to make a request, the endpoints for all peer microservices are already available.

It is important to note that neither NGINX Plus nor the Fabric Model provides any mechanism for service registration – the Fabric Model is wholly dependent on a container management system, a service discovery tool, or equivalent custom code to manage the orchestration and registration of containers.
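To make the idea of a background-refreshed endpoint table concrete, here is a minimal Python sketch. It is not how NGINX Plus is implemented; it only illustrates the pattern. The `EndpointTable` class and the `lookup` callable (a stand-in for a DNS SRV query against the service registry) are hypothetical names introduced for this example.

```python
import threading
from typing import Callable, Dict, List, Tuple

Endpoint = Tuple[str, int]  # (host, port), the kind of data a DNS SRV answer carries


class EndpointTable:
    """Table of service endpoints kept fresh by a background refresher."""

    def __init__(self, lookup: Callable[[str], List[Endpoint]], interval: float = 5.0):
        self._lookup = lookup        # hypothetical stand-in for a DNS SRV query
        self._interval = interval    # seconds between registry queries
        self._table: Dict[str, List[Endpoint]] = {}
        self._lock = threading.Lock()
        self._stop = threading.Event()

    def refresh(self, service: str) -> None:
        """Query the registry once and swap in the new endpoint list."""
        endpoints = self._lookup(service)
        with self._lock:
            self._table[service] = endpoints

    def endpoints(self, service: str) -> List[Endpoint]:
        """Read path used at request time: no registry round trip."""
        with self._lock:
            return list(self._table.get(service, []))

    def start(self, service: str) -> threading.Thread:
        """Refresh the table in a daemon thread until stop() is called."""
        def loop() -> None:
            while not self._stop.is_set():
                self.refresh(service)
                self._stop.wait(self._interval)

        thread = threading.Thread(target=loop, daemon=True)
        thread.start()
        return thread

    def stop(self) -> None:
        self._stop.set()
```

The point of the design is that `endpoints()` never waits on the registry: the lookup cost is paid in the background, so the request path only reads a table that is already populated.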

Load Balancing

The table of service instances maintained by the NGINX Plus DNS resolver is also the load‑balancing pool to which NGINX Plus routes requests. As the developer, you choose the load‑balancing method to use. One option is Least Time, a sophisticated algorithm that interacts with health checks to send requests to the fastest‑responding service instance. (Health checks normally run asynchronously in the background.)

If a service has to connect to a monolithic system, or some other stateful system, session persistence ensures that requests within a given user session continue to be sent to the same service instance.

With load balancing built in, you can optimize the performance of each service instance – and therefore the app as a whole.
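As a rough illustration of time-based selection, the Python sketch below picks the healthy instance with the lowest smoothed response time. It is a simplified stand-in, not the actual Least Time algorithm in NGINX Plus; the `Instance` class, the `pick_fastest` function, and the smoothing factor are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Instance:
    name: str
    healthy: bool = True
    avg_ms: float = 0.0   # smoothed response time in milliseconds
    samples: int = 0

    def record(self, elapsed_ms: float, alpha: float = 0.2) -> None:
        """Fold a new response time into an exponentially weighted average."""
        if self.samples == 0:
            self.avg_ms = elapsed_ms
        else:
            self.avg_ms = alpha * elapsed_ms + (1 - alpha) * self.avg_ms
        self.samples += 1


def pick_fastest(pool: List[Instance]) -> Optional[Instance]:
    """Return the healthy instance with the lowest smoothed response time.

    An instance with no samples yet has avg_ms == 0.0, so it is tried first.
    """
    candidates = [inst for inst in pool if inst.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda inst: inst.avg_ms)
```

Because unhealthy instances are filtered out before the comparison, the health checks and the load-balancing decision work from the same pool, which is the interaction the text describes.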

SSL/TLS Connections “For Free”

SSL/TLS connections in the Fabric Model are persistent. A connection is created, with a full SSL handshake, the first time one service instance makes a request of another – and then the same connection is reused, perhaps thousands of times, for future requests.

In essence, a mini‑VPN is created between pairs of service instances. The effect is dramatic; in one recent test, fewer than 1% of transactions required a new SSL handshake. (It’s important to note that even though the overhead for handshakes is very small, SSL/TLS is still not free, because all message data is encrypted and decrypted.)

With service discovery and load balancing running as background tasks, not repeated as a part of each new transaction, transactions are very fast.

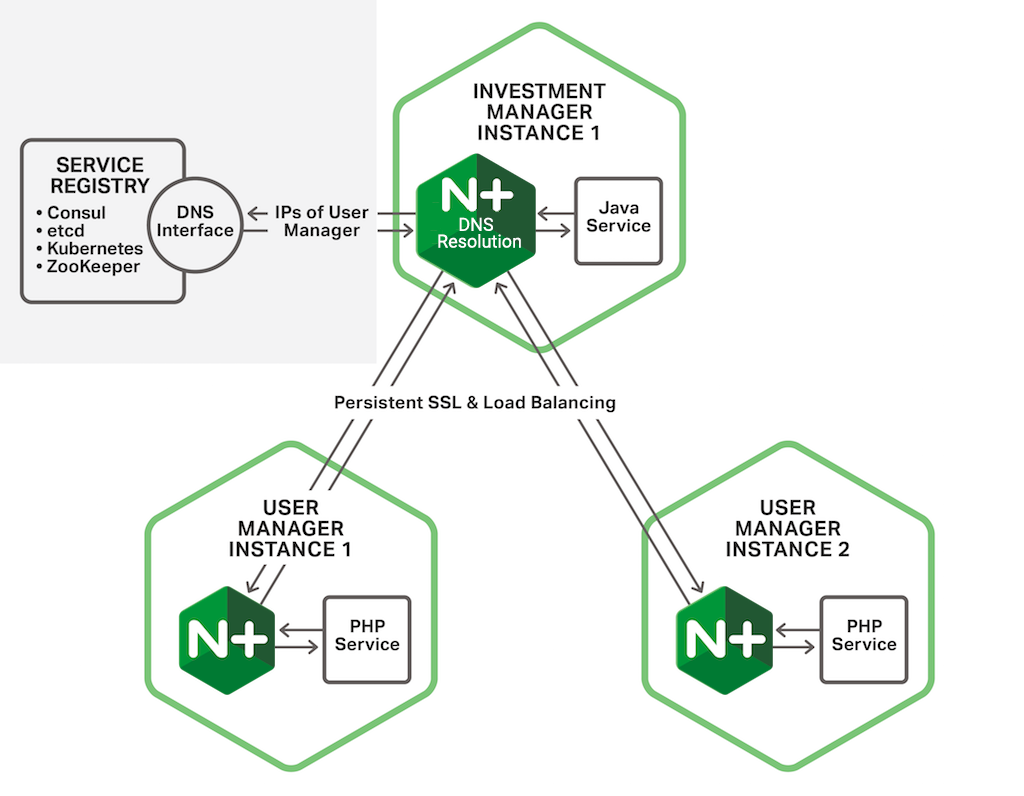

Here’s how connections are created and used for the same operation outlined for the “normal” process:

- Within Investment Manager Instance 1, application code constructs a request to be sent to the User Manager and sends the request to its local NGINX Plus instance.

- From its internal table, and applying the load‑balancing method chosen by the developer, NGINX Plus selects the endpoint for User Manager Instance 1 as the destination for the request (see Dynamic Service Discovery).

- If this is the first request between the two service instances, the NGINX Plus instance establishes a persistent SSL/TLS connection to User Manager Instance 1. For later requests, the persistent connection is reused.

- Using the persistent connection, Investment Manager Instance 1 sends the request.

- Replying on the same connection, User Manager Instance 1 sends the response.
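The steps above can be modeled with a small Python sketch that opens one connection per upstream endpoint and reuses it for every later request. No real TLS is involved: `FakeTLSConnection` and `PersistentPool` are hypothetical classes that only count how often a handshake would be needed.

```python
from typing import Dict, Tuple

Endpoint = Tuple[str, int]


class FakeTLSConnection:
    """Stand-in for a TLS connection; a real one would wrap a socket."""

    def __init__(self, endpoint: Endpoint):
        self.endpoint = endpoint
        self.requests_sent = 0

    def send(self, request: str) -> str:
        self.requests_sent += 1
        return f"response to {request!r} from {self.endpoint[0]}"


class PersistentPool:
    """Keeps one open connection per upstream endpoint and reuses it."""

    def __init__(self) -> None:
        self._open: Dict[Endpoint, FakeTLSConnection] = {}
        self.handshakes = 0

    def request(self, endpoint: Endpoint, request: str) -> str:
        conn = self._open.get(endpoint)
        if conn is None:
            # First request to this endpoint: pay for the handshake once.
            self.handshakes += 1
            conn = FakeTLSConnection(endpoint)
            self._open[endpoint] = conn
        # Later requests skip the handshake and reuse the open connection.
        return conn.send(request)


pool = PersistentPool()
user_manager_1 = ("10.0.0.1", 8443)  # illustrative address
for n in range(1000):
    pool.request(user_manager_1, f"GET /users/{n}")
print(pool.handshakes)  # prints 1
```

In this model, a thousand requests to the same instance cost a single handshake; in the “normal” process each of those requests would open and close its own connection.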

Resilience

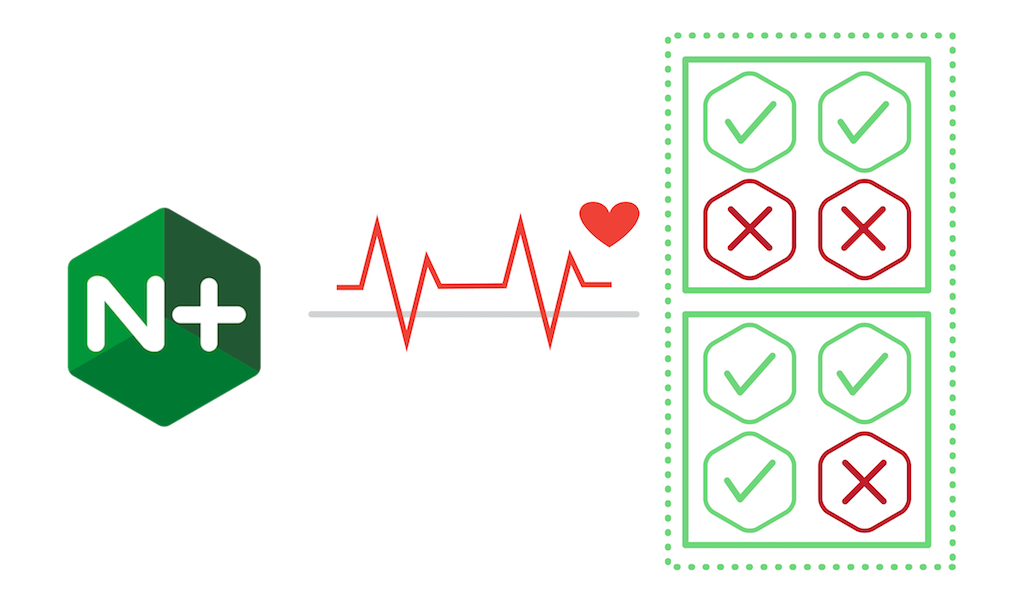

With the application health check capability in NGINX Plus, you can build the Circuit Breaker pattern into your microservices app. NGINX Plus can send a health check to a specific endpoint for each service instance. You can define a range of responses and have NGINX Plus evaluate them using its built‑in regular expression interpreter.

NGINX Plus stops sending traffic to unhealthy instances but allows requests that are in process to finish. It also offers a slow‑start mode for recovering service instances, so they aren’t overwhelmed with new traffic. If a service goes down entirely, NGINX Plus can serve “stale” cached data in response to requests in order to provide continuity of service even if the microservice is unavailable.

These resilience features make the entire app faster, more stable, and more secure.
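The health-check part of this behavior can be sketched in a few lines of Python. This is an illustration of the pattern only, under stated assumptions: the `HealthChecker` class, the `probe` callable (which returns the body of an instance's health endpoint), and the JSON status format are all hypothetical, and slow start and stale caching are not modeled.

```python
import re
from typing import Callable, Dict, List


class HealthChecker:
    """Marks instances up or down based on a probe's response body."""

    def __init__(self, probe: Callable[[str], str], healthy_pattern: str,
                 fails_to_open: int = 1):
        self._probe = probe                        # hypothetical health endpoint call
        self._pattern = re.compile(healthy_pattern)
        self._fails_to_open = fails_to_open        # consecutive failures before isolation
        self._failures: Dict[str, int] = {}

    def check(self, instance: str) -> bool:
        """Probe one instance; return True if it is still considered healthy."""
        try:
            ok = bool(self._pattern.search(self._probe(instance)))
        except OSError:
            ok = False  # an unreachable instance counts as a failed check
        self._failures[instance] = 0 if ok else self._failures.get(instance, 0) + 1
        return self._failures[instance] < self._fails_to_open

    def routable(self, instances: List[str]) -> List[str]:
        """Instances that should keep receiving new requests."""
        return [i for i in instances
                if self._failures.get(i, 0) < self._fails_to_open]
```

A passing check resets the failure count, so an instance that recovers returns to the pool on its next successful probe.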

Comparing the Fabric Model to the “Normal” Process

To sum up the differences, and highlight some of the advantages of the Fabric Model over the “normal” process, this table compares how each works for major app functions.

| Normal Process | Fabric Model | Comparison |
|---|---|---|
| Service discovery happens after request is made; wait for needed URL | Service discovery runs as background task; URL available instantly | Fabric Model is faster |
| Load balancing happens after request is made; primitive techniques | Health checks used for load balancing run in the background; advanced techniques | Fabric Model is faster, more flexible, and more advanced |
| New nine‑step SSL handshake for every service request and response | Persistent “mini‑VPN” with few handshakes | Fabric Model is much faster |
| Resilience poor; “sick” or “dead” services cause delays | Resilience built in; “sick” and “dead” services isolated proactively | Fabric Model is much more resilient |

Table 1. The Fabric Model is fast, flexible, advanced, and resilient

The difference between the Fabric Model and the “normal” process is strongest in the most‑repeated activity for any app: interprocess communication. In the “normal” process, every request requires a separate service discovery request, a load‑balancing check, and a full nine‑step SSL handshake. For the Fabric Model, service discovery and load balancing happen in the background, before a request is made.

In the Fabric Model, SSL handshakes are rare; they only occur the first time one service instance makes a request of another. In one recent test of an application using the Fabric Model, only 300 SSL handshakes were needed to establish interservice connections for 100,000 total transactions. That’s a 99.7% reduction in SSL handshakes, delivering a strong boost in application performance while maintaining secure interprocess communications.
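The 99.7% figure follows directly from the two numbers quoted, assuming the baseline is one handshake per transaction as in the “normal” process. A quick check in Python (the function name is ours, introduced for this example):

```python
def handshake_reduction(handshakes: int, transactions: int) -> float:
    """Percentage of transactions that did not need a new handshake."""
    return 100.0 * (1 - handshakes / transactions)


print(f"{handshake_reduction(300, 100_000):.1f}%")  # prints 99.7%
```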

Implementing the Fabric Model

With the Microservices Reference Architecture still in development, there are two approaches you can take to begin implementing the Fabric Model today:

- Implement NGINX Plus “in front of” your existing server architecture. You can begin to use it as a reverse proxy server, cache for static files, and more. (All three Models have this use of NGINX Plus in common.) Then wait for the public release of the Microservices Reference Architecture later this year to start implementing the Fabric Model.

- Contact NGINX Professional Services today. Our Professional Services team can help you assess your needs and begin implementation of the Fabric Model, even as it’s prepared for public release later this year.

Conclusion

The Fabric Model networking architecture for microservices is the most sophisticated and capable of the MRA models. NGINX Plus, acting as the reverse proxy server for the entire app and handling all ingress and egress traffic for each individual service, brings to life a network that connects service instances.

In the Fabric Model, stable SSL/TLS connections provide both speed and security. Service discovery, working with a service registry tool or custom code, and load balancing, in cooperation with a container management tool or custom code, are fast, capable, and configurable. Health checks per service instance make the system as a whole faster, more stable, and more secure.

NGINX Plus is key to the Fabric Model. To try NGINX Plus, start your free 30-day trial today or contact us to discuss your use cases.