In a previous blog post, we showed how real‑time APIs play a critical role in our lives. As companies seek to compete in the digital era, APIs become a critical IT and business resource. Architecting the right underlying infrastructure ensures not only that your APIs are stable and secure, but also that they qualify as real‑time APIs, able to process API calls end-to-end within 30 milliseconds (ms).

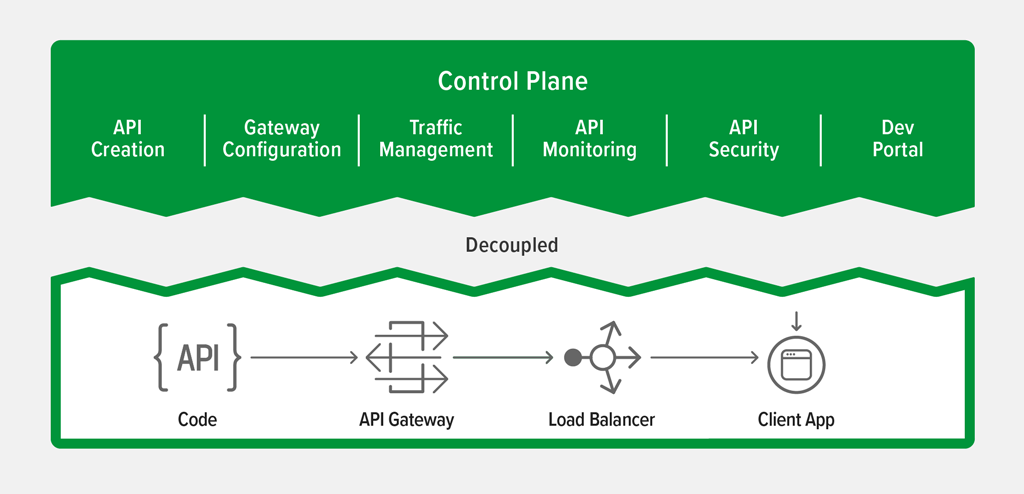

API architectures are broadly broken up into two components: the data plane, or API gateway, and the control plane, which includes policy and developer portal servers. A real‑time API architecture depends mostly on the API gateway, which acts as a proxy to process API traffic. It’s the critical link in the performance chain.

API gateways perform a variety of functions including authenticating API calls, routing requests to the right backends, applying rate limits to prevent overburdening your systems, and handling errors and exceptions. Once you have decided to implement real‑time APIs, what are the key characteristics of the API gateway architecture? How do you deploy API gateways?

This post addresses these questions, providing a real‑time API reference architecture based on our work with NGINX’s largest, most demanding customers. We cover all aspects of the API management solution but go deeper on the API gateway, which is responsible for ensuring that real‑time performance thresholds are met.

The Real-Time API Reference Architecture

Our reference architecture for real‑time APIs has six components:

- API gateway. A fast, lightweight data‑plane component that processes API traffic. This is the most critical component in the real‑time architecture.

- API policy server. A decoupled server that configures API gateways and supplies API lifecycle management policies.

- API developer portal. A decoupled web server that provides documentation for rapid onboarding for developers who use the API.

- API security service. A separate web application firewall (WAF) and fraud detection component which provides security beyond the basic security mechanisms built into the API gateway.

- API identity service. A separate service that sets authentication and authorization policies for identity and access management and integrates with the API gateway and policy servers.

- DevOps tooling. A separate set of tools to integrate API management into CI/CD and developer pipelines.

Of course, the architecture also involves elements such as the API consumer, the API endpoint, and infrastructure components like routers, switches, VMs, and containers. These are included in the reference architecture where needed, but we don’t discuss them in detail because they’re assumed to be common infrastructure in the enterprise. Instead, we focus on the six components above, which are needed to create a comprehensive real‑time API architecture.

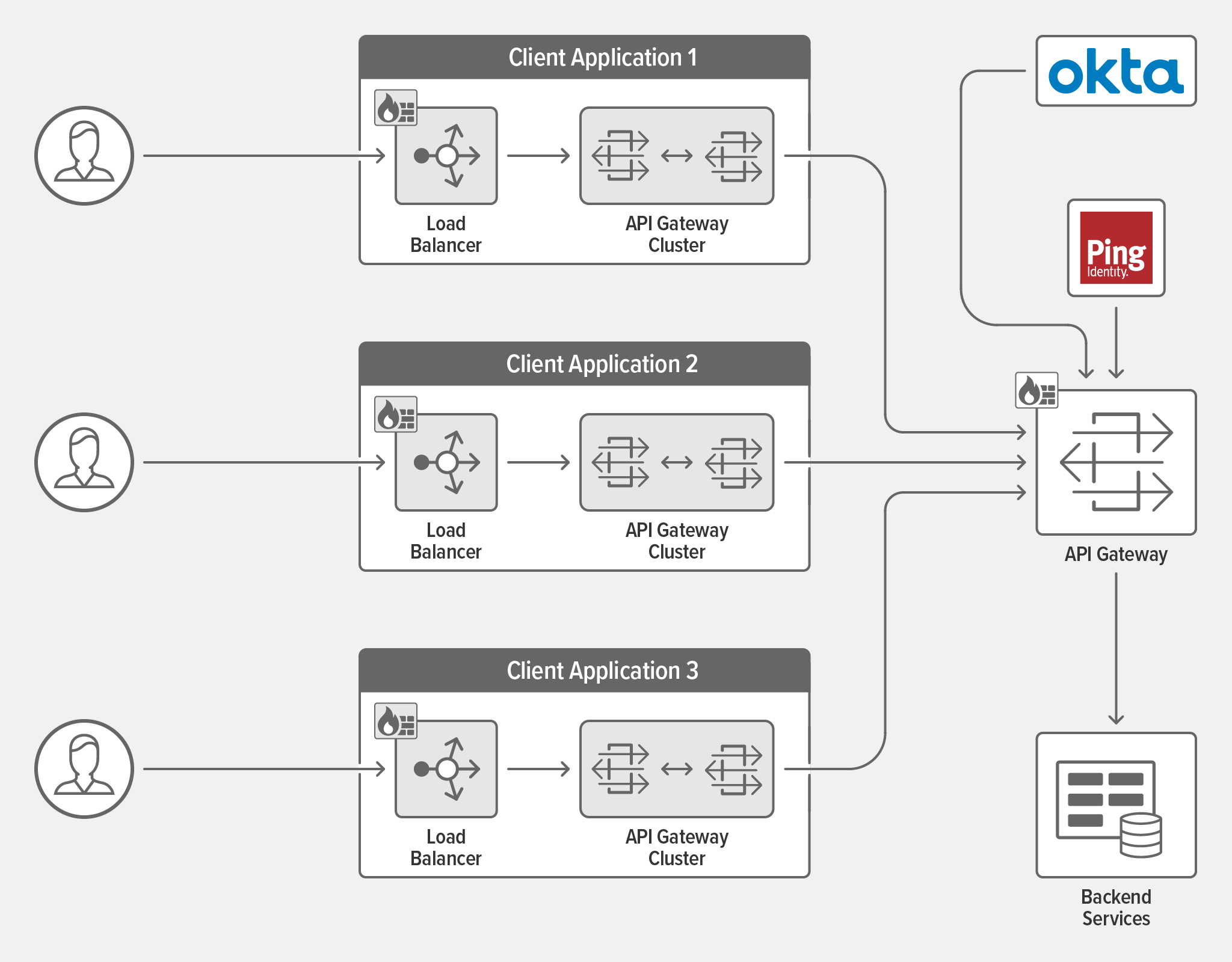

API Gateway

The functions of an API gateway include request routing, authentication, TLS termination, rate limiting, and service discovery when deployed in a microservices architecture. To ensure high availability, a cluster of API gateways needs to be deployed to manage traffic for each application that exposes APIs. To distribute API traffic efficiently, a load balancer is deployed in front of the cluster. The load balancer selects a gateway based on location (nearness to the client app) or capacity to process API calls. The gateway then forwards the request to the backend. For best performance, implement the best practices detailed in Real‑Time API Gateway Characteristics and Architectural Guidelines below.

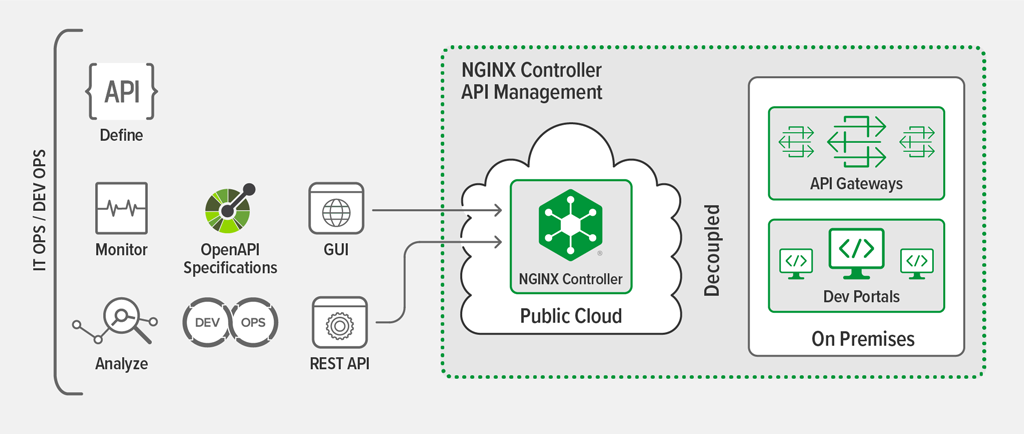
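As a rough illustration of the selection step described above, the sketch below prefers gateways in the client's region and, among those, picks the one with the most spare capacity. The `Gateway` fields, the region names, and the `pick_gateway` function are all hypothetical; a production load balancer would rely on health checks and live metrics rather than static counters.

```python
from dataclasses import dataclass


@dataclass
class Gateway:
    # All fields are illustrative assumptions, not a real load balancer data model.
    name: str
    region: str
    active_calls: int
    max_calls: int


def pick_gateway(gateways, client_region):
    """Prefer gateways near the client; among candidates, pick the most spare capacity."""
    # Skip gateways that are already at their (assumed) capacity limit.
    available = [g for g in gateways if g.active_calls < g.max_calls]
    if not available:
        raise RuntimeError("no gateway has spare capacity")
    # Location first: fall back to any region only if no local gateway is available.
    local = [g for g in available if g.region == client_region]
    candidates = local or available
    return max(candidates, key=lambda g: g.max_calls - g.active_calls)


cluster = [
    Gateway("gw-east-1", "us-east", active_calls=90, max_calls=100),
    Gateway("gw-east-2", "us-east", active_calls=20, max_calls=100),
    Gateway("gw-west-1", "us-west", active_calls=0, max_calls=100),
]
chosen = pick_gateway(cluster, "us-east")
```

Here the client in `us-east` lands on `gw-east-2`, even though `gw-west-1` is idle, because location is considered before capacity.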

API Policy Server

This is the control plane that allows developers and DevOps teams to define, publish, and secure APIs, and IT Operations to monitor and analyze APIs. This is where you configure request routing to backend services and fine‑grained access control policies that specify what’s allowed on a published API (read‑only versus read‑write, for example) and the kinds of users or client apps that are permitted to consume resources.

The policy server also configures the API gateways with all the APIs that need to be exposed for use. To achieve real‑time performance, this component must be completely decoupled from the API gateway data plane that mediates API traffic (see Figure 2). If the data plane relies on the policy server for authenticating, rate limiting, and routing each API call to the appropriate backend, then API calls initiated by the API consumer traverse through this control plane – and in some cases associated databases – resulting in extra latency due to the additional overhead.
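To make the decoupling concrete, here is a minimal sketch in which the control plane pushes a routing snapshot to the gateway out of band, and the request path only ever reads local memory. The class name `GatewayDataPlane`, its methods, and the route table are assumptions made for illustration; they do not describe how any particular product stores its configuration.

```python
class GatewayDataPlane:
    """Hypothetical gateway that serves requests from a locally held config snapshot."""

    def __init__(self):
        # (prefix, backend) pairs, longest prefix first.
        self._routes = ()

    def apply_config(self, routes):
        # Called by the policy server when APIs are published or changed,
        # never while an API call is being processed.
        self._routes = tuple(
            sorted(routes.items(), key=lambda item: len(item[0]), reverse=True)
        )

    def route(self, path):
        # Hot path: an in-memory scan with no call to the control plane or a database.
        for prefix, backend in self._routes:
            if path.startswith(prefix):
                return backend
        return None


gateway = GatewayDataPlane()
gateway.apply_config({"/orders": "orders-svc", "/orders/archive": "archive-svc"})
backend = gateway.route("/orders/archive/2020")
```

If the policy server becomes slow or unreachable, `route` keeps answering from the last snapshot it received, which is the property the paragraph above is asking for.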

API Developer Portal

This component enables developers who consume your APIs to onboard efficiently. A developer portal (or dev portal) provides a catalog of all the published APIs, documentation for each API, and sample code. To improve performance and availability, the developer portal is decoupled from the control plane and hosted separately on its own web server. A distributed developer portal enables multiple instances to be located across different clouds, geographies, or availability zones. This can improve performance for developers accessing it, but because it’s decoupled from the data plane, it does not affect the efficiency of API call processing.

API Security Service

API security most commonly entails an advanced web application firewall (WAF) to detect a variety of attacks. It must provide high‑confidence signatures and be able to prevent breaches due to malformed JSON, null requests, or requests that do not comply with the gRPC protocol. It needs to support advanced API protection including path enforcement, method enforcement, data type validation, and full schema validation. This component needs to protect both the cluster of gateways and the load balancer.
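The API-specific checks named above (path enforcement, method enforcement, and data type validation) can be sketched roughly as follows. The `SPEC` table, the `/orders` endpoint, and the status codes returned are assumptions chosen for the example; a real WAF would typically derive these rules from a full API schema and apply attack signatures as well.

```python
import json

# Hypothetical API definition: (method, path) -> required JSON fields and their types.
SPEC = {
    ("GET", "/orders"): None,
    ("POST", "/orders"): {"sku": str, "quantity": int},
}


def check_request(method, path, body=None):
    """Return (status, reason) after path, method, and data type enforcement."""
    if path not in {known_path for _, known_path in SPEC}:
        return 404, "unknown path"
    if (method, path) not in SPEC:
        return 405, "method not allowed"
    schema = SPEC[(method, path)]
    if schema is None:
        return 200, "ok"
    try:
        payload = json.loads(body)
    except (TypeError, ValueError):
        # Covers a missing body (None) as well as malformed JSON.
        return 400, "malformed JSON"
    if not isinstance(payload, dict) or set(payload) != set(schema):
        return 400, "unexpected or missing fields"
    for field, expected_type in schema.items():
        # Exact type match, so a JSON boolean is not accepted where an integer is expected.
        if type(payload[field]) is not expected_type:
            return 400, f"bad type for {field}"
    return 200, "ok"


result = check_request("POST", "/orders", '{"sku": "A1", "quantity": 2}')
```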

Many organizations also deploy API fraud detection, which involves looking deeper into the logic of the API call to see if it is malicious or abnormal. Fraud detection is often a separate function but may be tied into the API gateway or WAF layer for enforcement.

It’s important to note that API security almost inevitably introduces latency, which may result in API processing that exceeds the real‑time API performance threshold of 30ms. We encourage organizations to determine whether API security or absolute performance is more important. We include API security in the reference architecture to show how it differs from edge or perimeter security, which may not provide API‑specific protections.

API Identity Service

This component provides access and authorization policies that secure APIs and protect backend resources. Recommended best practice is to integrate with leading identity providers such as Okta and Ping Identity to ensure secure access to APIs. These solutions enable you to create and manage access policies that key off of end‑user and client application attributes.

For the purposes of this reference architecture, we are assuming such identity services are already in place and mention them only for completeness of the overall API architecture.

DevOps Tooling

A declarative API interface must be provided to accomplish all aspects of API lifecycle management – creation, publication, gateway configuration, and monitoring. This enables automation of API creation and gateway configuration changes. You can accelerate API release velocity by seamlessly integrating with an automation platform such as Ansible and a CI/CD toolkit such as Jenkins.
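One way to picture a declarative interface: the pipeline submits the desired set of API definitions, and the tooling works out what to create, update, or delete on the gateways. The `plan` function and the shape of the definitions below are hypothetical and only meant to show the idea; they are not the interface of Ansible, Jenkins, or any NGINX product.

```python
def plan(desired, actual):
    """Compare desired API definitions with what is deployed and list the changes needed."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(
            name for name in desired.keys() & actual.keys()
            if desired[name] != actual[name]
        ),
        "delete": sorted(actual.keys() - desired.keys()),
    }


# Desired state, as it might be checked into version control (illustrative values).
desired = {
    "orders": {"path": "/orders", "rate_limit": 100},
    "catalog": {"path": "/catalog", "rate_limit": 500},
}
# State currently deployed on the gateways (illustrative values).
actual = {
    "orders": {"path": "/orders", "rate_limit": 50},
    "legacy": {"path": "/v0", "rate_limit": 10},
}
changes = plan(desired, actual)
```

Because the input is a description of the end state rather than a list of steps, the same definition can be applied repeatedly from a CI/CD job without side effects once the gateways match it.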

Although this form of automation does not impact API call processing performance per se, it is critical to ensuring changes can be made quickly and as part of the development process. If a problem occurs that does impact performance – such as a DDoS attack or misconfigured API client – CI/CD integration ensures that you can quickly resolve the problem and reconfigure API gateways to restore performance to an acceptable level.

Real-Time API Gateway Characteristics and Architectural Guidelines

NGINX has worked with some of the largest organizations in the financial services, retail, entertainment, and software industries to build their API architectures. These customers have scaled to handle hundreds of billions of API calls per month, all with less than 30ms of latency. From our work with these customers we’ve distilled the following six API gateway best practices that underpin our real‑time API reference architecture.

Deploy High Availability API Gateways

In order to ensure rapid responses, first and foremost the API gateway needs to be operational. You must employ a cluster of API gateways to boost availability. A cluster of two or more API gateways improves reliability and resiliency of your APIs. There must be a mechanism for sharing operational state (such as rate limits) among all the gateways so that effective controls can be applied in a consistent manner.
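The sketch below shows why shared state matters for rate limiting: two gateways consult the same counters, so a client cannot exceed its limit by spreading calls across the cluster. `SharedCounters` is a stand-in (a plain dictionary) for whatever state-sharing mechanism the cluster provides, and the fixed-window algorithm and all names are assumptions made for the example.

```python
import time


class SharedCounters:
    """Stand-in for operational state replicated across all gateways in the cluster."""

    def __init__(self):
        self._counts = {}

    def increment(self, key):
        # Old windows are never purged here; a real store would expire them.
        self._counts[key] = self._counts.get(key, 0) + 1
        return self._counts[key]


class GatewayRateLimiter:
    """Fixed-window rate limiter that counts calls in the shared store."""

    def __init__(self, shared, limit, window_seconds, clock=time.monotonic):
        self.shared = shared
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock

    def allow(self, client_id):
        # All gateways derive the same window number from the clock.
        window = int(self.clock() // self.window_seconds)
        count = self.shared.increment((client_id, window))
        return count <= self.limit


now = [0.0]  # injectable clock so the example is deterministic
shared = SharedCounters()
gw_a = GatewayRateLimiter(shared, limit=3, window_seconds=1, clock=lambda: now[0])
gw_b = GatewayRateLimiter(shared, limit=3, window_seconds=1, clock=lambda: now[0])

# The client alternates between gateways; the fourth call is rejected cluster-wide.
results = [gw_a.allow("client-1"), gw_b.allow("client-1"),
           gw_a.allow("client-1"), gw_b.allow("client-1")]
```

With per-gateway counters instead, the same client would have been allowed up to three calls on each gateway, doubling its effective limit.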

Authenticate but Do Not Authorize at the API Gateway

Authentication is the process of verifying that a user or calling entity is who it claims to be. Authorization is the process of determining which privileges or access levels are granted to a user.

Authorization entails additional processing – typically using JSON Web Tokens (JWTs) – to determine whether a client is entitled to access a specific resource. For instance, an e‑commerce app might grant read‑only access to product and pricing information to all clients, but allow read‑write access only to select users. Because of this granularity, authorization is best performed by the backend service that processes the API call itself, because it has the necessary context about the request. With authorization delegated to the business‑logic layer of the backend, the gateway doesn’t have to perform lookups, resulting in very fast response times. API gateways are certainly capable of performing this function, but you potentially sacrifice real‑time performance.

Enable Dynamic Authentication

Pre‑provisioning authentication information (whether using API keys or JWTs) in the gateway minimizes additional lookups at runtime. Authentication is thus almost instantaneous.
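A minimal sketch of pre-provisioned API keys, assuming an in-memory store that can be updated at runtime: keys are loaded (or revoked) ahead of time, so authenticating a call is a single dictionary lookup with no external request. `KeyStore` and its methods are hypothetical names, and storing SHA-256 digests instead of raw keys is a design choice made for the example.

```python
import hashlib


class KeyStore:
    """Hypothetical in-memory store of API keys, provisioned ahead of request time."""

    def __init__(self):
        self._clients = {}  # SHA-256 digest of the key -> client ID

    @staticmethod
    def _digest(api_key):
        return hashlib.sha256(api_key.encode()).hexdigest()

    def provision(self, client_id, api_key):
        # Can be called while the gateway is serving traffic; no restart implied.
        self._clients[self._digest(api_key)] = client_id

    def revoke(self, api_key):
        self._clients.pop(self._digest(api_key), None)

    def authenticate(self, presented_key):
        # Hot path: one hash and one dictionary lookup, no network or database call.
        return self._clients.get(self._digest(presented_key))


store = KeyStore()
store.provision("mobile-app", "k-123")
client = store.authenticate("k-123")
```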

Fail Fast with Circuit Breakers

Implementing circuit breakers prevents cascading failures. Consider an example. Assume an API call is handled by a backend consisting of several microservices. One of the microservices, microservice A, performs database lookups. When a database lookup fails, microservice A takes a very long time to return an error. This adversely impacts the API response time for not only the current API call, but also subsequent API calls.

Circuit breakers address this issue. You set a limit on the number of failures that can occur within a specified amount of time. When the threshold is exceeded, the circuit trips and all further calls instantly result in an error. No client application or user is left hanging due to resource exhaustion.
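The behavior just described can be sketched as a small class: failures are counted within a sliding time window, and once the threshold is reached every further call fails immediately without touching the backend. The names, the cool-down period after which the circuit closes again, and the absence of a half-open state are assumptions of this sketch rather than anything prescribed above.

```python
import time
from collections import deque


class CircuitOpenError(Exception):
    """Raised immediately while the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold, window_seconds, reset_seconds,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.window_seconds = window_seconds
        self.reset_seconds = reset_seconds  # assumed cool-down before retrying
        self.clock = clock
        self._failures = deque()  # timestamps of recent failures
        self._opened_at = None

    def call(self, func, *args, **kwargs):
        now = self.clock()
        if self._opened_at is not None:
            if now - self._opened_at < self.reset_seconds:
                # Fail fast: the slow backend is not called at all.
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: close the circuit and start counting afresh.
            self._opened_at = None
            self._failures.clear()
        try:
            return func(*args, **kwargs)
        except Exception:
            self._record_failure(now)
            raise

    def _record_failure(self, now):
        self._failures.append(now)
        # Drop failures that fell out of the time window.
        while now - self._failures[0] > self.window_seconds:
            self._failures.popleft()
        if len(self._failures) >= self.failure_threshold:
            self._opened_at = now


def failing_lookup():
    # Stand-in for microservice A's failing database lookup.
    raise TimeoutError("database lookup failed")


now = [0.0]  # injectable clock so the example is deterministic
breaker = CircuitBreaker(failure_threshold=2, window_seconds=10, reset_seconds=30,
                         clock=lambda: now[0])
for _ in range(2):
    try:
        breaker.call(failing_lookup)
    except TimeoutError:
        pass

try:
    breaker.call(failing_lookup)
    tripped = False
except CircuitOpenError:
    tripped = True
```

After two failures inside the window, the third call raises `CircuitOpenError` at once instead of waiting on the database, which is what keeps clients from being left hanging.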

Do Not Transform at the API Gateway Layer

Data transformation, such as translating an XML‑formatted request payload to JSON, tends to be computationally intensive. Assign this work to another service. Performing transformations at the gateway adds significant latency to API calls.

Use gRPC If Possible

gRPC is a modern open source remote procedure call framework introduced by Google. It is gaining popularity because of its widespread language support and simple design. gRPC relies on protocol buffers which, according to Google, are a “language‑neutral, platform‑neutral, extensible mechanism for serializing structured data.” Because gRPC uses HTTP/2 as its transport protocol, it inherits the benefits of HTTP/2, such as header compression and multiplexed requests over TCP connections. Multiplexing allows the client and server to send multiple requests and responses in parallel over a single TCP connection. HTTP/2 also defines server push, which allows a server to pre‑emptively push resources to a client, anticipating that the client may soon request them. This reduces round‑trip latency because resources are sent before they are requested. All these features result in faster response to the client app and efficient network utilization.

How Can NGINX Help?

NGINX Plus is built for delivering APIs in real time. It supports:

- High availability clustering that allows sharing of state information such as rate limits across all instances in a cluster.

- Authentication using both API keys and JWTs.

- In‑memory SSL/TLS certificate storage in the NGINX Plus key‑value store. This technique can be used to pre‑provision authentication information such as API keys or JWTs.

- gRPC.

A high‑performance ethos is a defining feature of NGINX’s API management solution as well. Unlike traditional API management solutions, the data plane (NGINX Plus as the API gateway) has no runtime dependency on the control plane (the NGINX Controller API Management Module). A tightly coupled control and data plane adds significant latency as the API call must traverse databases, modules, and scripting. Decoupling the data and control planes reduces complexity and maximizes performance by reducing the average response time to serve an API call.

The API gateways and the load balancer can be secured by NGINX App Protect – a DevOps‑friendly WAF that provides enterprise‑grade security. Because NGINX Plus is an all-in-one load balancer, reverse proxy, and API gateway, it ensures high availability and high performance while reducing complexity and tool sprawl.

Can you serve APIs in real time? We’d love to hear from you in the comments below. Or you can contact our API experts to request a free assessment of your API’s performance.