In Load Balancing with NGINX and NGINX Plus, Part 1, we set up a simple HTTP proxy to load balance traffic across several web servers. In this article, we’ll look at additional features, some of them available only in NGINX Plus: performance optimization with keepalives, health checks, session persistence, redirects, and content rewriting.

For details of the load‑balancing features in NGINX and NGINX Plus, check out the NGINX Plus Admin Guide.

Editor – NGINX Plus Release 5 and later can also load balance TCP-based applications. TCP load balancing was significantly extended in Release 6 by the addition of health checks, dynamic reconfiguration, SSL termination, and more. In NGINX Plus Release 7 and later, the TCP load balancer has full feature parity with the HTTP load balancer. Support for UDP load balancing was introduced in Release 9.

You configure TCP and UDP load balancing in the stream context instead of the http context. The available directives and parameters differ somewhat because of inherent differences between HTTP and TCP/UDP; for details, see the documentation for the HTTP and TCP/UDP Upstream modules.
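To illustrate the difference, the sketch below load balances one TCP service and one UDP service in the stream context. The upstream names, hostnames, and ports (a database on port 3306 and DNS on port 53) are hypothetical. Unlike in the http context, proxy_pass here takes the upstream group name without an http:// prefix, every upstream server needs an explicit port, and the udp parameter on listen selects UDP. The stream block sits at the top level of nginx.conf, alongside the http block rather than inside it.

```nginx
stream {
    # TCP load balancing (hypothetical database servers)
    upstream db_backend {
        least_conn;
        server db1.example.com:3306;
        server db2.example.com:3306;
    }

    server {
        listen 3306;
        proxy_pass db_backend;
    }

    # UDP load balancing (hypothetical DNS servers)
    upstream dns_backend {
        server dns1.example.com:53;
        server dns2.example.com:53;
    }

    server {
        listen 53 udp;
        proxy_pass dns_backend;
    }
}
```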

A Quick Review

To review, this is the configuration we built in the previous article:

```nginx
server {
    listen 80;

    location / {
        proxy_pass http://backend;

        # Rewrite the 'Host' header to the value in the client request,
        # or primary server name
        proxy_set_header Host $host;

        # Alternatively, put the value in the config:
        # proxy_set_header Host www.example.com;
    }
}

upstream backend {
    zone backend 64k; # Use NGINX Plus' shared memory
    least_conn;

    server webserver1 weight=1;
    server webserver2 weight=4;
}
```

In this article, we’ll look at a few simple ways to configure NGINX and NGINX Plus that improve the effectiveness of load balancing.

HTTP Keepalives

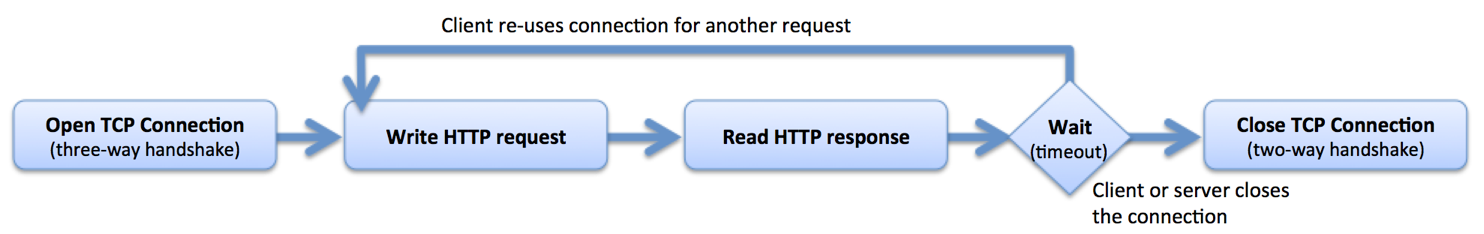

Enabling HTTP keepalives between NGINX or NGINX Plus and the upstream servers improves performance (by reducing latency) and reduces the likelihood that NGINX runs out of ephemeral ports.

The HTTP protocol uses underlying TCP connections to transmit HTTP requests and receive HTTP responses. HTTP keepalive connections allow for the reuse of these TCP connections, thus avoiding the overhead of creating and destroying a connection for each request:

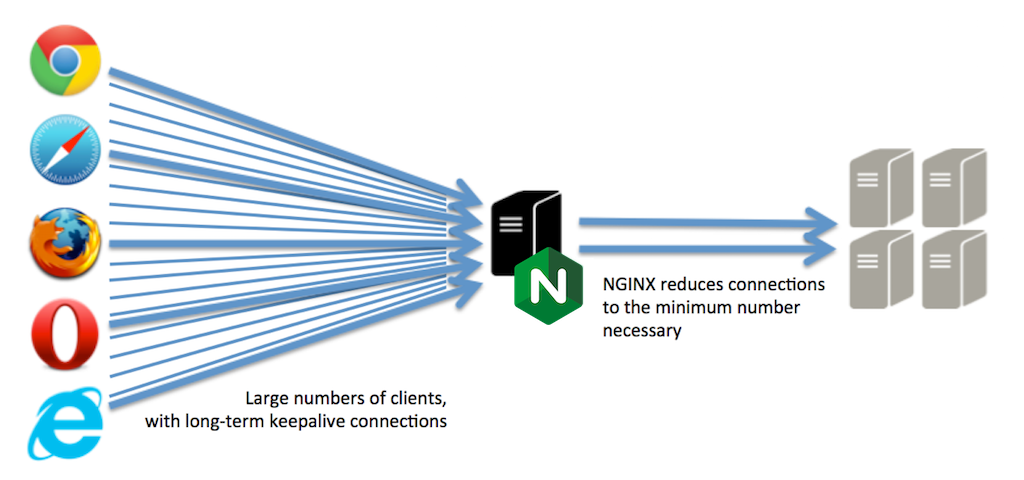

NGINX is a full proxy: it manages connections from clients (frontend keepalive connections) and connections to servers (upstream keepalive connections) independently.
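On the client side, keepalive behavior is controlled by directives such as keepalive_timeout and keepalive_requests (valid in the http, server, and location contexts). These settings apply only to client connections and do not affect connections to the upstream servers. A minimal sketch with illustrative values, not recommendations, assuming the backend upstream group is defined elsewhere in the configuration:

```nginx
server {
    listen 80;

    # Frontend keepalives: these apply to idle client connections only;
    # they do not affect connections to the upstream servers
    keepalive_timeout 65s;    # close an idle client connection after 65 seconds
    keepalive_requests 1000;  # maximum requests served over one client connection

    location / {
        proxy_pass http://backend;
    }
}
```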

NGINX maintains a “cache” of keepalive connections – a set of idle keepalive connections to the upstream servers – and when it needs to forward a request to an upstream, it uses an already established keepalive connection from the cache rather than creating a new TCP connection. This reduces the latency for transactions between NGINX and the upstream servers and reduces the rate at which ephemeral ports are used, so NGINX is able to absorb and load balance large volumes of traffic. During a large traffic spike the cache can be exhausted, and in that case NGINX establishes new TCP connections to the upstream servers.

With other load‑balancing tools, this technique is sometimes called multiplexing, connection pooling, connection reuse, or OneConnect.

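You configure the keepalive connection cache with three directives: proxy_http_version 1.1 (NGINX uses HTTP/1.0 for upstream connections by default), proxy_set_header Connection "" (NGINX otherwise sends Connection: close to the upstream server), and keepalive in the upstream block: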

```nginx
server {
    listen 80;

    location / {
        proxy_pass http://backend;
        proxy_http_version 1.1;
        proxy_set_header Connection "";
    }
}

upstream backend {
    server webserver1;
    server webserver2;

    # Maintain up to 20 idle keepalive connections to the upstream
    # servers in the cache of each worker process
    keepalive 20;
}
```

Health Checks

Enabling health checks increases the reliability of your load‑balanced service, reduces the likelihood of end users seeing error messages, and can also facilitate common maintenance operations.

The health check feature in NGINX Plus can be used to detect the failure of upstream servers. NGINX Plus probes each server using “synthetic transactions,” and checks the response against the parameters you configure on the health_check directive (and, when you include the match parameter, the associated match configuration block):

```nginx
server {
    listen 80;

    location / {
        proxy_pass http://backend;
        health_check interval=2s fails=1 passes=5 uri=/test.php match=statusok;

        # The health checks inherit other proxy settings
        proxy_set_header Host www.foo.com;
    }
}

match statusok {
    # Used for /test.php health check
    status 200;
    header Content-Type = text/html;
    body ~ "Server[0-9]+ is alive";
}
```

The health check inherits some parameters from its parent location block. This can cause problems if you use runtime variables in your configuration. For example, the following configuration works for real HTTP traffic because it extracts the value of the Host header from the client request. It probably does not work for the synthetic transactions that the health check uses, because there is no client request to supply a Host header, so the synthetic request may not carry the Host value your upstream servers expect.

```nginx
location / {
    proxy_pass http://backend;

    # This health check might not work...
    health_check interval=2s fails=1 passes=5 uri=/test.php match=statusok;

    # Extract the 'Host' header from the request
    proxy_set_header Host $host;
}
```

One good solution is to create a dummy location block that statically defines all of the parameters used by the health check transaction:

```nginx
location /internal-health-check1 {
    internal; # Prevent external requests from matching this location block
    proxy_pass http://backend;
    health_check interval=2s fails=1 passes=5 uri=/test.php match=statusok;

    # Explicitly set request parameters; don't use runtime variables
    proxy_set_header Host www.example.com;
}
```

For more information, check out the NGINX Plus Admin Guide.
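The health check examples above omit the upstream block, but active health checks have one requirement worth making explicit: NGINX Plus keeps health state in shared memory, so the upstream group must declare a zone, as the configuration from Part 1 does. The minimal self‑contained sketch below combines the pieces, assuming the webserver1 and webserver2 hostnames and the 64k zone size used earlier.

```nginx
upstream backend {
    # Required for active health checks: store the group in shared memory
    zone backend 64k;

    server webserver1;
    server webserver2;
}

match statusok {
    status 200;
    header Content-Type = text/html;
    body ~ "Server[0-9]+ is alive";
}

server {
    listen 80;

    # Real client traffic can safely use runtime variables
    location / {
        proxy_pass http://backend;
        proxy_set_header Host $host;
    }

    # Dedicated location for the health check, with static parameters only
    location /internal-health-check1 {
        internal;
        proxy_pass http://backend;
        health_check interval=2s fails=1 passes=5 uri=/test.php match=statusok;
        proxy_set_header Host www.example.com;
    }
}
```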

Session Persistence

With session persistence, applications that cannot be deployed in a cluster can be load balanced and scaled reliably. Applications that store and replicate session state operate more efficiently, and end‑user performance improves.

Certain applications sometimes store state information on the upstream servers, for example when a user places an item in a virtual shopping cart or edits an uploaded image. In these cases, you might want to direct all subsequent requests from that user to the same server.

Session persistence specifies where a request must be routed to, whereas load balancing gives NGINX the freedom to select the optimal upstream server. The two processes can coexist using NGINX Plus’ session‑persistence capability:

```
if the request matches a session persistence rule
    then use the target upstream server
    else apply the load‑balancing algorithm to select the upstream server
```

If the session persistence decision fails because the target server is not available, then NGINX Plus makes a load‑balancing decision.

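The simplest session persistence method is the “sticky cookie” approach, where NGINX Plus inserts a cookie in the first response that identifies the sticky upstream server. You include the sticky directive in the upstream block; in this example the cookie is named srv_id and expires after one hour: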

```nginx
sticky cookie srv_id expires=1h domain=.example.com path=/;
```

In the alternative “sticky route” method, NGINX Plus selects the upstream server based on request parameters such as the JSESSIONID cookie:

```nginx
upstream backend {
    server backend1.example.com route=a;
    server backend2.example.com route=b;

    # select first non-empty variable; it should contain either 'a' or 'b'
    sticky route $route_cookie $route_uri;
}
```

For more information, check out the NGINX Plus Admin Guide.
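The sticky route example relies on the variables $route_cookie and $route_uri, which must be defined elsewhere in the configuration. One way to populate them is with map blocks in the http context. The sketch below assumes, as with Tomcat‑style session IDs, that the route name is appended to the JSESSIONID value after a period (for example 0123ABCD.a); adjust the regular expressions to match however your application actually encodes the route.

```nginx
# Assumption: the JSESSIONID cookie looks like "0123ABCD.a";
# the named capture "route" receives the part after the last period
map $cookie_jsessionid $route_cookie {
    ~.+\.(?P<route>\w+)$ $route;
}

# Assumption: the session ID can also appear in the URI as ";jsessionid=0123ABCD.a"
map $request_uri $route_uri {
    ~jsessionid=.+\.(?P<route>\w+)$ $route;
}

upstream backend {
    server backend1.example.com route=a;
    server backend2.example.com route=b;

    # Cookie value takes precedence; fall back to the URI
    sticky route $route_cookie $route_uri;
}
```

If neither variable yields a value that matches a server’s route parameter, NGINX Plus falls back to the load‑balancing algorithm, as described earlier.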

Rewriting HTTP Redirects

Rewrite HTTP redirects if some redirects are broken, and particularly if you find you are redirected from the proxy to the real upstream server.

When you proxy to an upstream server, the server publishes the application on a local address, but you access the application through a different address – the address of the proxy. These addresses are typically domain names, and problems can arise if the server and the proxy use different domains.

For example, in a testing environment, you might address your proxy directly (by IP address) or as localhost. However, the upstream server might listen on a real domain name (such as www.nginx.com). When the upstream server issues a redirect message (using a 3xx status and Location header, or using a Refresh header), the message might include the server’s real domain.

NGINX tries to intercept and correct the most common instances of this problem. If you need full control to force particular rewrites, use the proxy_redirect directive as follows:

```nginx
proxy_redirect http://staging.mysite.com/ http://$host/;
```

Rewriting HTTP Responses

Sometimes you need to rewrite the content in an HTTP response. Perhaps, as in the example above, the response contains absolute links that refer to a server other than the proxy.

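You can use the sub_filter directive to define the rewrite to apply. It is provided by the ngx_http_sub_module module, which is not built by default in NGINX Open Source (enable it with the --with-http_sub_module configure option). Setting sub_filter_once off replaces every occurrence of the string rather than only the first: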

```nginx
sub_filter /blog/ /blog-staging/;
sub_filter_once off;
```

One very common gotcha is the use of HTTP compression. If the client signals that it can accept compressed data, and the server then compresses the response, NGINX cannot inspect and modify the response. The simplest measure is to remove the Accept-Encoding header from the client’s request by setting it to the empty string (""):

```nginx
proxy_set_header Accept-Encoding "";
```

A Complete Example

Here’s a template for a load‑balancing configuration that employs all of the techniques discussed in this article. The features that require NGINX Plus are the health_check directive and its match block, session persistence with sticky, and the api directive.

[Editor – The following configuration has been updated to use the NGINX Plus API for live activity monitoring and dynamic configuration of upstream groups, replacing the separate modules that were originally used.]

```nginx
server {
    listen 80;

    location / {
        proxy_pass http://backend;
        proxy_http_version 1.1;
        proxy_set_header Connection "";
        proxy_set_header Accept-Encoding "";
        proxy_redirect http://staging.example.com/ http://$host/;

        # Rewrite the Host header to the value in the client request
        proxy_set_header Host $host;

        # Replace any references inline to staging.example.com
        sub_filter http://staging.example.com/ /;
        sub_filter_once off;
    }

    location /internal-health-check1 {
        internal; # Prevent external requests from matching this location block
        proxy_pass http://backend;
        health_check interval=2s fails=1 passes=5 uri=/test.php match=statusok;

        # Explicitly set request parameters; don't use runtime variables
        proxy_set_header Host www.example.com;
    }
}

upstream backend {
    zone backend 64k; # Use NGINX Plus' shared memory
    least_conn;
    keepalive 20;

    # Apply session persistence for this upstream group
    sticky cookie srv_id expires=1h domain=.example.com path=/servlet;

    server webserver1 weight=1;
    server webserver2 weight=4;
}

match statusok {
    # Used for /test.php health check
    status 200;
    header Content-Type = text/html;
    body ~ "Server[0-9]+ is alive";
}

server {
    listen 8080;
    root /usr/share/nginx/html;

    location = /api {
        api write=on;     # Live activity monitoring and
                          # dynamic configuration of upstream groups
        allow 127.0.0.1;  # permit access from localhost
        deny all;         # deny access from everywhere else
    }
}
```

Try out all the great load‑balancing features in NGINX Plus for yourself – start your free 30-day trial today or contact us to discuss your use cases.