Earlier this year we benchmarked the performance of NGINX Plus and created a sizing guide for deploying it on bare metal servers. NGINX Open Source and NGINX Plus are widely used for Layer 7 load balancing, also known as application load balancing.

[Editor – We update the sizing guide periodically to reflect changes in both NGINX Plus capabilities and hardware costs and performance. The link above is always to the latest guide.

For information about the performance of NGINX and NGINX Plus as a web server, see Testing the Performance of NGINX and NGINX Plus Web Servers.]

The sizing guide outlines the performance you can expect to achieve with NGINX Plus running on various server sizes, along with the estimated costs for the hardware. You can use the sizing guide to spec out NGINX Plus deployments appropriately and to avoid, as much as possible, both over‑provisioning (which costs you money immediately) and under‑provisioning (which can cause performance problems and cost you money in the long run).

We’ve had a lot of interest in the sizing guide, along with questions about the methodology we used, from customers and others interested in reproducing our results. This blog post provides an overview of the testing we performed to achieve the results presented in the sizing guide. It covers the topology we used, the tests we ran, and how we found the prices listed in the sizing guide.

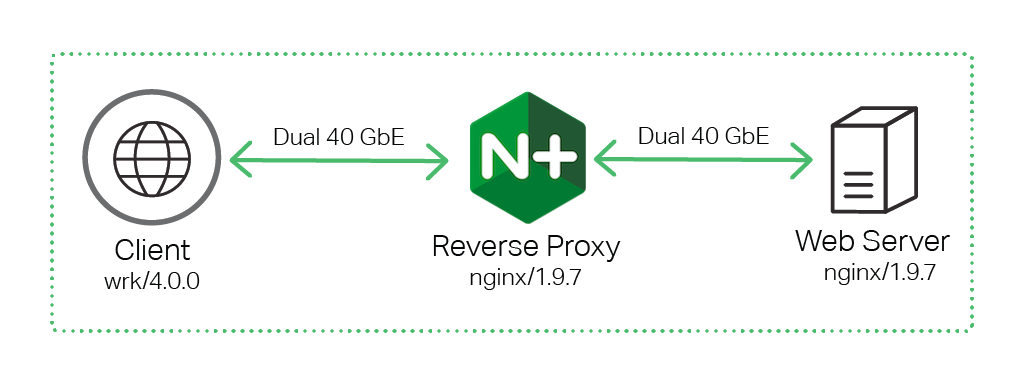

Note: Although we developed the sizing guide specifically for NGINX Plus, we used NGINX Open Source for testing so that anyone can reproduce our tests without an NGINX Plus subscription. The tests do not exercise any of the enhanced features of NGINX Plus, so results for NGINX Open Source and NGINX Plus are the same. NGINX Open Source version 1.9.7 roughly corresponds to NGINX Plus Release 7.

However, to better distinguish the reverse proxy from the backend web server in the testing topology, we refer to NGINX Plus for the former and NGINX (Open Source) for the latter.

Topology

All tests were done using three separate machines connected together with dual 40 GbE links in a simple, flat Layer 2 network.

To generate traffic from the client machine, we used wrk, a performance testing tool similar to ab (ApacheBench). As shown in the diagram, all traffic was directed to the NGINX Plus Reverse Proxy, which forwarded the connections to the NGINX Open Source Web Server backend.

Hardware Used

We used the following hardware for the testing.

| Machine | CPU | Network | Memory |
|---|---|---|---|
| Client | 2x Intel(R) Xeon(R) CPU E5‑2699 v3 @ 2.30GHz, 36 real (or 72 HT) cores | 2x Intel XL710 40GbE QSFP+ (rev 01) | 16 GB |
| Reverse Proxy | 2x Intel(R) Xeon(R) CPU E5‑2699 v3 @ 2.30GHz, 36 real (or 72 HT) cores | 4x Intel XL710 40GbE QSFP+ (rev 01) | 16 GB |
| Web Server | 2x Intel(R) Xeon(R) CPU E5‑2699 v3 @ 2.30GHz, 36 real (or 72 HT) cores | 2x Intel XL710 40GbE QSFP+ (rev 01) | 16 GB |

Software Used

We used the following software for the testing:

- Version 4.0.0 of wrk running on the client machine generated the traffic that NGINX proxied. We installed it according to these instructions.

- Version 1.9.7 of NGINX Open Source ran on the Reverse Proxy and Web Server machines. We installed it from the official repository at nginx.org according to these instructions.

- Ubuntu Linux 14.04.1 ran on all three machines.

Testing Methodology

We tested the performance of the NGINX Plus reverse proxy server with different numbers of CPUs. One NGINX Plus worker process consumes a single CPU, so to measure the performance of different numbers of CPUs we varied the number of worker processes, repeating the tests with two worker processes, four, eight, and so on. For an overview of NGINX architecture, please see our blog.

Note: To set the number of worker processes manually, use the worker_processes directive. Setting it to auto, as in the configurations in the Appendix, tells NGINX Plus to detect the number of CPUs and run one worker process per CPU; if the directive is omitted, NGINX runs a single worker process.
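
As a rough illustration of how the worker count could be varied between runs, here is a minimal sketch. It assumes GNU sed, a configuration file at /etc/nginx/nginx.conf, and a single top-level worker_processes line; the helper name set_worker_processes is hypothetical, and our actual test runs may have been driven differently.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the value of the worker_processes directive.
# Usage: set_worker_processes <config-file> <count|auto>
set_worker_processes() {
    local conf="$1" count="$2"
    # Replace whatever value follows "worker_processes" with the requested one.
    sed -i -E "s/^([[:space:]]*worker_processes)[[:space:]]+[^;]+;/\1 ${count};/" "$conf"
}

# Example loop, left commented out because it needs root and a running NGINX:
# for n in 2 4 8 16; do
#     set_worker_processes /etc/nginx/nginx.conf "$n"
#     nginx -t && nginx -s reload
#     # ... run the wrk tests for this worker count ...
# done
```

Running nginx -t before the reload checks the edited file for syntax errors, so a bad substitution does not take down the proxy mid-test.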

Performance Metrics

We measured the following metrics:

- Requests per second (RPS) – Measures the ability to process HTTP requests. In our tests, each client sends requests for a 1 KB file over a keepalive connection. The Reverse Proxy processes each request and forwards it to the Web Server over another keepalive connection.

- SSL/TLS transactions per second (TPS) – Measures the ability to process new SSL/TLS connections. In our tests, each client sends a series of HTTPS requests, each on a new connection. The Reverse Proxy parses the requests and forwards them to the Web Server over an established keepalive connection. The Web Server sends back a 0‑byte response for each request.

- Throughput – Measures the throughput that NGINX Plus can sustain when serving 1 MB files over HTTP.
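
The three metrics rely on static files of 0 bytes, 1 KB, and 1 MB on the Web Server (the Appendix notes that the files were generated with dd). The following is a minimal sketch of creating all three in one step. The helper name make_test_files is hypothetical, and the 1 MB size convention (1,000,000 bytes) is an assumption; the block sizes are written in plain bytes so the commands do not depend on GNU-specific suffixes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the three static test files in a directory.
# Usage: make_test_files <directory>
make_test_files() {
    local dir="$1"
    mkdir -p "$dir"
    # 0-byte file for the SSL/TLS TPS test (count=0 copies no blocks).
    dd if=/dev/zero of="$dir/0kb.bin" bs=1 count=0 2>/dev/null
    # 1 KB file for the RPS test (1000 bytes, the size GNU dd's bs=1KB yields).
    dd if=/dev/zero of="$dir/1kb.bin" bs=1000 count=1 2>/dev/null
    # 1 MB file for the throughput test (assumed to mean 1000 x 1000 bytes).
    dd if=/dev/zero of="$dir/1mb.bin" bs=1000 count=1000 2>/dev/null
}
```

For example, make_test_files /var/www/html would place the files in the document root used by the Web Server configuration.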

Running Tests

To generate all client traffic, we used wrk with the following options:

- The -c option specifies the number of TCP connections to create. For our testing, we set this to 50 connections.

- The -d option specifies how long to generate traffic. We ran our tests for 3 minutes each.

- The -t option specifies the number of threads to create. We specified a single thread.

To fully exercise each CPU we used taskset, which can pin a single wrk process to a CPU. This method yields more consistent results than increasing the number of wrk threads.

Requests Per Second

To measure requests per second (RPS), we ran the following script:

    for i in `seq 0 $(($(getconf _NPROCESSORS_ONLN) - 1))`; do
        taskset -c $i wrk -t 1 -c 50 -d 180s http://Reverse-Proxy-Server-IP-address/1kb.bin &
    done

This test spawned one copy of wrk per CPU, 36 total for our client machine. Each copy created 50 TCP connections and made continuous requests for a 1 KB file over them for 3 minutes (180 seconds).
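
Because every wrk copy prints its own report, the overall figure is the sum of the per-copy results. The sketch below adds up the "Requests/sec:" lines from several reports. It assumes each copy's output was saved to its own file (for example by appending > wrk-$i.log to the wrk command, which the script above does not do); the helper name sum_rps and the log file names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add up the "Requests/sec:" figures from wrk reports.
# Usage: sum_rps <wrk-output-file>...
sum_rps() {
    # The second field of each matching line is that copy's requests per second.
    awk '$1 == "Requests/sec:" { total += $2 } END { printf "%.2f\n", total }' "$@"
}
```

Once all background copies have finished (for instance after a wait), sum_rps wrk-*.log prints the combined requests per second.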

SSL/TLS Transactions Per Second

To measure SSL/TLS transactions per second (TPS), we ran the following script:

    for i in `seq 0 $(($(getconf _NPROCESSORS_ONLN) - 1))`; do
        taskset -c $i wrk -t 1 -c 50 -d 180s -H 'Connection: close' https://Reverse-Proxy-Server-IP-address/0kb.bin &
    done

This test uses the same values for -c, -d, and -t as the previous test, but differs in two notable ways because the focus is on processing of SSL/TLS connections:

- The client opens and closes a connection for each request (the -H option sets the Connection: close HTTP header).

- The requested file is 0 (zero) bytes instead of 1 KB in size.

Throughput

To measure throughput, we ran the following script:

    for i in `seq 0 $(($(getconf _NPROCESSORS_ONLN) - 1))`; do
        taskset -c $i wrk -t 1 -c 50 -d 180s http://Reverse-Proxy-Server-IP-address/1mb.bin &
    done

The only difference from the first test is the larger file size of 1 MB. We found that using an even larger file size (10 MB) did not increase overall throughput.
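
To turn the per-copy reports into a single throughput number, the "Transfer/sec:" lines can be summed and converted to gigabits per second. The following is a minimal sketch under two assumptions: each copy's output was saved to its own file (the script above does not do this), and wrk's size suffixes are binary multiples (KB = 1024 bytes, MB = 1024² bytes, GB = 1024³ bytes). The helper name sum_gbps is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sum "Transfer/sec:" figures from wrk reports, in Gbit/s.
# Assumes wrk's suffixes are binary multiples (KB = 1024 bytes, and so on).
# Usage: sum_gbps <wrk-output-file>...
sum_gbps() {
    awk '
        $1 == "Transfer/sec:" {
            value = $2 + 0              # numeric prefix, e.g. 1.5 from "1.50GB"
            unit = $2
            gsub(/[0-9.]/, "", unit)    # remaining suffix, e.g. "GB"
            mult = 1                    # plain bytes ("B") by default
            if (unit == "KB") mult = 1024
            else if (unit == "MB") mult = 1024 ^ 2
            else if (unit == "GB") mult = 1024 ^ 3
            bytes += value * mult
        }
        END { printf "%.2f\n", bytes * 8 / 1e9 }
    ' "$@"
}
```

The result is the payload rate reported by wrk, so it excludes protocol overhead on the wire.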

Multiple Network Cards

In our testing we used multiple network cards. The following slightly modified script ensured traffic was distributed evenly between the two cards:

    for i in `seq 0 $((($(getconf _NPROCESSORS_ONLN) - 1)/2))`; do
        n=`echo $(($i+number-of-CPUs/2))`;
        taskset -c $i ./wrk -t 1 -c 50 -d 180s http://Reverse-Proxy-Server-IP-address-1/1kb.bin &
        taskset -c $n ./wrk -t 1 -c 50 -d 180s http://Reverse-Proxy-Server-IP-address-2/1kb.bin &
    done

Pricing

The final step, once we had performance numbers with different numbers of cores, was to determine the cost of servers with the corresponding specs. We used prices for Dell PowerEdge servers with specs similar to those of the Intel hardware we used in our testing. The appendix below has a full bill of materials for each server, along with the full NGINX configuration for both the Reverse Proxy and the Web Server.

Appendix

Dell Hardware Configurations

The prices in the sizing guide are for the following Dell hardware configurations.

Note: Server models with the indicated specs and prices were available at the time we ran our tests, but are subject to change in the future.

| Server model | Specs | Price |
|---|---|---|
| Dell PowerEdge R230 | CPU: 2 core Intel Core i3 6100 3.7GHz, 2C/4T; RAM: 4 GB; HDD: 500 GB; NIC: Intel X710 2×10 GbE | $1,200 |
| | CPU: Intel® Xeon® E3‑1220 v5 3.0GHz, 4C/8T; RAM: 4 GB; HDD: 500 GB; NIC: Intel XL710 2×40 GbE | $1,400 |
| Dell PowerEdge R430 | CPU: Intel® Xeon® E5‑2630 v3 2.4GHz, 8C/16T; RAM: 4 GB; HDD: 500 GB; NIC: Intel XL710 2×40 GbE | $2,200 |
| | CPU: 2x Intel® Xeon® E5‑2630 v3 2.4GHz, 8C/16T; RAM: 8 GB; HDD: 500 GB; NIC: Intel XL710 2×40 GbE | $3,000 |
| Dell PowerEdge R630 | CPU: 2x Intel® Xeon® E5‑2697A v4 2.6GHz, 16C/32T; RAM: 8 GB; HDD: 500 GB; NIC: Intel XL710 2×40 GbE | $8,000 |
| | CPU: 2x Intel® Xeon® E5‑2699 v3 2.3GHz, 18C/36T; RAM: 8 GB; HDD: 500 GB; NIC: Intel XL710 2×40 GbE | $11,000 |

NGINX Plus Reverse Proxy Configuration

The following configuration was used on the NGINX Plus Reverse Proxy. Note the two sets of keepalive_timeout and keepalive_requests directives:

- For SSL/TLS TPS tests, we set the values for both directives so that connections stayed open for just one request, as the goal of that test is to see how many SSL/TLS connections per second NGINX Plus can process. SSL/TLS session caching was also disabled.

- For the RPS tests, the directives were tuned to keep connections alive for as long as possible.
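
Instead of commenting and uncommenting the two sets of directives by hand, one option is to keep them in a small file that the http block pulls in with the include directive. The sketch below writes that file for either mode. The helper name write_keepalive_conf and the path /etc/nginx/keepalive-test.conf are hypothetical, and this is not how the configuration below is organized; the directive values are the ones it lists.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the keepalive settings for one test mode to a file
# that nginx.conf pulls in with "include <file>;" inside the http block.
# Usage: write_keepalive_conf <rps|tps> <file>
write_keepalive_conf() {
    local mode="$1" out="$2"
    case "$mode" in
        rps)  # keep connections open for as long as possible
            printf 'keepalive_timeout 300s;\nkeepalive_requests 1000000;\n' > "$out" ;;
        tps)  # allow just one request per connection
            printf 'keepalive_timeout 0;\nkeepalive_requests 1;\n' > "$out" ;;
        *)
            echo "unknown mode: $mode (expected rps or tps)" >&2
            return 1 ;;
    esac
}
```

After switching modes, for example with write_keepalive_conf tps /etc/nginx/keepalive-test.conf, running nginx -t && nginx -s reload applies the change.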

The configuration is a fairly standard reverse proxy server configuration otherwise, with NGINX Plus proxying to a web server using the proxy_pass directive.

    user nginx;
    worker_processes auto;
    worker_rlimit_nofile 10240;
    pid /var/run/nginx.pid;

    events {
        worker_connections 10240;
        accept_mutex off;
        multi_accept off;
    }

    http {
        access_log off;
        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for" "$ssl_cipher" '
                        '"$ssl_protocol" ';

        sendfile on;

        # RPS tests
        keepalive_timeout 300s;
        keepalive_requests 1000000;

        # SSL/TLS TPS tests
        #keepalive_timeout 0;
        #keepalive_requests 1;

        upstream webserver {
            server Web-Server-IP-address;
        }

        server {
            listen 80;
            listen 443 ssl backlog=102400 reuseport;

            ssl_certificate /etc/nginx/ssl/rsa-cert.crt;
            ssl_certificate_key /etc/nginx/ssl/rsa-key.key;
            ssl_session_tickets off;
            ssl_session_cache off;

            root /var/www/html;

            location / {
                proxy_pass http://webserver;
            }
        }
    }

NGINX Web Server Configuration

The configuration below was used on the NGINX Web Server. It serves static files from /var/www/html/, as configured by the root directive. The static files were generated using dd; this example creates a 1 KB file of zeroes:

    dd if=/dev/zero of=1kb.bin bs=1KB count=1

The configuration:

    user nginx;
    worker_processes auto;
    worker_rlimit_nofile 10240;
    pid /var/run/nginx.pid;

    events {
        worker_connections 10240;
        accept_mutex off;
        multi_accept off;
    }

    http {
        access_log off;
        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for" "$ssl_cipher" '
                        '"$ssl_protocol" ';

        sendfile on;

        keepalive_timeout 300s;
        keepalive_requests 1000000;

        server {
            listen 80;
            root /var/www/html;
        }
    }

To try NGINX Plus yourself, start a free 30-day trial today or contact us to discuss your use cases.